1 Introduction

Swarm optimization techniques have become a popular choice among researchers for classification and feature selection. Data mining is now a key research field; the main aim of the data mining process is to extract knowledge and use it to draw conclusions. In data mining, computational cost is strongly affected by the dimensionality of the data. Each dataset contains many samples, and each sample is described by a set of attributes known as features. Besides high dimensionality, a major limitation is the presence of irrelevant and redundant features, and a large number of features limits traditional machine learning methods. Feature selection (FS) methods are therefore used to reduce dimensionality and eliminate redundancy. The main goal of FS is to obtain the best subset of features that preserves high classification accuracy while representing the features of the original dataset.

Various feature selection methods have been used for classification. They fall into three major classes: filter, wrapper and embedded methods. Filter methods evaluate features based on their intrinsic characteristics, whereas wrapper methods use a classifier to assess the selected subset of features. Filter approaches are preferable when results are needed quickly; wrapper approaches usually achieve higher accuracy but are more time-consuming. The main objective of feature selection is to remove noisy features, which helps to maximize classification accuracy.
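To make the filter/wrapper distinction concrete, the following minimal Python sketch scores features without a classifier (a filter, here the absolute Pearson correlation with the label) and scores a feature subset with a classifier (a wrapper, here leave-one-out 1-nearest-neighbor accuracy). Both scoring choices and all function names are illustrative assumptions, not methods taken from this paper.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return 0.0 if sx == 0 or sy == 0 else cov / (sx * sy)

def filter_rank(X, y):
    """Filter: rank feature indices by |correlation with the label| (no classifier)."""
    scores = [abs(pearson([row[j] for row in X], y)) for j in range(len(X[0]))]
    return sorted(range(len(scores)), key=lambda j: -scores[j])

def wrapper_score(X, y, subset):
    """Wrapper: leave-one-out 1-NN accuracy using only the features in `subset`."""
    correct = 0
    for i, xi in enumerate(X):
        others = [k for k in range(len(X)) if k != i]
        nearest = min(others, key=lambda k: sum((X[k][j] - xi[j]) ** 2 for j in subset))
        correct += y[nearest] == y[i]
    return correct / len(X)

# Toy data: feature 0 determines the label, feature 1 is noise.
X = [[0, 1], [0, 2], [0, 3], [1, 1], [1, 2], [1, 3]]
y = [0, 0, 0, 1, 1, 1]
print(filter_rank(X, y))          # [0, 1]
print(wrapper_score(X, y, [0]))   # 1.0
print(wrapper_score(X, y, [1]))   # 0.0
```

The filter score is cheap because it never trains a model, while the wrapper score must run the classifier once per evaluated subset, which mirrors the speed/accuracy trade-off described above.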

Finding the best subset of features raises several problems. Random search, depth-first search, breadth-first search and hybrid approaches are some of the search methods used to determine a relevant feature subset. Exhaustive search is not applicable to large datasets, because selecting the best subset requires evaluating up to $2^d$ candidate subsets, where d is the number of features. In optimization, metaheuristic algorithms play a major role in finding good solutions in reasonable time; they reduce computational cost by replacing time-consuming exhaustive search.
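The growth of the search space can be checked directly. This small sketch (function names are hypothetical) enumerates every subset for a tiny d and computes $2^d$ for the smallest and largest feature counts in Table 1, showing why exhaustive search quickly becomes infeasible.

```python
from itertools import combinations

def count_subsets(d: int) -> int:
    """Number of candidate feature subsets (empty set included) for d features."""
    return 2 ** d

def enumerate_subsets(d: int):
    """Yield every subset of feature indices 0..d-1, as exhaustive search would."""
    for k in range(d + 1):
        yield from combinations(range(d), k)

print(len(list(enumerate_subsets(3))))   # 8
for d in (9, 24):                        # smallest and largest d in Table 1
    print(d, count_subsets(d))           # 9 512, then 24 16777216
```

Each extra feature doubles the number of subsets, so even the 24-feature German dataset already has over 16 million candidates, each of which would require training and testing a classifier in a wrapper setting.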

On the other hand, most metaheuristic approaches suffer from a lack of diversity, stagnation in local optima, and an imbalance between the exploration and exploitation capabilities of the algorithm. These problems can be obstacles to finding the best solutions, and they motivate us to find a method that overcomes them in feature selection. Grey Wolf Optimization has strong potential to overcome these problems [1], and a two-phase crossover operator can effectively improve the exploitation of the algorithm. The main contributions of this work are:

‒ A novel version of Grey Wolf Optimization (GWO) is developed.

‒ The influence of two different transformation functions is evaluated on various datasets.

‒ A two-phase crossover operator is implemented using small probabilities.

‒ TCGWO is compared with other traditional metaheuristics.

This work is organized as follows. Section 2 reviews the literature on feature selection. Section 3 describes the standard GWO algorithm and how it works. Section 4 presents the proposed algorithm, TCGWO (Two-Phase Crossover Grey Wolf Optimization). Section 5 reports the experimental study, and Section 6 concludes the work and gives suggestions for future research in this field.

2 Literature Review

Nature-inspired algorithms have contributed substantially to solving feature selection and classification problems, and many such approaches are reviewed in the literature. For example, [2] proposed a whale optimization algorithm that uses a wrapper method to find the optimal feature subset.

[3] introduced the sigmoid function for feature selection problems. Exploitation capabilities were enhanced by introducing simulated annealing, and this hybrid approach improved the best solution after each iteration. In 2018, [4] used crossover and mutation operators in the whale optimization algorithm. The problems of stagnation in local optima and slow convergence were tackled in [5] by combining chaotic search with the whale optimization algorithm.

In 2019, [6] proposed a binary grasshopper optimization algorithm (BGOA) that uses two mechanisms for improvement. The first mechanism employs a V-shaped transformation function, and the second uses a mutation operator to improve classification accuracy.

The Ant Lion Optimizer (ALO) was improved in [7] through two binary variants based on S-shaped and V-shaped functions. A novel binary butterfly optimization algorithm based on transformation functions was proposed in [8]. In [9], solution quality was improved by applying simulated annealing to the firefly algorithm.

Three different variations of Grey Wolf Optimization were proposed in [10] to increase its performance. In [11], the grey wolves are divided into two groups, dominant and omega, and a different approach is applied to each group. Moreover, various classifiers, such as decision trees, KNN and neural networks, have been used with GWO to improve classification accuracy for Alzheimer's disease detection [12]. Many other metaheuristic approaches have been proposed for feature selection [13, 14, 15, 16, 17]. Other works in the literature have improved feature selection by hybridizing various swarm-based optimization algorithms [18-22]; these works were analyzed in developing the proposed method.

3 GWO (Grey Wolf Optimizer)

Grey wolves are skilled hunters that show a high level of intelligence when catching prey, and they hunt in an organized manner. The GWO metaheuristic, proposed in [1], mimics the behavior of grey wolves in searching for, encircling and hunting their prey. The wolf community is divided into four groups according to the wolves' capabilities.

These four groups are named alpha, beta, delta and omega. In GWO, the alpha, beta and delta wolves correspond to the first, second and third best solutions, respectively, while the omega wolves are the remaining, weakest solutions.


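The encircling behavior of GWO is modeled as:

$$\vec{D} = \left| \vec{C} \cdot \vec{X}_p(t) - \vec{X}(t) \right|$$

$$\vec{X}(t+1) = \vec{X}_p(t) - \vec{A} \cdot \vec{D}$$

where $t$ is the current iteration, $\vec{X}_p$ is the position vector of the prey and $\vec{X}$ is the position vector of a wolf. The coefficient vectors are

$$\vec{A} = 2\vec{a} \cdot \vec{r}_1 - \vec{a}, \qquad \vec{C} = 2\vec{r}_2$$

where $\vec{r}_1$ and $\vec{r}_2$ are random vectors in $[0, 1]$ and the components of $\vec{a}$ decrease linearly from 2 to 0 over the iterations:

$$a = 2 - t \cdot \frac{2}{\mathit{Max\_iter}}$$

where $\mathit{Max\_iter}$ is the maximum number of iterations. In this way, a wolf at position (X, Y) updates its position with respect to the position of the prey.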
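The encircling step can be sketched in Python as below, assuming the standard formulation of [1] in which A = 2a·r1 − a, C = 2·r2 and a decreases linearly from 2 to 0. The function names are hypothetical, and each dimension draws its own random numbers.

```python
import random

def coefficient_a(t: int, max_iter: int) -> float:
    """Control parameter a, decreased linearly from 2 (t = 0) to 0 (t = max_iter)."""
    return 2.0 - t * (2.0 / max_iter)

def encircle(x, x_prey, a, rng=random):
    """One encircling step, applied per dimension:
    D = |C * X_p - X|,  X(t+1) = X_p - A * D,  with A = 2a*r1 - a and C = 2*r2."""
    new_x = []
    for xi, pi in zip(x, x_prey):
        r1, r2 = rng.random(), rng.random()
        A = 2.0 * a * r1 - a          # lies in [-a, a)
        C = 2.0 * r2                  # lies in [0, 2)
        D = abs(C * pi - xi)
        new_x.append(pi - A * D)
    return new_x

rng = random.Random(42)
a = coefficient_a(t=10, max_iter=30)
print(encircle([0.2, 0.8, 0.5], [0.6, 0.1, 0.9], a, rng))
```

When a reaches 0 at the last iteration, A becomes 0 and the wolf lands exactly on the prey position, which reflects the gradual shift from exploration toward exploitation.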

The position-update formulas based on the α, β and δ wolves are:

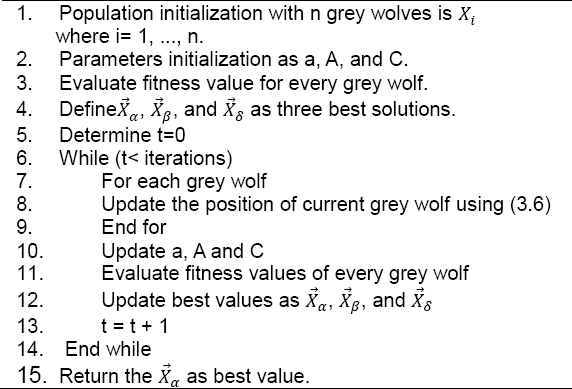
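Since the update formulas appear only as an image, the sketch below assumes they are the standard GWO hunting equations of [1]: each wolf computes one candidate position relative to each of the α, β and δ wolves and moves to their average. The function names are hypothetical, and fitness is minimized, matching the fitness function of Section 4.2.

```python
import random

def rank_leaders(population, fitness):
    """Return the alpha, beta and delta wolves: the three lowest-fitness solutions."""
    order = sorted(range(len(population)), key=lambda i: fitness[i])
    return tuple(population[i] for i in order[:3])

def hunt(x, alpha, beta, delta, a, rng=random):
    """Standard GWO position update (assumed): per dimension, compute
    X_k = L_k - A_k * |C_k * L_k - X| for each leader L_k and average the three."""
    new_x = []
    for j, xj in enumerate(x):
        candidates = []
        for leader in (alpha, beta, delta):
            A = 2.0 * a * rng.random() - a
            C = 2.0 * rng.random()
            candidates.append(leader[j] - A * abs(C * leader[j] - xj))
        new_x.append(sum(candidates) / 3.0)
    return new_x

population = [[0.1, 0.9], [0.4, 0.4], [0.7, 0.2], [0.3, 0.6]]
fitness = [0.30, 0.12, 0.25, 0.18]
alpha, beta, delta = rank_leaders(population, fitness)
print(alpha, beta, delta)   # [0.4, 0.4] [0.3, 0.6] [0.7, 0.2]
```

Averaging over three leaders rather than following only the best wolf keeps some diversity in the population, since the omega wolves are pulled toward a region defined by all three top solutions.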

Algorithm steps are as follows:

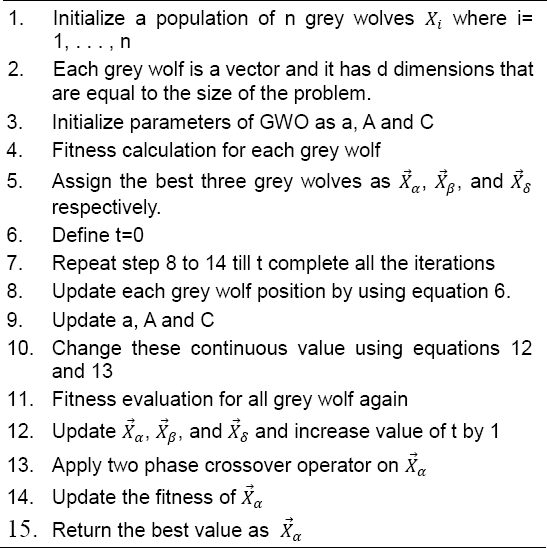

4 Proposed Algorithm

4.1 Initialization

The initialization phase randomly generates a population of n wolves. These are search agents, and each agent represents a possible solution. Each agent has dimension d, where d is the number of features in the given dataset. Feature selection is a common task in almost all classification problems.

Feature selection is the process of selecting a subset of features that helps to maximize classification accuracy. Each agent therefore selects some features and leaves out the others.

This is encoded with 0s and 1s: a value of 1 means the corresponding feature is selected and 0 means it is not.
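A minimal sketch of this binary initialization, assuming each bit is drawn uniformly at random. The function names are hypothetical, and the guard that forces at least one selected feature is an added assumption, not something stated in the text.

```python
import random

def init_population(n_wolves: int, n_features: int, rng=random):
    """Random binary population: wolf i is a list of d bits, 1 = feature selected."""
    population = []
    for _ in range(n_wolves):
        wolf = [rng.randint(0, 1) for _ in range(n_features)]
        if not any(wolf):                        # assumed guard: never select zero features
            wolf[rng.randrange(n_features)] = 1
        population.append(wolf)
    return population

def selected_features(wolf):
    """Indices of the features that this wolf keeps."""
    return [j for j, bit in enumerate(wolf) if bit == 1]

pop = init_population(n_wolves=5, n_features=13, rng=random.Random(7))  # e.g. WineEW, d = 13
print(pop[0], selected_features(pop[0]))
```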

4.2 Evaluation

In a multi-objective setting, the goal is to find the best trade-off between the objectives. Feature selection is such a multi-objective problem, with two major tasks: minimizing the classification error rate and minimizing the number of selected features.

To this end, each solution is evaluated with the following fitness function:

$$\mathit{Fitness} = \alpha \, \gamma_r(D) + \beta \, \frac{|s|}{|d|}$$

where $\gamma_r(D)$ is the classification error rate, computed with the KNN classifier [12].

|s| is the number of selected features and |d| is the total number of features of each sample. α and β are weight parameters, with $\alpha \in [0, 1]$ and $\beta = 1 - \alpha$.
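The fitness function translates directly into code. In this sketch the function name is hypothetical and the default α = 0.99 is only an assumed illustrative value, since the text does not state the weight used; lower fitness is better.

```python
def fitness(error_rate: float, n_selected: int, n_total: int, alpha: float = 0.99) -> float:
    """Fitness to minimize: alpha * gamma_r(D) + beta * |s| / |d|, with beta = 1 - alpha.

    alpha = 0.99 is an assumed example value; the text only requires alpha in [0, 1].
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if not 0 < n_selected <= n_total:
        raise ValueError("need 0 < |s| <= |d|")
    beta = 1.0 - alpha
    return alpha * error_rate + beta * n_selected / n_total

# Same error rate, fewer features -> lower (better) fitness.
print(fitness(0.12, n_selected=6, n_total=13))
print(fitness(0.12, n_selected=13, n_total=13))
```

With equal error rates, the subset with fewer features receives the lower fitness, which is how the second term rewards feature reduction without overriding accuracy when α is large.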

The larger weight is assigned to classification accuracy. However, if the evaluation function considered classification accuracy alone, the benefit of reducing the number of features would be neglected, which is why the second term is included. The KNN classifier is widely used because it is simple to implement. The dataset is divided into training and testing parts, and the K nearest neighbors of each test sample are found in the training data using the Euclidean distance:

$$\mathit{Euclidean}(P, Q) = \sqrt{\sum_{i=1}^{d} (P_i - Q_i)^2}$$

where $P_i$ and $Q_i$ are the values of the i-th feature of samples P and Q, and d is the number of features.

The training partitions are combined, and the classifier is then applied to the test data to predict the class label of each sample in the test partition. The percentage of incorrect predictions is called the classification error rate.
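The following sketch implements a plain KNN classifier restricted to the selected features, using the Euclidean distance above, and returns the classification error rate on a test partition. The value k = 5 and all function names are assumptions for illustration; the text does not state k.

```python
import math
from collections import Counter

def euclidean(p, q, features):
    """Euclidean distance between samples p and q over the selected feature indices."""
    return math.sqrt(sum((p[j] - q[j]) ** 2 for j in features))

def knn_predict(train_x, train_y, sample, features, k=5):
    """Majority label among the k training samples nearest to `sample`."""
    nearest = sorted(range(len(train_x)),
                     key=lambda i: euclidean(train_x[i], sample, features))[:k]
    votes = Counter(train_y[i] for i in nearest)
    return votes.most_common(1)[0][0]

def error_rate(train_x, train_y, test_x, test_y, features, k=5):
    """Fraction of test samples whose predicted label is wrong."""
    wrong = sum(knn_predict(train_x, train_y, s, features, k) != y
                for s, y in zip(test_x, test_y))
    return wrong / len(test_y)

train_x = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
train_y = [0, 0, 0, 1, 1, 1]
test_x, test_y = [[0.5, 0.5], [10.5, 10.5]], [0, 1]
print(error_rate(train_x, train_y, test_x, test_y, features=[0, 1], k=3))  # 0.0
```

Passing the selected feature indices into the distance is what makes the classifier act as a wrapper: each candidate mask changes the geometry the neighbors are computed in.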

4.3 Transformation Function

The GWO approach generates agent positions as continuous values, so it cannot be applied directly to feature selection, which is a binary problem. A transformation function is therefore used to convert these continuous values into binary values; the sigmoid and tanh functions are used for this purpose. The formula for this function is:

In this formula

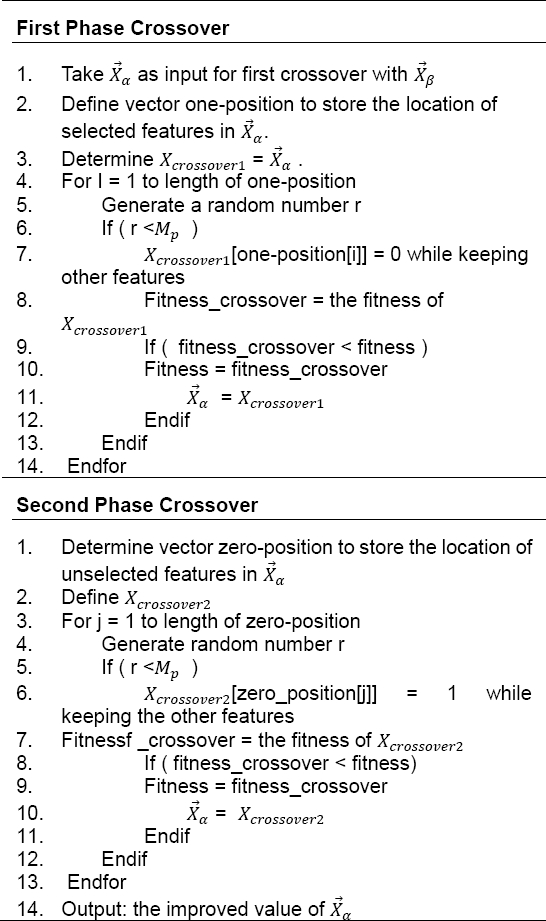
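A sketch of the two transformation functions, assuming the common S-shaped sigmoid 1/(1 + e^(−x)) and the V-shaped |tanh(x)|. For simplicity both use the same binarization rule (bit = 1 when a uniform random number falls below the transfer value). The exact formula used in TCGWO is not reproduced in the text, and V-shaped functions are often paired with a bit-flip rule instead, so treat the rule and the function names as assumptions.

```python
import math
import random

def sigmoid(x: float) -> float:
    """S-shaped transfer function 1 / (1 + e^(-x)), computed without overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def tanh_transfer(x: float) -> float:
    """V-shaped transfer function |tanh(x)|, with values in [0, 1]."""
    return abs(math.tanh(x))

def binarize(position, transfer=sigmoid, rng=random):
    """Map a continuous position to a 0/1 mask: bit j = 1 if rand < transfer(x_j)."""
    return [1 if rng.random() < transfer(xj) else 0 for xj in position]

rng = random.Random(3)
position = [-2.0, -0.3, 0.0, 0.4, 2.5]
print(binarize(position, sigmoid, rng))
print(binarize(position, tanh_transfer, rng))
```

The key difference is at x = 0: the sigmoid gives a 50% chance of selecting the feature, whereas |tanh| gives 0%, so the two functions bias the search toward different subset sizes.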

4.4 Two Phase Crossover

The next phase of the proposed algorithm is exploitation, which is carried out with a two-phase crossover operator. The first phase reduces the number of selected features while preserving classification accuracy. The second phase increases classification accuracy by adding further important features. The proposed algorithm can be explained by the following steps:

Step 13 has the following steps:
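The individual steps are not reproduced in the text, so the following is only a hypothetical sketch of how a two-phase crossover with a small probability p could realize the behavior described above: phase 1 inherits 0s from a partner wolf to drop features and keeps the child only if accuracy does not fall; phase 2 inherits 1s to add features and keeps the child only if accuracy rises. The function name, the choice of partner, the default p and the acceptance rules are all assumptions, and `evaluate` stands for any function returning classification accuracy for a mask (for example, 1 minus the KNN error rate).

```python
import random

def two_phase_crossover(best, partner, evaluate, p=0.05, rng=random):
    """Hypothetical two-phase crossover of the best wolf with a partner wolf.

    evaluate(mask) -> classification accuracy (higher is better).
    Phase 1: where best has 1 and partner has 0, inherit the 0 with probability p
             (drops features); accept only if accuracy does not decrease.
    Phase 2: where the current mask has 0 and partner has 1, inherit the 1 with
             probability p (adds features); accept only if accuracy increases.
    """
    current, acc = list(best), evaluate(best)

    # Phase 1: shrink the subset while preserving accuracy.
    child = [0 if bit == 1 and partner[j] == 0 and rng.random() < p else bit
             for j, bit in enumerate(current)]
    if any(child) and child != current:
        child_acc = evaluate(child)
        if child_acc >= acc:
            current, acc = child, child_acc

    # Phase 2: grow the subset only when accuracy improves.
    child = [1 if bit == 0 and partner[j] == 1 and rng.random() < p else bit
             for j, bit in enumerate(current)]
    if child != current:
        child_acc = evaluate(child)
        if child_acc > acc:
            current, acc = child, child_acc
    return current, acc

def toy_accuracy(mask):
    """Toy accuracy: features 0 and 3 help, the others are irrelevant."""
    return (5 + 3 * mask[0] + mask[3]) / 10

print(two_phase_crossover([1, 1, 1, 0], [1, 0, 0, 1], toy_accuracy, p=1.0))
# ([1, 0, 0, 1], 0.9)
```

The asymmetric acceptance rules (>= when removing, > when adding) encode the stated goals: phase 1 may trade nothing for fewer features, while phase 2 only adds a feature when it buys accuracy.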

5 Experimental Study

The proposed approach was run on a 64-bit Windows 10 operating system with an Intel Core i3 CPU and 6 GB of RAM.

5.1 Datasets

To test the strength of the TCGWO algorithm, we used 10 datasets taken from the UCI repository. Table 1 describes the datasets, giving the name, number of classes, number of features and number of samples of each.

Table 1 Data-sets description

| Sr. No. | Dataset name | Number of classes | Number of features | Number of samples |
|---|---|---|---|---|
| 1 | Breast cancer Coimbra | 2 | 9 | 116 |
| 2 | Breast cancer Tissue | 6 | 9 | 106 |
| 3 | Climate | 2 | 20 | 540 |
| 4 | German | 2 | 24 | 1000 |
| 5 | Vehicle | 4 | 18 | 846 |
| 6 | WineEW | 3 | 13 | 178 |
| 7 | Zoo | 7 | 16 | 101 |
| 8 | Lung Cancer | 2 | 21 | 226 |
| 9 | HeartEW | 5 | 13 | 270 |
| 10 | Parkinson | 2 | 22 | 195 |

5.2 Parameter Settings

The performance of TCGWO is compared with the following well-known metaheuristic algorithms: PSO, bWOA, FA and bGWOA.

The number of iterations is set to 30 for all experiments, and a 10-fold cross-validation strategy is applied. The KNN classifier is used as the learning algorithm of the wrapper approach because it is a simple and widely used supervised learner.

The data are divided into training and testing sets in a 9:1 ratio, with nine parts used for training and one for testing. The training data are used to train the KNN classifier.
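The 10-fold scheme can be sketched as follows: every fold serves once as the test set and the remaining nine form the training set, which gives the 9:1 ratio. The function name, the shuffling and the seed parameter are assumptions added for reproducibility.

```python
import random

def k_fold_indices(n_samples: int, k: int = 10, seed: int = 0):
    """Yield (train_idx, test_idx) for each of k folds; each fold is tested once."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[f::k] for f in range(k)]   # round-robin split into near-equal folds
    for f in range(k):
        train = [i for g in range(k) if g != f for i in folds[g]]
        yield train, folds[f]

splits = list(k_fold_indices(270))   # HeartEW has 270 samples (Table 1)
print(len(splits), len(splits[0][0]), len(splits[0][1]))   # 10 243 27
```

For HeartEW, each split therefore trains on 243 samples and tests on 27, and every sample is used for testing exactly once across the ten folds.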

5.3 The Effect of The Two-Phase Crossover Operator on the Proposed Algorithm

Previous research in this field has concluded that GWO-S performs better than other techniques, so we compared GWO-S with the first- and second-phase crossover variants. This experiment shows that both proposed variants achieve equal or better classification accuracy than GWO-S with equal or fewer selected features on all ten datasets.

Table 2 summarizes this comparison. The bold values mark the best results and show that the proposed approach matches or outperforms the other algorithms in both feature reduction and classification accuracy.

Table 2 Comparison of GWO-S with the two-phase crossover variants TCGWO1 and TCGWO2

| Dataset | GWO-S selected features | GWO-S accuracy | TCGWO1 selected features | TCGWO1 accuracy | TCGWO2 selected features | TCGWO2 accuracy | Full selected features | Full accuracy |
|---|---|---|---|---|---|---|---|---|
| Breast cancer Coimbra | 4 | 0.7354 | 4 | 0.7354 | 4 | 0.7354 | 9 | 0.3715 |
| Breast cancer Tissue | 4 | 0.3296 | 4 | 0.3296 | 4 | 0.3300 | 9 | 0.1995 |
| Climate | 8 | 0.9201 | 7 | 0.9201 | 6 | 0.9312 | 20 | 0.8868 |
| German | 19 | 0.7402 | 15 | 0.7550 | 15 | 0.7550 | 24 | 0.6858 |
| Vehicle | 13 | 0.7250 | 9 | 0.7314 | 10 | 0.7340 | 18 | 0.6570 |
| WineEW | 11 | 0.9342 | 7 | 0.9420 | 7 | 0.9420 | 13 | 0.6642 |
| Zoo | 10 | 0.9595 | 9 | 0.9595 | 8 | 0.9595 | 16 | 0.9400 |
| Lung Cancer | 11 | 0.8763 | 7 | 0.8796 | 6 | 0.8810 | 21 | 0.8312 |
| HeartEW | 8 | 0.8123 | 6 | 0.8306 | 6 | 0.8402 | 13 | 0.6620 |
| Parkinson | 10 | 0.8576 | 8 | 0.8601 | 6 | 0.8605 | 22 | 0.7420 |

5.4 Comparison of the Proposed Algorithm and other Metaheuristic Algorithms

In the next experiment, TCGWO is compared with several well-known swarm optimization approaches (FA, PSO, bWOA and bGWOA) in terms of classification accuracy. The bold values in Table 3 mark the results that are equal to or better than those of the previously proposed algorithms: TCGWO matches or exceeds the best competing accuracy on nine of the ten datasets, the exception being Lung Cancer, where bGWOA is higher (0.8864 versus 0.8810). Fitness value is another important comparison criterion, and Table 4 presents the average fitness values; a lower fitness value indicates better performance. TCGWO obtains the lowest average fitness on nine of the ten datasets, the exception being Zoo, where bGWOA (0.0460) and bWOA (0.0484) are lower than TCGWO (0.0492).

Table 3 Comparison of TCGWO with other metaheuristics based on classification accuracy

| Dataset | PSO | bWOA | FA | bGWOA | Full | TCGWO |
|---|---|---|---|---|---|---|
| Breast cancer Coimbra | 0.7354 | 0.7354 | 0.7354 | 0.7354 | 0.3715 | 0.7354 |
| Breast cancer Tissue | 0.3200 | 0.3200 | 0.3300 | 0.3300 | 0.1995 | 0.3300 |
| Climate | 0.9202 | 0.9220 | 0.9270 | 0.9239 | 0.8868 | 0.9312 |
| German | 0.7410 | 0.7395 | 0.7430 | 0.7440 | 0.6858 | 0.7550 |
| Vehicle | 0.7256 | 0.7301 | 0.7261 | 0.7290 | 0.6570 | 0.7340 |
| WineEW | 0.9310 | 0.9320 | 0.9420 | 0.9350 | 0.6642 | 0.9420 |
| Zoo | 0.9595 | 0.9595 | 0.9595 | 0.9595 | 0.9400 | 0.9595 |
| Lung Cancer | 0.8761 | 0.8764 | 0.8764 | 0.8864 | 0.8312 | 0.8810 |
| HeartEW | 0.8215 | 0.8160 | 0.8402 | 0.8402 | 0.6620 | 0.8402 |
| Parkinson | 0.8605 | 0.8590 | 0.8605 | 0.8570 | 0.7420 | 0.8605 |

Table 4 Comparison of average fitness values

| Dataset | PSO | bWOA | FA | bGWOA | Full | TCGWO |
|---|---|---|---|---|---|---|
| Breast cancer Coimbra | 0.2802 | 0.2740 | 0.2820 | 0.2740 | 0.3220 | 0.2670 |
| Breast cancer Tissue | 0.7041 | 0.7012 | 0.6980 | 0.6890 | 0.8040 | 0.6821 |
| Climate | 0.0890 | 0.0865 | 0.0838 | 0.0890 | 0.1210 | 0.0806 |
| German | 0.2750 | 0.2723 | 0.2704 | 0.2720 | 0.3230 | 0.2602 |
| Vehicle | 0.2870 | 0.2843 | 0.2873 | 0.2874 | 0.3512 | 0.2750 |
| WineEW | 0.0799 | 0.0810 | 0.0752 | 0.0790 | 0.0849 | 0.0642 |
| Zoo | 0.0528 | 0.0484 | 0.0528 | 0.0460 | 0.0860 | 0.0492 |
| Lung Cancer | 0.1380 | 0.1379 | 0.1362 | 0.1372 | 0.1775 | 0.1182 |
| HeartEW | 0.2175 | 0.2056 | 0.2058 | 0.2061 | 0.3438 | 0.1770 |
| Parkinson | 0.1662 | 0.1565 | 0.1672 | 0.1651 | 0.5630 | 0.1406 |

The average fitness values also indicate the better performance of the proposed algorithm. Most of the results show that the proposed algorithm performs well in terms of both classification accuracy and the number of selected features. The robustness and solution quality of the TCGWO algorithm are improved by using the sigmoid function, and the two-phase crossover operator improves its exploitation phase.
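The per-dataset claims can be checked with a short script over the values reported in Tables 3 and 4 (the Full columns are omitted because they are not competing algorithms). The helper name is hypothetical; it lists the datasets on which TCGWO ties or beats every competitor.

```python
ALGOS = ("PSO", "bWOA", "FA", "bGWOA", "TCGWO")   # column order in Tables 3 and 4

# Classification accuracy from Table 3 (higher is better).
ACCURACY = {
    "Breast cancer Coimbra": (0.7354, 0.7354, 0.7354, 0.7354, 0.7354),
    "Breast cancer Tissue": (0.3200, 0.3200, 0.3300, 0.3300, 0.3300),
    "Climate": (0.9202, 0.9220, 0.9270, 0.9239, 0.9312),
    "German": (0.7410, 0.7395, 0.7430, 0.7440, 0.7550),
    "Vehicle": (0.7256, 0.7301, 0.7261, 0.7290, 0.7340),
    "WineEW": (0.9310, 0.9320, 0.9420, 0.9350, 0.9420),
    "Zoo": (0.9595, 0.9595, 0.9595, 0.9595, 0.9595),
    "Lung Cancer": (0.8761, 0.8764, 0.8764, 0.8864, 0.8810),
    "HeartEW": (0.8215, 0.8160, 0.8402, 0.8402, 0.8402),
    "Parkinson": (0.8605, 0.8590, 0.8605, 0.8570, 0.8605),
}

# Average fitness from Table 4 (lower is better).
FITNESS = {
    "Breast cancer Coimbra": (0.2802, 0.2740, 0.2820, 0.2740, 0.2670),
    "Breast cancer Tissue": (0.7041, 0.7012, 0.6980, 0.6890, 0.6821),
    "Climate": (0.0890, 0.0865, 0.0838, 0.0890, 0.0806),
    "German": (0.2750, 0.2723, 0.2704, 0.2720, 0.2602),
    "Vehicle": (0.2870, 0.2843, 0.2873, 0.2874, 0.2750),
    "WineEW": (0.0799, 0.0810, 0.0752, 0.0790, 0.0642),
    "Zoo": (0.0528, 0.0484, 0.0528, 0.0460, 0.0492),
    "Lung Cancer": (0.1380, 0.1379, 0.1362, 0.1372, 0.1182),
    "HeartEW": (0.2175, 0.2056, 0.2058, 0.2061, 0.1770),
    "Parkinson": (0.1662, 0.1565, 0.1672, 0.1651, 0.1406),
}

def tcgwo_at_least_as_good(table, higher_is_better):
    """Datasets where TCGWO (last column) ties or beats every competing algorithm."""
    hits = []
    for name, row in table.items():
        ours, others = row[-1], row[:-1]
        better = ours >= max(others) if higher_is_better else ours <= min(others)
        if better:
            hits.append(name)
    return hits

acc_hits = tcgwo_at_least_as_good(ACCURACY, higher_is_better=True)
fit_hits = tcgwo_at_least_as_good(FITNESS, higher_is_better=False)
print(len(acc_hits), [n for n in ACCURACY if n not in acc_hits])  # 9 ['Lung Cancer']
print(len(fit_hits), [n for n in FITNESS if n not in fit_hits])   # 9 ['Zoo']
```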

The KNN classifier contributes to solution quality because it is an effective learner within the wrapper method. These results indicate the advantage of TCGWO in terms of feature selection, fitness values and classification accuracy.

6 Conclusion

This work introduces a new approach to handling feature selection with the grey wolf algorithm, improved by a crossover operator applied in two phases. The results show that the crossover operator improves classification accuracy. Both phases use small probabilities to improve the results. The KNN classifier is used to train the model, and 10-fold cross-validation is used to reduce overfitting.

TCGWO provides equal or better classification accuracy than FA, PSO, bWOA and bGWOA on nine of the ten datasets, and the lowest average fitness on nine of the ten datasets.
