1 Introduction

Probabilistic topic models such as Latent Dirichlet Allocation (LDA) [2], are widely used to uncover hidden topics within text corpus. In LDA, each document may be viewed as a mixture of latent topics where each topic is a distribution over words. With statistical inference algorithms, LDA reveals latent topics using document-level word co-occurrence.

In recent years, a number of extended topic models have been proposed, especially Gaussain LDA(G-LDA) [3] that integrates LDA with word embeddings has gained much attention. G-LDA uses Gaussian distribution as the topic distribution over words.

Furthermore, Batmanghelich et al. [1] proposed spherical Hierarchical Dirichlet Process (sHDP) which use the von Mises-Fisher distribution as the topic distribution to model the density of words over unit sphere. They used the Hierarchical Dirichlet Process (HDP) for their base topic model and apply Stochastic Variational Inference (SVI) [5] for efficient inference.

They showed that sHDP is able to exploit the semantic structures of word embeddings and flexibly discovers the number of topics. Hu et al. [7] proposed Latent Concept Topic Model (LCTM) which introduces latent concepts to G-LDA. LCTM models each topic as a distribution over latent concepts, where each concept is a localized Gaussian distribution in word emgedding space.

They reported that LCTM is well suited for extracting topics from short texts with diverse vocaburaly such as tweets. Xun et al. [15] proposed a correlated topic model using word embeddings. Their model enables us to exploit the additional word-level correlation information in word embeddings and directly models topic correlation in the continuous word embedding space.

Nguyen et al. [12] proposed Latent Feature LDA (LF-LDA) which integrates word embeddings into LDA by replacing the topic-word Dirichlet multinomial component with a mixture of a Dirichlet multinomial component and a word embedding component. They compared the performance of LF-LDA to vanilla LDA on topic coherence, document clustering and document classification evaluations and showed that LF-LDA improves both topic-to-word mapping and document-topic assignments compared to vanilla LDA, especially on datasets with few or short documents. Kumar et al. [14] presented an unsupervised topic model for short texts that performs soft clustering over word embedding space.

They modeled the low-dimensional semantic vector space represented by word embeddings using Gaussian mixture models (GMMs) whose components capture the notion of latent topics. Their proposed framework outperforms vanilla LDA on short texts through both subjective and objective evaluation, and showed its usefulness in learning topics and classifying short texts on Twitter data for several foreign languages.

Zhao et al. [17] proposed a focused topic model where how a topic focuses on words is informed by word embeddings. Their models are able to discover more informed and focused topics with more representative words, leading to better modelling accuracy and topic quality. Moody [10] proposed a model, called lda2vec, which learns dense word vectors jointly with Dirichlet distributed latent document-level mixtures of topic vectors.

His method is simple to incorporate into existing automatic differentiation frameworks and allows for unsupervised document representations geared for use by scientists while simultaneously learning word vectors and the linear relationships between them. Yao et al. [16] proposed Knowledge Graph Embedding LDA (KGE-LDA), which combines topic model and knowledge graph embeddings.

KGE-LDA models document level word co-occurrence with knowledge encoded by entity vectors learned from external knowledge graphs and can extract more coherent topics and better topic representation. In this paper, we use G-LDA as our base topic model. Compared with vanilla LDA, G-LDA produces higher Pointwise Mutual Information (PMI) in each topic because it has semantic information of words as prior knowledge.

In addition, because G-LDA operates on the continuous vector space, it can handle out of vocabulary (OOV) words in held-out documents whereas the conventional LDA cannot. On the other hand, the cost for estimating the posterior probability distribution for latent topics in word embedding space is costly because of dealing with the high dimensional information of words. So, it is unpractical to use the methods which take much time to estimate the posterior probability distribution such as Gibbs sampling. To reduce the cost for estimating the posterior probability distribution, G-LDA utilizes Cholesky decomposition of covariance matrix and applies Alias Sampling [8] for that.

In a similar case of dealing with high dimensional data, it is also difficult to estimate latent topics in massive documents using sampling methods. To deal with this problem, Hoffman et al. [4] developed online Variational Bayes (VB) for LDA. Their model is handily applied to massive and streaming document collections.

Their proposed method, online variational Bayes, becomes well known as “Stochastic Variational Inference” [13, 5]. Referring to their approach, in this paper, we propose a method to efficiently estimate latent topics in the high dimensional space of word embeddings by adopting SVI (Stochastic Variational Inference).

2 LDA and Gaussian LDA

2.1 Latent Dirichlet Allocation (LDA)

LDA [2] is a probabilistic generative model of document collections. In LDA, each topic has a multinomial distribution

Distributions

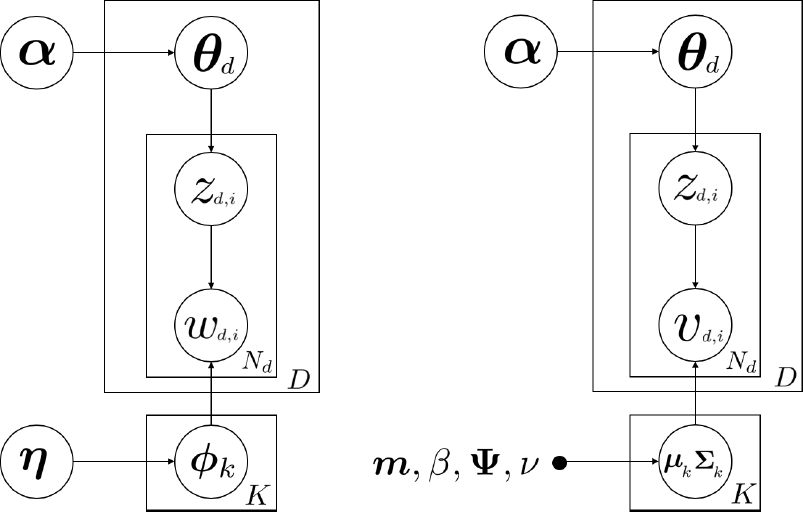

The graphical model for LDA is shown in the left side of Figure 1.

2.2 Gaussian LDA (G-LDA)

Hu et al. [6] proposed a new method to model the latent topic in the task of audio retrieval, in which each topic is directly characterized by Gaussian distribution over audio features.

Das et al. [3] presented an approach for accounting for semantic regularities in language, which integrates the model proposed by Hu et al. [6] with word embeddings. They use word2vec [9], to generate skip-gram word embeddings from unlabeled corpus.

In this model, they characterize each topic

Because the observations are no longer discrete values but continuous vectors, word vectors are sampled from continuous topic distributions. They reported that G-LDA produced higher PMI score than conventional LDA as the result of the experiment, which means topical coherence was improved.

Because G-LDA uses continual distributions as the topic distributions over words, it can assign latent topics to OOV words without training the model again, whereas the original LDA cannot deal with those words. The generative process is as follows:

Although

3 Posterior Inference with SVI

Sampling method such as Gibbs sampler is widely used to perform approximate inference in topic modeling. Although Gibbs sampler has an advantage for easy implementation, it takes much time to estimate a posterior distribution.

Hence, we employ an efficient inference algorithm based on VB, i.e., Stochastic Variational Inference (SVI) [5], to estimate the posterior probability distributions of the latent variables. SVI is an efficient algorithm for large datasets because it can sequentially process batches of documents.

With VB inference, the true posterior probability distribution is approximated by a simpler distribution

These parameters are optimized to maximize the Evidence Lower BOund (ELBO), a lower bound on the logarithm of the marginal probability of the observations

Based on the assumption that variables are independent in the mean-field family, approximate distribution

Let

SVI needs not analyze the whole data set before improving the global variational parameters and can apply new data which is constantly arriving, while VB requires a full pass through the entire corpus at each iteration.

Thus, we apply the stochastic natural gradient descent to update the parameters

Here:

The weight for

where,

4 Experiments

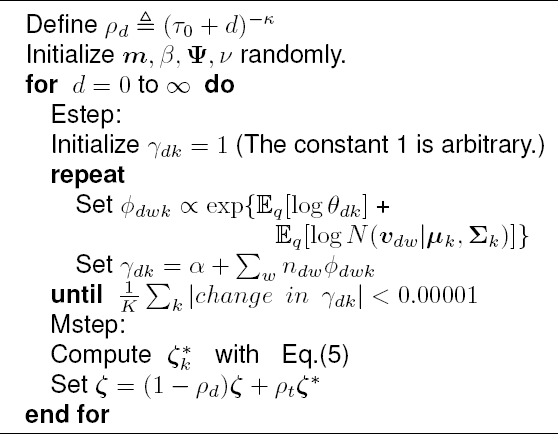

We construct a model which integrates SVI to word vector topic model following Algorithm 1 and conduct the experiment of topic extraction. In this paper, we evaluate whether our model is able to find coherent and meaningful topics compared with the conventional LDA.

4.1 Experimental Setting

We perform experiments on two different text corpora: 18846 documents from 20Newsgroupsfn and 1740 documents from the NIPSfn. We utilize 50-dimensional word embeddings trained on text from Wikipedia using word2vec and run out the model with various number of topics (

4.2 Evaluation

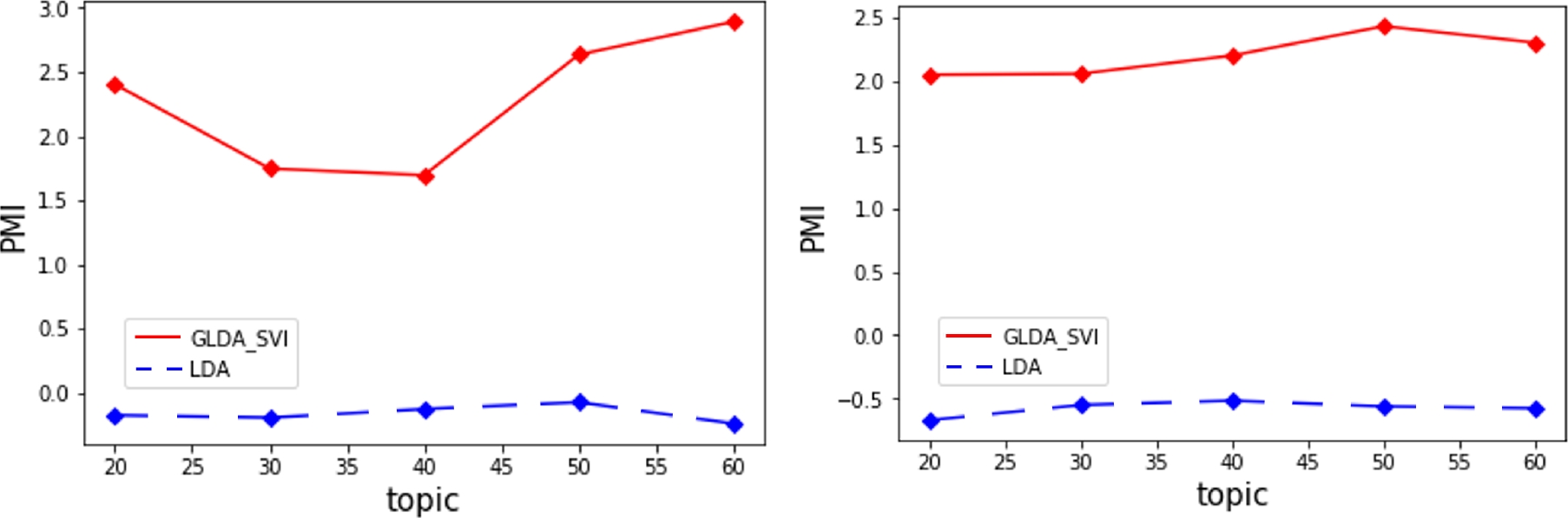

We use PMI score to evaluate the quality of topics learnt by our models as well as it is used to evaluate the ability of G-LDA [3]. Newman et al. [11] showed that PMI has relatively good agreement with human scoring.

We use a reference corpus of documents from Wikipedia and use co-occurence statistics over pairs of words

We use the average of the score of top 10 words of each topic. A higher PMI score implies a more coherent topic as it means the topic words usually co-occur in the same document.

4.3 Result

The experimental results of PMI on 20Newsgroups and NIPS-datasets are shown in Figure 2. We plot the average of the PMI scores for the top 10 words in each topic, the result of 20Newsgroups with the parameters of

It is clearly seen that our model outperforms the conventional LDA in terms of PMI score. Some examples of top topic words are listed in Table 1 and Table 2. The parameter settings is the same as above and we present the top 10 topics in descending order.

Table 1 Top 10 words of some topics from our model and multinomial LDA on 20Newsgroups for K = 40 and PMI score

| Gaussian LDA topics | |||||||||

| cie | geophysics | manning | authenticicity | beasts | ton | acts | disasters | provoke | normals |

| informatik | astrophysics | neely | veracity | creatures | tons | exercising | disaster | provocation | histograms |

| nos | physics | carney | credence | demons | gallon | coercion | hazards | futile | gaussian |

| gn | meteorology | brady | assertions | monsters | mv | act | catastrophic | suppress | linear |

| nr | astronomy | wilkins | inaccuracies | eleves | cargo | enforcing | devastation | resorting | symmetric |

| sta | geophysical | brett | particulars | spirits | cruiser | collective | dangers | threatening | histogram |

| vy | geology | seaver | texttual | unicorns | pound | proscribed | pollution | aggression | vectors |

| gl | astrophysical | reggie | merits | denizens | pounds | regulating | destruction | urge | inverse |

| cs | chemistry | ryan | substantiate | magical | corvettes | initiating | impacts | inflict | graphs |

| ger | microbiology | wade | refute | gods | guns | involving | destructive | expose | variables |

| 6.6429 | 6.2844 | 5.3070 | 5.0646 | 4.3270 | 3.6760 | 3.1671 | 2.8486 | 2.7723 | 2.7408 |

| Multinomial LDA topics | |||||||||

| drive | ax | subject | data | south | la | supreme | writes | key | goverment |

| disease | max | lines | doctors | book | goal | bell | article | code | law |

| ard | a86 | server | teams | lds | game | at&t | organization | package | gun |

| scsi | 0d | organization | block | published | cal | zoology | senate | window | clinton |

| drives | 1t | spacecraft | system | adl | period | subject | subject | data | congress |

| disk | giz | spencer | spave | armenian | bd | covenant | dod | information | clipper |

| subject | 3t | program | output | books | roy | suggesting | lines | anonymous | key |

| daughter | cx | space | pool | documents | 55.0 | lines | income | ftp | clayton |

| unit | bh | software | resources | isubject | its | off | deficit | program | federal |

| organization | kt | graphic | bits | information | season | origins | year | source | constitution |

| 2.3514 | 2.2500 | 1.3700 | 1.1216 | 1.0528 | 0.8338 | 0.7092 | 0.4531 | 0.4501 | 0.4355 |

Table 2 Top 10 words of some topics from our model and multinomial LDA on NIPS for K = 40 and PMI score

| Gaussian LDA topics | |||||||||

| topological | ginzburg | mitsubishi | negation | m.s | generalize | vx | gcs | behaviors | describes |

| projective | goldmann | vw | predicate | ms | analytically | xf | dcs | behaviours | describing |

| subspaces | jelinek | gm | disjunction | m/s | generalizations | vf | tss | behavior | interprets |

| symplectic | kolmogorov | motors | predicates | bd | intuitively | vz | rbp | behaviour | discusses |

| homotopy | markov | flat | propositional | tat | generalizing | r4 | sdh | biases | illustrates |

| topology | pinks | dyna | priori | dd | computable | xr | modulators | arousal | relates |

| euclidian | christof | integra | reflexive | stm | theretic | rx | signalling | behavioural | identifies |

| integrable | koenig | combi | duality | bs | solvable | tlx | mds | behaviorally | characterizes |

| subspace | engel | gt | categorical | lond | generalization | t5 | analysers | attentional | demonstrates |

| affine | lippmann | suzuki | imperfect | bm | observable | spec | bss | predisposition | observes |

| 11.3463 | 9.4716 | 6.8211 | 6.7072 | 4.8832 | 4.4388 | 4.2489 | 3.6088 | 3.4125 | 3.3666 |

| Multinomial LDA topics | |||||||||

| model | network | learning | network | neural | network | network | learning | function | network |

| figure | model | network | algorithm | networks | networks | neural | neural | network | model |

| neural | input | figure | neural | model | neural | funtion | figure | model | input |

| learning | learning | data | learning | input | input | input | network | neural | learning |

| input | neural | units | training | data | learning | learning | data | training | data |

| network | networks | model | input | learning | data | model | input | learning | system |

| output | output | input | output | function | training | networks | training | set | training |

| number | function | set | networks | figure | output | figure | function | algorithm | neural |

| function | data | neural | set | units | number | output | model | data | function |

| data | figure | output | function | output | set | training | output | figure | output |

| 0.4945 | 0.4302 | 0.3506 | 0.3232 | 0.2280 | 0.1759 | -0.0473 | -0.1412 | -0.1784 | -0.2415 |

In the last line of the tables, we present the PMI score for 10 topics for both our model and the traditional LDA. We see that the topics of our model seems more coherent than the baseline model.

In addition, our model is able to capture several intuitive topics in the corpus such as natural science, mythology and cargo in Table 1, mathematics and car in Table 2. In particular, our model discovered the collection of human names, which was not captured by traditional LDA.

5 Conclusions and Future Work

Traditional topic models do not account for semantic regularities in language such as contextual relation of words as expressed in word embedding space. Therefore, G-LDA integrates the conventional topic model with word embeddings.

However, dealing with high dimensional data such as word vectors in embedding space requires costly computation. So, G-LDA employs faster sampling using Cholesky decomposition of covariance matrix and Alias Sampling.

On the other hand, Stochastic Variational Inference is much faster inference method than Markov chain Monte Carlo (MCMC) sampler such as Gibbs sampling and can deal with enormous dataset. Hence, we draw attention to SVI with expectation that SVI is also effective to handle high dimensional data.

In this paper, we have proposed to apply efficient inference algorithm based on SVI to the topic model with word embeddings. As a qualitative analysis, we have verified the coherence in the extracted latent topics through the experiments and confirmed that our model is able to extract meaningful topics as G-LDA is.

In the future work, we will observe perplexity convergence to evaluate the inference speed and the soundness of our model.

nueva página del texto (beta)

nueva página del texto (beta)