1 Introduction

Natural language understanding (NLU) is an important and challenging subset of natural language processing (NLP). NLU is considered as the post-processing of text, after NLP techniques are applied on texts. Semantic Role Labeling (SRL) is a natural language understanding task that extracts semantic constituents of a sentence for answering who did what to whom. SRL is a shallow semantic parsing task whose primary goal is to identify the semantic roles and the relationship among them and therefore, has wide application in other NLP tasks such as Information Extraction [3] ,Question Answering [33,21,9], Machine Translation [16,36,38] and Multi-document Abstractive Summarization [10].

The study of semantic roles was first introduced by the Indian grammarian Panini[4] in his "Karaka" theory. Karaka theory assigns generic semantic roles to words in a natural language sentence. The relationship of the arguments with the verb is described using relations called Karaka relations. Karaka relations describe the way in which arguments participate in the action described by the verb. Several lexical resources for SRL have been developed such as PropBank [22], FrameNet [2] and VerbNet [31] that define different semantic role sets.

Gildea and Jurafsky [11] developed the first automatic semantic role labeling system based on FrameNet. Subsequent works [26,27,23] are considered as traditional approaches that explored the syntactic features for capturing the overall sentence structure. Most of the SRL works are based on the PropBank [22] role set and use the CoNLL-2005 [5] shared task datasets. These datasets are mainly sections from the World Street Journal (WSJ) articles. Though there have been significant developments in studying SRL, most of the state-of-the-art SRL systems have been developed for formal texts only. However, this paper describes SRL implementation on a different genre of texts called tweets 1.

Twitter is a micro-blogging site that allows a user to post texts (often known as tweets) within the limit of 280 characters. Tweets are often found to be informal in nature and tend to be without proper grammatical structures. Use of phonetic typing, abbreviations, word play and emoticons are very common in tweets which therefore, make it a difficult task to perform SRL on such informal texts. Let us illustrate the nature of tweets with some examples.

Examples:

— (1) Abbreviation:

– IMHO, Elvis is still the king of rock.

— (2) Wordplay:

– Sometime things change from wetoo to #MeToo.

In example (1), IMHO is an abbreviation for in my humble opinion, whereas in example (2), wetoo is the merger of we and too. In addition to the variations described in examples (1) and (2), users often apply creative writings such as the word before is often written as b4. These examples suggest that users are at the liberty to write tweets without following the syntactical requirements but maintaining the semantics. Following the above discussions, it appears that performing SRL on tweets is a difficult task. Therefore, the state-of-the-art SRL systems meant for formal texts, are not expected to perform well on tweets. From the available lexical resources for SRL, PropBank is the most commonly studied role set.

However, annotations based on the PropBank role set requires sufficient knowledge about the constituent arguments of a predicate. Therefore, instead of using the PropBank role set, we adopted the concept of 5W1H (Who, What, When, Where, Why, How) as described in [6]. 5W1H concept is widely used in journalism because an article is considered complete only when all the 5W1H are present. The concept of 5W1H is similar to the Karaka relations and easy to understand. We discuss in detail about 5W1H in later sections.

The major contributions of this paper are:

— Development of a corpus for 5W1H extraction from tweets.

— Development of a Deep Neural Network for the 5W1H extraction from tweets.

The rest of the paper is organized as follows. Section 2 discusses the related work. Section 3 discusses the background concepts on SRL. In section 4 the deep neural network implementation is discussed. Section 5 discusses the experiments performed. Results are discussed in section 6 followed by analysis in section 7. We conclude the paper in section 8.

2 Related Work

Though the traditional approaches of Gildea and Jurafsky [11], Pradhan et al. [26],Punyakanok et al. [27] explored the syntactic features, recently, deep neural network based implementations have outperformed the traditional approaches. Zhou and Xu [40] were the first to build an end-to-end system for SRL, where an 8 layered LSTM model was applied which resulted in outperforming the previous state-of-the-art system. To assign semantic labels to syntactic arguments, Roth and Lapata [29] proposed a neural classifier using dependency path embeddings.

He et al. [13] developed a deep neural network with highway LSTMs and constrained decoding that improved over earlier results. To encode syntactic information at word level, Marcheggiani and Titov [19] implemented their system by combining a LSTM network with a graph convolutional network which improved their LSTM classifier results on the CoNLL-2009 dataset.

Attention mechanism was pioneered by Bahdanau et al. [1]. Cheng et al. used [7] LSTMs and self-attention to facilitate the task of machine reading. Tan et al. [35] implemented a self-attention based neural network for SRL without explicitly modeling any syntax that outperformed the previous state-of-the-art results. Strubell et al. [32] implemented a neural network model that combines multi-head self-attention with multi-task learning across dependency parsing, part-of speech tagging, predicate detection and SRL. Their [32] method achieved the best scores on the ConLL-2005 dataset. Liu et al. [17] are the first to study SRL on tweets. They considered only those tweets that reported news events.

They trained a tweet specific system which is based on the mapping between predicate-argument structures from news sentences and news tweets. They further extended their work in [18] where similar tweets are grouped by clustering. Then for each cluster a two-stage SRL labeling is conducted. [20] describe a system for emotion detection from tweets.Their work mainly focuses on identification of roles for Experiencer, State and Stimulus of an emotion. [30] proposed an SRL system for tweets using sequential minimal optimisation (SMO) [25]. Our work adopts the 5W1H extraction for SRL using deep neural network attention mechanism of Bahdanau et al. [1].Our experiments also show that the attention mechanism is effective on the sequence labeling task of 5W1H.

3 Background

3.1 PropBank based SRL

This section describes the SRL based on the PropBank role set. We first discuss what SRL is and then describe how the PropBank role set is applied for the SRL task. A sentence may represent an event through different surface forms. Let us consider the event of someone (John) hitting (event) someone (Steve).

Example:

(3) Yesterday, John hit Steve with a stick

The above sentence has different surface level forms such as:

— Steve was hit by John yesterday with a stick

— Yesterday, Steve was hit with a stick by John

— With a stick, John hit Steve yesterday

— Yesterday Steve was hit with a stick by John

— John hit Steve with a stick yesterday

In the above example, despite having different surface level representations, the event is described by the verb (hit) where "John" is the syntactic subject and "Steve" is the syntactic object. A subject in a sentence is the causer of the action (verb) whereas, an object is the recipient. From example (3), we are able to represent the fact that there was an event of assault, that the participants in the event are John and Steve, and that John played a specific role, the role of hitting Steve.

These shallow semantic representations are called semantic roles. For a given sentence, the objective of SRL is to first identify the predicates (verb) and the arguments; and classify those arguments of each predicate (verb). In PropBank, every verb (predicate) is described by some senses and for each verb sense, there are specific set of roles defined. For example, the verb hit has five different senses in the PropBank database as shown below (only two senses are shown here):

Applying PropBank role set on the sentence in example (3) yields the following semantic roles:

3.2 Modelling 5W1H

The 5W1H (Who, What, When, Where, Why and How) model has been attributed to Hermagoras of Temnos [28] who was an Ancient Greek rhetorician which was further conceptualized by Thomas Wilson [37]. Nowadays, 5W1H is often used in journalism to cover a report. The 5W1H are considered as the answers to a reporter's questions, which are considered as the ground for information gathering. In journalism, a report is considered complete only if it answers to the question of Who did what, when, where, why and how.

Let w = {w1,w2,…,wn} be the sequence of words in a tweet and X be the attribute to which w is to be mapped. We therefore, assume a tweet as (w, X), where, X is the tuple (WHO, WHAT, WHEN, WHERE, WHY, HOW) in 5W1H.

3.2.1 Defining 5W1H

In this sub section, we define the 5W1H in line with the definitions of [39]. Let w = "John met her privately, in the hall, on Monday to discuss their relationship":

Definition 1: Who. It is the set of words that refer to a person, a group of people or an institution involved in an action.

In w, Who={ John }

Definition 2: What. It is the set of words that refer to the people, things or abstract concepts being affected by an action and which undergo the change of state.

In w, What={ met her }

Definition 3: When. It is the set of words that refer to temporal characteristics. In tweets, the notion of time may be the days, weeks, months and year of a calendar or the tick of a clock. It also refers to the observations made either before, after or during the occurrence of events such as festivals, ceremonies, elections etc.

In w, When={ on Monday }

Definition 4: Where. It is the set of the words that refer to locative markers in a tweet. The notion of location is not restricted to physical locations but it also refers to abstract locations.

In w, Where={ in the hall }

Definition 5: Why. It is the set of words that refer to the cause of an action.

In w, Why={ to discuss their relationship }

Definition 6: How. It is the set of words that refer to the manner in which an action is performed.

In w, How={ privately }

We denote

3.3 5W1H vs. PropBank

Semantic roles in PropBank are defined with respect to an individual verb sense. In PropBank, the verbs have numbered arguments labeled as: ARG0, ARG1, ARG2, and so on. In general, numbered arguments correspond to the following semantic roles shown in Table 1. Apart from the numbered arguments, PropBank also involves verb modifiers often known as the functional tags such as manner (MNR), locative (LOC), temporal (TMP) and others.

Table 1 List of PropBank roles

| Argument | Role |

|---|---|

| ARG0 | agent |

| ARG1 | patient |

| ARG2 | instrument,benefactive, attribute |

| ARG3 | starting point, benefactive, attribute |

| ARG4 | ending point |

| ARGM | modifier |

Unlike the PropBank role set, the 5W1H scheme does not specify semantic roles at fine grain levels. However, it defines a simplistic approach for extracting the key information from a given sentence (tweets in our case) for other tasks such as event detection, summerization etc. A comparison of 5W1H and PropBank is illustrated with the following examples.

Example:

(4) Trump's Pyrrhic Victory Provides a BIG Silver Lining for Democrats https://t.co/NzO8NBBkDS

PropBank on example (4):

— predicate: provide

5W1H on example (4):

— predicate: provide

Example:

(5) One +ve I will take from Trump's victory is the acknowledged death of political correctness

PropBank on example (5):

— predicate: take

— predicate: acknowledged

5W1H on example (5):

— predicate: take

— predicate: acknowledged

Annotation:

Annotation based on the PropBank role set requires deep knowledge of SRL and the constituent role arguments. On the other hand, the 5W1H annotation scheme is a simplistic Q&A approach as described in our earlier work [6]. Applying the simple question of "Who did what, when, where, why and how" yields the constituents of the 5W1H. In example (4), for the predicate provide, on applying the 5W1H question of "Who is the provider", yields "Trump's Pyrrhic Victory" as the Who and the question "What is being provided" yields "a BIG Silver Lining for Democrats" as the What. Similarly, in example (5), we obtain the 5W1H constituents for each predicate (take, acknowledge).

However, in both these examples (4 and 5), the arguments ARG1 and ARG2 are merged as "What".

Therefore, the 5W1H model does not distinguish between ARG1 and ARG2. Despite the scenario described in these examples, the constituents of the 5W1H model are mostly similar to some of the PropBank role set. A comparison between the PropBank role set and the 5W1H on our dataset is shown in Table 2. From Table 2, we observe that "Who" is mostly similar to ARG0 (84.48%) with a small fraction (10.34%) being similar to ARG1. This is explained with the following example.

Example:

(6) Murphy Brown Comes Forward With Her Own #MeToo Story https://t.co/kKw81IWz5t via @thedailybeast

Table 2 Percentage distribution of similarity between 5W1H and PropBank in our dataset

| PropBank Role | Who | What | When | Where | Why | How |

|---|---|---|---|---|---|---|

| ARG0 | 84.48 | 0.00 | 3.33 | 0.00 | 0.00 | 0.00 |

| ARG1 | 10.34 | 53.85 | 0.00 | 0.00 | 0.00 | 0.00 |

| ARG2 | 0.00 | 9.89 | 0.00 | 0.00 | 0.00 | 0.00 |

| ARG3 | 0.00 | 0.00 | 0.00 | 22.86 | 0.00 | 0.00 |

| ARG4 | 0.00 | 3.29 | 0.00 | 34.29 | 0.00 | 0.00 |

| ARGM-TMP | 0.00 | 1.09 | 60.00 | 0.00 | 0.00 | 0.00 |

| ARGM-LOC | 0.00 | 1.09 | 10.00 | 25.71 | 0.00 | 0.00 |

| ARGM-CAU | 0.00 | 0.00 | 0.00 | 0.00 | 100.00 | 0.00 |

| ARGM-ADV | 0.00 | 4.39 | 20.00 | 0.00 | 0.00 | 0.06 |

| ARGM-MNR | 0.00 | 3.85 | 0.00 | 8.57 | 0.00 | 90.91 |

| ARGM-MOD | 0.00 | 4.39 | 0.00 | 0.00 | 0.00 | 0.00 |

| ARGM-DIR | 0.00 | 0.01 | 0.00 | 5.71 | 0.00 | 3.03 |

| ARGM-DIS | 0.00 | 1.65 | 0.00 | 0.00 | 0.00 | 0.00 |

| ARGM-NEG | 0.00 | 1.09 | 0.00 | 0.00 | 0.00 | 0.00 |

In example (6), for the predicate comes, "Murphy Brown is ARG1 as per PropBank. However, if 5W1H model is applied then "Murphy Brown is identified as "Who". An important observation is the coverage of "What" with ARG2, ARG4, ARGM-ADV and ARGM-MOD. The 5W1H model does not specify fine grain semantic roles as compared to the ProbBank role set. This is already illustrated in examples (4) and (5). From Table 2, we also observe that "When", "Where", "Why" and "How" are closely similar to ARGM-TMP, ARGM-LOC, ARGM-CAU and ARGM-MNR respectively.

4 Deep Neural Network for 5W1H Extraction

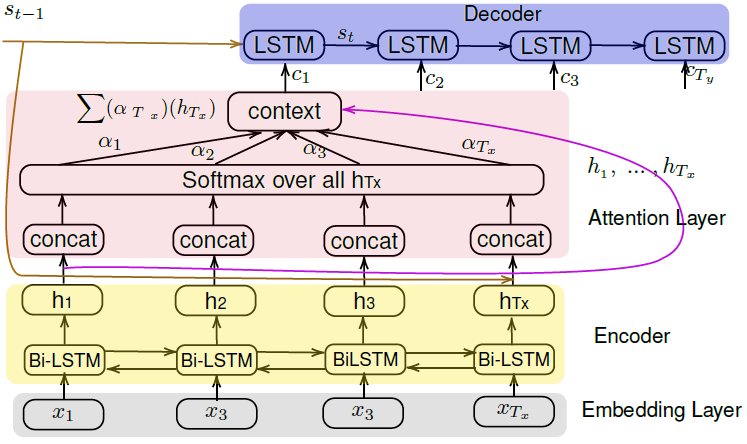

4.1 Attention Background

In this section, we discuss the fundamentals of attention mechanism as proposed by Bahdanau et al. [1]. It is understandable from the example in section 3.1 that SRL is a sequence labeling task. Transforming an input sequence (source) to a a new output sequence (target) is the objective of the sequence to sequence (seq2seq) model [34]. In seq2seq, both sequences can be of arbitrary lengths. The seq2seq model is basically an encoder-decoder architecture. An encoder encodes an input sequence and compresses the information into a context vector of a fixed length. A decoder is initialized with the context vector to produce the transformed output.

Under such an architecture, the decoder initial state is obtained only from the last state of the encoder network. However, there is one major disadvantage of this scheme. The fixed-length context vector is incapable of remembering long sentences. In SRL, an argument may span a long sequence in a sentence. In such a scenario, the seq2seq model is not suitable because it often forgets earlier parts once it completes processing the whole input. The attention mechanism was introduced by Bahdanau et al. [1] to resolve this problem. Attention mechanism creates connections between the context vector and the entire source input rather than building a single context vector out of the encoder's last hidden state. The weights of these shortcut connections are customizable for each output element.

A sequence of dense word vectors are used to represent the input sentence. These word vectors are fed to a bi-directional long short-term memory (Bi-LSTM) [14] encoder to produce a series of hidden states that represent the input. Let us consider a source sequence x of length Tx and then use it to output a target sequence y of length Ty:

An encoder state is represented by the concatenation of the hidden states:

The attention mechanism plugs a context vector c t between the encoder and the decoder. For each single word that the decoder wants to generate, the context vector is used to compute the probability distribution of source language words.

The context vector c

t depends on a sequence

where both va and Wa are weight matrices to be learned in the alignment model.

4.2 Architecture

Our deep attention architecture is in similar lines with Bahdanau et. al. [1] where the input is a sequence of words

4.2.1 Encoder

Our encoder is a B-directional RNNs (Recurrent Neural Network) with LSTM cells. The encoder outputs hidden states

4.2.2 Attention

The attention mechanism is a feed-forward neural network of two layers. In the first layer, at every time step t, we use the concatenation of the forward and backward source hidden states h in the bi-directional encoder and target hidden states s t-1 in the previous time step of the non-stacking unidirectional decoder. The score score(st , hi ) in eq.(5) is the result of the concatenation which is then passed to a softmax to generate the αt,i. Since the αt,i is generated for only one hidden state hi , we need to apply the softmax over all the h of the input sequence Tx . This is obtained by copying the s t-1 to all the h in Tx:

Therefore, αt,i is calculated as:

The generated αt,i are then used with the hidden states h in Tx to compute the context vector ct which is a weighted sum of the products of αt,i and hi in Tx as shown in eq.(3).

4.2.3 Decoder

The decoder is a single layer unidirectional LSTM network responsible for generating the output token yt where t = 1, 2,...,Ty . A learned distribution over the vocabulary at each time step is used to generate yt from t given its state st , the previous output token yt-1, the context vector ct and M. We can parameterize the probability of decoding each word yt as:

where g is the transformation function that outputs a vocabulary-sized vector. Here, st is the RNN hidden unit, abstractly computed as:

where f computes the current hidden state given the previous hidden state in a LSTM unit.

Minimization of the negative log-likelihood of the target token yt for each time step is the primary objective of our model at the time of training. The loss for the whole sequence (X) is calculated as:

5 Experiments

5.1 Dataset

We used two different datasets, one based on the US Elections held in November, 2016 and the other based on the #MeToo4 campaign. The dataset on the US Elections are taken from [30] containing 3000 English tweets. For the #MeToo dataset, we crawled 248,160 tweets using hash tags such as #MeToo, #MeTooCampaign, #MeTooControversy, #MeTooIndia, as query with the twitter4j5 API. We applied regular expressions to remove the Re-tweets (tweets with RT prefix) and Non-English tweets. Most of the Non-English tweets are in Roman transliterated form and therefore, they are manually removed.

After manually removing the re-tweets and Non-English tweets, the dataset is finally reduced to 8175 tweets . The reason for such a drastic reduction is due to the presence of tweets containing Roman transliterated Non-English words. All the tweets are then tokenized with CMU tokenizer [12]. We prepared the datasets in such a manner that for every tweet that has multiple predicates, the tweet is repeated in the corpus for each predicate (Table 3).

5.2 Model Setup

We setup our model with Keras [8] and initialize the model with pre-trained 300-dimensional GloVe [24] embeddings. Our vocabulary size is set to

5.3 Learning

Our models are created on a fifth generation Intel core i7 based machine with four cores and 16 gigabytes of Random Access Memory without any GPU (Graphics Processor Unit) support. Due to the lack of a GPU support, a single epoch takes a considerable amount of time. With the available system configuration, a single epoch took around 30 to 40 minutes. We therefore, experimented with only different epochs of 5, 10 and 20 and got the best results with 20 epochs with a batch size, BATCH_SIZE = 1000. We use Adam optimizer [15] and a learning rate lr = 0.1. The dataset was split into 90% train and 10% test sets.

6 Results

The objective of our work is to extract the 5W1H from tweets. But for comparison with previous [30] SRL systems on tweets, we evaluated our system (Deep-SRL) for PropBank role identification task. In Table 4 , we give the comparison of our system (Deep-SRL) with the SRL system of Rudrapal et. al. [30](DRP-SRL) on the PropBank role identification task. For evaluation purpose, we used the standard measures of Precision, Recall and F-1 .

Table 4 Comparison of DRP and our system on the PropBank role identification task for the US Election corpus.

| System | #Tweets | F-1 |

|---|---|---|

| DRP-SRL | 3000 | 59.76 |

| Deep-SRL | 3000 | 88.48 |

The comparison is done on the US Elections 2016 dataset, on which our system outperformed DRP-SRL system by overall F-1 of 28.72. This is a significant improvement over previous results. In Table 5 , we give the performance of Deep-SRL for 5W1H extraction on both the two datasets (US Elections 2016 and metoo movement). Deep-SRL achieves an overall F-1 score of 88.21 in the whole corpus.

Table 5 Our System (Deep-SRL) for 5W1H extraction on both the US Election and #MeToo corpus.

| Corpus | Precision | Recall | F-1 |

|---|---|---|---|

| US Elections | 90.87 | 86.21 | 88.48 |

| #MeToo | 90.63 | 85.40 | 87.94 |

| Average | 90.75 | 85.8 | 88.21 |

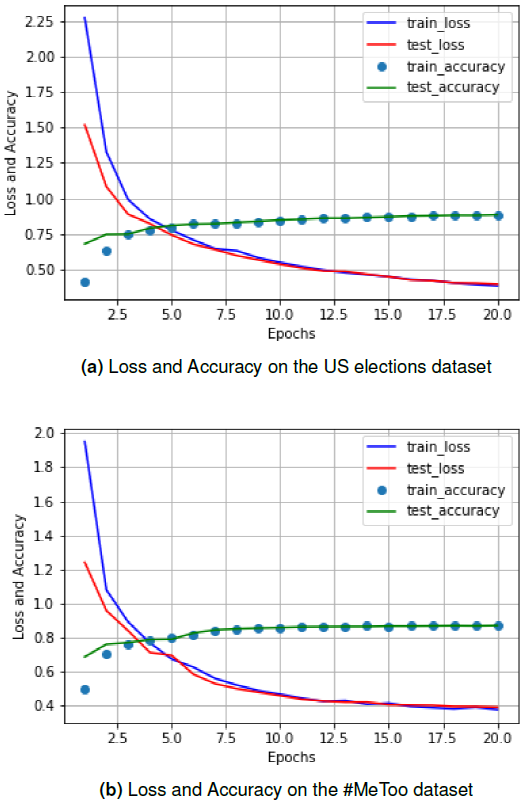

Fig 2(a) and (b) show the loss and accuracy of our model on the train and test sets on both the datasets respectively. The reported loss at epoch = 20 for the US Elections dataset is 0.5 and that for the #MeToo dataset is 0.45. This indicates a drop in the loss by 0.05.

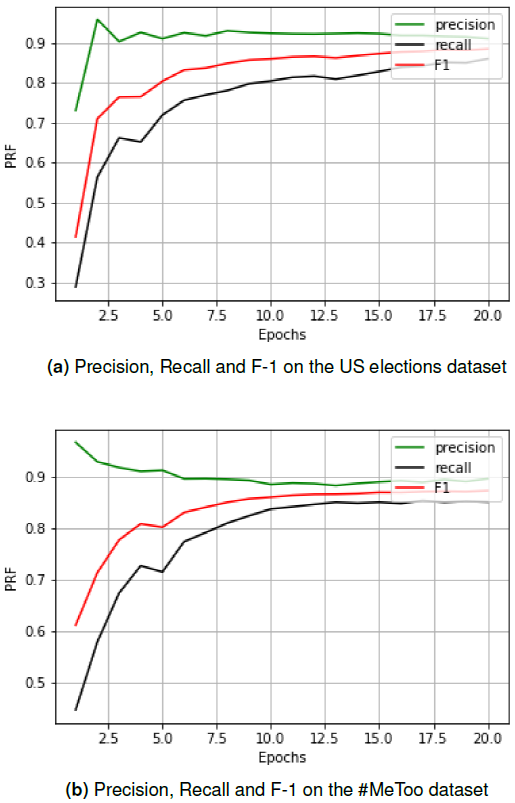

Our models reported an accuracy of 88.32% for the US Elections dataset and 88.15% for the #MeToo dataset. The three metrics of precision, recall and F-1 score is shown in fig 3(a) and (b).

7 Analysis

Since we adopted the BIO6 tagging format, it is necessary to identify the argument span. Here, argument span means the maximum number of tokens falling under a WHO or WHAT or WHEN or WHERE or WHY or HOW. To verify argument spans, we measure the percentage of overlap between the predicted argument spans and the gold spans. We found that 85.4% of the predicted spans match the gold spans completely, 5.23% of the predicted spans are partially overlapping with gold spans, and 9.37% of the predicted spans do not overlap at all with gold. There are partial overlaps because the model could not tag some of the group of tokens with a proper BIO sequence. For example, a token which is supposed to be tagged with a B-WHO, was tagged as I-WHO.

8 Conclusion

SRL based on the PropBank role set is a finegrained approach but requires deep knowledge about the role arguments for annotation. In contrast, our simplistic 5W1H approach is easier to annotate a corpus with a little compromise at the fine-grain level role set identification task. In this work, we proposed a deep attention based neural network for the task of semantic role labeling by extracting the 5W1H from tweets. We trained our models and evaluated them on the 2016 US Elections dataset that was used by a previous SRL system for tweets. We compared our models with previous SRL systems on tweets and observed a significant improvement over the previous implementations. We also prepared a new dataset based on the #MeToo campaign and evaluated our models on them. Our experimental results indicate that our models substantially improve SRL performances on tweets. However, there are certain limitations in the 5W1H adoption as the fine-grain semantic roles are ignored in such an approach, thus, limiting the in-depth SRL role identification. However, the 5W1H concept could be very convenient to perform other information extraction tasks such as event detection, even summarization on tweets.

nueva página del texto (beta)

nueva página del texto (beta)