INTRODUCTION

The aim of this research is to explore the correlation between affordances, conceived as the perceived opportunities for motor action in virtual environments, and the experience of spatial presence, understood as the experience of “being inside” Virtual Reality (VR). How do we perceive space in VR? Does our ability to move and act in VR intensify our experience of spatial presence? How can the intensity of spatial presence be measured? The experience of space in virtual environments is studied through theoretical approaches that range from philosophical questions about the nature of consciousness and perception to cognitive science inquiries about the neural processes that underlie spatial experience. The general premise is that our ability to feel inside a virtual environment depends on our ability to perceive affordances. The general hypothesis of this research is that there is a positive correlation between the variety of affordances and spatial presence. According to the SPES (Spatial Presence Experience Scale) proposed by Hartmann et al. (2016), the key factors that affect the intensity of spatial presence are self-location (SL) and possible actions (PA). Other variables used in the scale are domain-specific interest, spatial imagery skills, attention allocation, spatial mental model, cognitive involvement, and trait absorption (Hartmann et al., 2016).

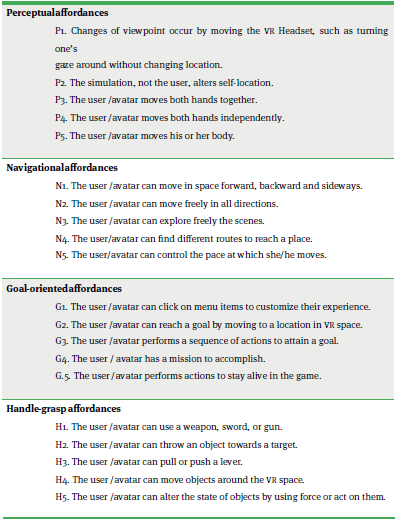

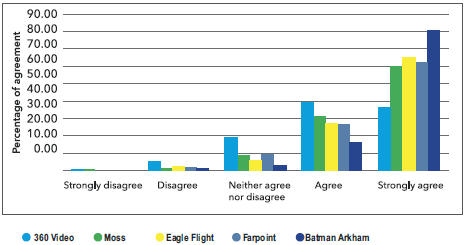

To measure the intensity of spatial presence in users’ experience of virtual reality, we used the SPES of Hartmann et al. (2016) as a questionnaire in an experiment with 30 participants. We measured the number of affordance types in each simulation while proposing a model for the classification of affordances in VR videogames. The model includes four types (perceptual, navigational, goal-oriented, and handle-grasp affordances) and 20 subtypes, as Figure 1 shows. Each participant tested five different VR simulations. After each simulation, participants answered a Likert scale questionnaire based on the SPES. The five simulations were presented to the participants in an order determined by the variety of affordances and the amount of interaction and movement required from the user. This was calculated through the number of affordance types and subtypes available in each simulation. Simulations ranged from low interaction, with the lowest number of affordance types (simulation 1, 360˚ Video), to high interaction and most possible movements, with the highest number of affordance types (simulation 5, Batman Arkham, a VR videogame played standing with one controller in each hand), as expressed in Figure 2. Each simulation involved either the embodiment of a third-person avatar, a first-person avatar or no avatar (as in the case of 360˚ Video). This article presents the following sections: a literature review followed by methods, results, findings and references.

LITERATURE REVIEW

The question of how we perceive space through immersive media points to situated and embodied cognition as the centre of human perceptual processes. Perception as an embodied process has been theorized since the work of Maurice Merleau-Ponty ([1945] 1962), and has inspired recent research in cognitive science (Csordas, 1994; Dyson, 2009; Gallese, 2005; Durt and Fuchs, 2017; Penny, 2017). The connection between human beings and technology has also been studied from post-phenomenological approaches (O'Neil Irwin, 2016). Perception is rooted not only in the mind but also in the human body and its senses as well as in its connection to the world. Cognition is both embodied and situated (Tversky, 2009, p. 202). This means that we perceive space in relation to the situation of our body. Spatial perception is central to human survival, as it allows us to act and move in the world. The interdependent relation between action and situated cognition can be understood in the way we live through the spaces in which our body acts. Action and perception are co-constituted; they are inter-dependently produced (Robbins and Aydede, 2009, p. 4). Simon Penny writes: “Put simply, in sensory experience there is no objective world ‘out there’. By this logic, mind and world are simultaneously cocreated” (Penny, 2017, p. 17). Instead of assuming that visual experience consists of an internal representation of the external world, J. Kevin O'Regan and Alva Noë describe it as an exploratory activity mediated by sensory and motor contingencies (2001, p. 940). Hubert L. Dreyfus and Stuart E. Dreyfus, based on the work of James J. Gibson ([1986] 2015), conceive the physical environment not as a source of information external to the mind, but as a space interrelated with the mind, where affordances arise as opportunities for motor action, such as possible trajectories or tools (Dreyfus and Dreyfus, 1999; Andler, 2006).

Affordances

Since its emergence in the work of Gibson (1966, p. 285), the concept of affordances has been widely debated. Affordances are based on the capacity of organisms (human and animal) to perceive possibilities for motor action, according to the capabilities of their own bodies (Sanders, 1999; Penny, 2017). Pawel Grabarczyk and Marek Pokropski write: “Affordances are neither objective features of objects, such as shape, nor a subjective representation, but they are relational i.e. they emerge dynamically in a subject’s perceptual and motoric activity in the environment” (2016, p. 34).

The sensory field is impregnated with possibilities for action. Objects in the world are perceived as invitations to motor action, for example in the use of tools that appear in our near perceptual environment (Costantini et al., 2010). The concept of affordances is translated from the German Aufforderungscharakter (Sanders, 1999; Heras-Escribano, 2019). Aufforderung refers to the stimulating nature of an object, that is, the exhortation or invitation it proposes. The objects we perceive tell us what to do with them (Sanders, 1999, p. 129). In the same way that an animal detects escape routes, food sources or simple movements by following the options that its perceptual field reports (such as branches of trees, hiding places, food or available prey), humans perceive the possibilities for action in our perceptual field, whether it is mediated by technology or not. Humans, like every other organism, learn to react to affordances in the environment through bodily experience (Penny, 2017, p. 27). Each organism perceives different types of affordances, according to its species and its own capacity for movement; it can only perceive the world in which it can act (Gibson, [1986] 2015; Penny, 2017).

We suggest the following definition of affordances: they are relational properties perceived as opportunities for action in the environment. Affordances are relational because they appear in the perceptual field always in relation to the individual characteristics of the organism, such as bodily dimensions, skills, knowledge, and goals. In this study, affordances in virtual reality are categorized in four types: perceptual, navigational, goal-oriented or handle-grasp. The spatial physical environment that human beings perceive as immediate is full of affordances manifested in objects and in the characteristics of spatial surroundings, such as inclinations, roads, precipices, rocks and so on. In VR media, users perceive scenes, paths, menus, buttons, levers and other objects that function as interactive routes through which they navigate, perform actions and attain goals.

An affordance emerges at the intersection of the user’s perception, the environment, and the physical properties of the object (Burlamaqui and Dong, 2015, p. 3). Just as in the physical world, affordances in VR are not always evident or visible: some can be perceived while others cannot (Norman, 2013, pp. 18-20). Some can only be perceived but not acted upon, for example a tree in the background of a VR scene, which can be seen but does not afford climbing. Norman terms these “misleading signifiers”, a kind of perceptual affordance (2013, p. 18). Users’ perception of affordances depends on their own individual skills and abilities within the virtual environment. Unlike Norman, Dreyfus and Dreyfus (1999) describe affordances as opportunities for action that we can perceive - either because we know them or because we are in the process of knowing them. Dreyfus and Dreyfus’s work is theoretically closer to Merleau-Ponty’s concept of the intentional arc, which tells us that we acquire embodied skills by dealing with specific tasks and objects, and these in turn modify the way those objects and tasks appear to us (Dreyfus and Dreyfus, 1999, p. 103). The characteristics of the human world, what allows us to walk, reach or act, are correlated on the one hand to our bodily capacity and on the other to the skills we have acquired (Dreyfus and Dreyfus, 1999, p. 104). Like Dreyfus and Dreyfus, David Kirsh agrees that affordances are objective (2009). Kirsh writes: “If at first an agent does not see a possible action, she can interact with the environment and increase her chances of discovering it” (2009, p. 293).

This research considers that the probabilities of discovering affordances in VR are higher if there are more types of affordances embedded in the simulation. Affordances in VR only become opportunities for action when the user perceives them. However, invisible affordances are latent in VR environments and can be discovered as the user learns to perceive them. As it was observed during the experiment, VR users go through a learning curve when first experiencing VR, and become acquainted with ways of moving, handling tools and interacting with objects. Each user responds differently to affordances in the same scenario. The sequence of affordances a user perceives is unique because each user has an individual set of skills and an individual collection of knowledge. These specific profiles are built of cognitive and sensorimotor skills needed to make sense of and move in VR. Therefore, it is difficult to quantify the number of affordances experienced by users in complex VR environments. As Brett R. Fajen and Flip Phillips write: “Possibilities for action furnished by the environment are not fixed. Affordances can materialize, disappear, and vary because of changes in the material properties or the positions of objects in the environment” (2012, p. 70).

Affordances in VR are usually measured with minimal sensory inputs over a short time frame. For instance, Regia-Corte et al. experimented with a simulation that consisted only of a room with a slanted wooden surface (2013, p. 8). Measuring the number of affordances in complex VR environments is not often done in empirical research. Spatial presence and affordances are difficult to measure because they are subjective states of experience (Grabarczyk and Pokropski, 2016, p. 30). Each participant perceives different courses of action inside virtual environments, which trigger different numbers of possible affordances in each chosen route. For instance, taking one route in one simulation might allow the user to perceive more moving objects (perceptual affordances) but fewer possible actions with the hand controllers (handle-grasp affordances). For these reasons, we chose to quantify the variety of affordances present in each simulation by ranking affordance types and subtypes.

As Figure 1 shows, this research proposes a classification and ranking system of VR affordances, based on four types: perceptual, navigational, goal-oriented and handle-grasp affordances. This model was informed by previous typologies used for the categorisation of affordances in videogames (van Osch and Mendelson, 2011; Bentley and Osborn, 2019; Steffen et al., 2019; Cardona-Rivera and Young, 2013). The categories for VR affordances can also be related to Tversky’s three categories of spaces in spatial cognition: “the space of the body, the space around the body and the space of navigation” (2009, p. 208). The space of the body is embodied space, experienced from the inside of the body (Tversky, 2009, p. 203). Perception is anchored in the space of the body; it is the space in which visual, auditory, kinaesthetic and tactile representations are produced while engaging with VR environments. The second type of space mentioned by Tversky is the space around the body, also called peripersonal space (2009). It is the space close to our limbs, or the virtual representation of them. In peripersonal space we can grasp objects and interact with them. It is a lived space, measured in relation to our body’s movement and dimensions. The third space is navigational, one that is too large to be perceived all at once, so it must be constructed from pieces, experiences, memories, maps or information we know (Tversky, 2009, p. 205). Perceptual affordances are perceived from primordial embodied space. Navigational affordances appear in the space of navigation and refer to the possibility of voluntary spatial movement, such as walking along a path, moving sideways, exploring a scene, moving between locations, finding routes, flying, jumping or climbing, and controlling the pace or speed of movement.
Handle-grasp affordances appear in peripersonal space, the space around the body, and refer to hand and arm movements performed with the controller to grasp and manipulate objects in the simulation, such as holding and firing a weapon, throwing an object towards a target, pulling or pushing a lever, picking up objects and moving them around, or altering the state of objects by acting upon them, such as opening a door, putting together a puzzle, playing a piano or using a key. Goal-oriented affordances involve all spaces and appear as a sequence of events to reach a goal. They refer to actions needed to reach a goal in the simulation, such as clicking on menu items, having a mission during the game, and performing and avoiding actions to remain alive.

In this model, perceptual affordances are the only type that involves perceived rather than actual movement; the other types involve opportunities for motor action that are performed by the user. Perceptual affordances refer to features intrinsic to the three-dimensionality of immersive environments in VR. They are visual elements that are perceived stereoscopically but are not interactive, such as scenic features - trees in the background, shelves or objects that are unreachable to the user but are part of the scene. These perceptual elements require no voluntary action from the user and are presented as opportunities to perceive virtual space as immersive. The other three types of affordances (navigational, goal-oriented and handle-grasp) involve opportunities for motor action, which are voluntary actions performed by the user with the aid of hand controllers. As can be seen in Figure 1, this model proposes 20 subtypes of affordances, each of which is scored 1 if the subtype is present in the simulation and 0 if it is not. The total score reflects the variety of affordance subtypes in a VR simulation, not the frequency with which they are presented to the user. The exact number of affordances perceived by each participant was not measured, due both to the multiplicity of sensory stimuli in each simulation and to the complexity of recording subjective perception in an environment with multiple courses of action.
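The binary scoring described above can be illustrated with a minimal sketch. Only the four type names come from the text; the subtype names below are hypothetical placeholders, since the 20 subtypes of Figure 1 are not reproduced here, and the example profile is invented for illustration rather than taken from the study's data.

```python
from typing import Dict

# Presence flags per affordance type. Subtype names are HYPOTHETICAL
# placeholders; the actual 20 subtypes are listed in the paper's Figure 1.
Presence = Dict[str, Dict[str, bool]]


def type_scores(presence: Presence) -> Dict[str, int]:
    """Count present subtypes per type (1 if present, 0 if absent)."""
    return {
        affordance_type: sum(int(flag) for flag in subtypes.values())
        for affordance_type, subtypes in presence.items()
    }


def variety_score(presence: Presence) -> int:
    """Total variety score: the sum of binary presence flags over all subtypes."""
    return sum(type_scores(presence).values())


# Invented example: a simulation offering only two perceptual subtypes.
example: Presence = {
    "perceptual": {"scenic_objects": True, "stereoscopic_depth": True},
    "navigational": {"walking_path": False, "flying": False},
    "goal-oriented": {"menu_selection": False},
    "handle-grasp": {"grasp_object": False},
}

print(type_scores(example))
print(variety_score(example))  # 2
```

Because each subtype contributes at most 1, the score captures how many kinds of affordance a simulation offers, not how often the user encounters them.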

Spatial Presence

Spatial presence is described as the feeling of being in VR as though it were a real place (Lombard and Jones, 2015; Papagiannis, 2017). For a detailed literature review on the concept of presence, see Matthew Lombard and Matthew T. Jones (2015). Prominent theorists on the concept of presence include Matthew Lombard and Theresa Ditton (1997), David Jacobson (2002), Frank Biocca (2003) and Riva et al. (2004). The ability to experience spatial presence in VR is related to the ability to do or act within it (Hartmann et al., 2016). The mechanism of perception in which the motor cortex is stimulated by the perception of represented spaces is at the core of spatial presence. Our motor cortex becomes active when encountering affordances, responding to the virtual environment in a similar way in which it would respond to physical space (Grodal, 2009, p. 150; Laarni et al., 2015, p. 144; Gallese and Guerra, 2012).

Biocca (2003) writes that the concept of presence works within a two-pole model: the physical reality and the virtual reality. This leads to a problem, which he identifies as “the book problem”, the “physical reality problem” or the “dream state problem” (2003, p. 2). It refers to a disconnection between spatial attention and mental imagery (Biocca, 2003). In the two-pole model, users can only be present in one of the two environments, the physical or the virtual. This assumes that sensorimotor immersion is the primary cause of presence, something that does not correspond to the experience of presence in non-immersive media, such as books or mobile apps (Biocca, 2003). Some people experience presence while reading a book, which is considered a medium with low immersion levels; therefore, presence cannot be exclusive to immersive media (Baumgartner et al., 2006). In other words, higher immersion in media might not necessarily mean higher levels of presence (Schubert and Crusius, 2002, p. 1; Biocca, 2003, p. 2; Lombard and Jones, 2015, p. 21). Biocca proposes a three-pole model, developed from an evolutionary viewpoint; the third pole refers to the mental imagery space, because VR users are mentally constructing the simulation (Biocca, 2003, pp. 5-6).

The study of presence accompanies a debate on the mediation of technology in perception. Some theorists consider that regardless of the medium used, all human experience is based on the cognitive representation of what the senses perceive; therefore, all perception is always already mediated (Lombard and Jones, 2015, p. 22). For Lombard and Jones, all human experience of the “outside world” is mediated by biology (2015, p. 22). For Brian Lonsway, there are other forms of mediation, such as genetic, cultural, physical, and technological (2002, p. 65). It is evident that the dichotomy of interior/exterior underlies debates about perception and presence. The concept of mediation refers to technology as an intermediary in the process of perception (Lombard and Jones, 2015, p. 22). The premise is that the less perceptible the technology is in the user's experience, the greater the intensity of presence in VR. Other factors that contribute to increasing the experience of presence are realism, accurate visual alignment, and a fast response of the VR to the user’s movements (Papagiannis, 2017, p. 70).

As in Biocca’s three-pole model, the attention of the user is decisive in the spatial presence model developed by Draper et al. (1998). According to this model, the more attention is given to the stimulus presented, the greater the identification with the environment and the more intense the experience of telepresence will be (Draper et al., 1998, p. 366). The concept of telepresence developed by Jonathan Steuer (1992) emphasizes two properties of virtual environments: liveliness and interactivity. Liveliness is understood as the richness in the representation of the mediated environment: the more senses are stimulated by a media system, the greater the degree of liveliness. Spatial presence is achieved when the sensory channels are saturated in VR and the physical space in the perceptual field is suppressed (Steuer, 1992; Hartmann et al., 2016, p. 121).

A key idea in theories about spatial presence is the relationship between actions and the construction of meanings (Hartmann et al., 2016, p. 128). Mental capacities are linked to the subjective perception of space, that is, the spatial location perceived as "my perceptual field", which enables the possibilities of action (Hartmann et al., 2016, p. 118). Self-location (SL) is a variable in the SPES and refers to the positioning of one’s sensorium within VR. An avatar often enables immersion, but even when there is no avatar, users can sense, move, and experience the virtual world. This is the case of 360˚ Video, in which users enter the virtual environment through a subjective camera’s viewpoint. Grabarczyk and Pokropski call 360˚ Video a case of minimal embodiment, in which the user embodies the camera viewpoint, experiencing the virtual world through the camera’s spatial orientation (2016, p. 37). The spatial situation model proposes an egocentric frame of reference, where the user is placed at the centre of objects and their environment (Lombard et al., 2015, pp. 5-6). In immersive environments, the sensory information displayed coincides with the user's own proprioception, that is, with the user's spatial sense (Sanchez-Vives and Slater, 2005; Hartmann et al., 2016). Users of immersive environments identify their own presence with their virtual body, that is, with the representation of the user in VR, and identify their own point of view (their subjective frame of visual reference) with the point of view offered by the virtual scenario.

There is a close relation between the body morphology of the avatar and the affordances that emerge in VR. The user perceives affordances in relation to the characteristics of the avatar. As in the case of human physical bodies, the capacities of the virtual body, specifically its shape and size, will determine the potential to perform actions (Merleau-Ponty, [1945] 1962; Dreyfus and Dreyfus, 1999). Rybarczyk et al. (2014) studied the effect of avatars in triggering a sense of embodiment and suggest that affordances are scaled to fit an individual’s body (2014, p. 2). According to Thomas Schubert, Frank Friedmann and Holger Regenbrecht (2001), an embodied mental state immediately triggers a sense of spatial presence. Meyer et al. (2019) describe sensorimotor affordances (SMA) as properties found in the VR environment that provide feedback between the contingencies of the user’s body and the environment (2019, p. 2). For Costantini et al. (2010), affordances appear when objects and tools are in the reachable space of the body, termed the peripersonal space. In VR, what appears beyond our peripersonal space works as a perceptual affordance: it might suggest a route towards a different scene or might help us understand spatial relations in the simulation.

The perception of spatial relations in VR - such as what is far, what is near or what can be done in a scene - is based on the conditions in which the user enters VR, whether through a first-person view or through a non-embodied avatar. In other words, the perception of space in VR depends on the relation between the sensorimotor contingencies of the embodied self (avatar or not) and the VR simulation. The scale of the avatar, as well as the congruence between the avatar’s movements and the user’s, induces a feeling of presence and body ownership in the user (Rybarczyk et al., 2014, p. 3). The idea of congruence between the avatar’s scale and movement and the user’s was a key factor in the categorization of affordances for this study. VR games that are played in first-person perspective seem to induce a higher degree of presence and sense of body ownership, especially when the avatar closely mirrors the movement of the user’s body. In the case of VR games that are played in third person (non-embodied avatar), there is a more obvious disparity between the user’s self-location and the avatar. This type of interaction, enabled by a controller or a joystick, requires a learning process in which the user relates his or her own movement to the avatar’s movement (Rybarczyk et al., 2014, p. 7).

METHODS

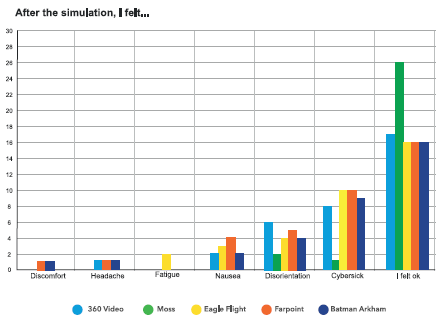

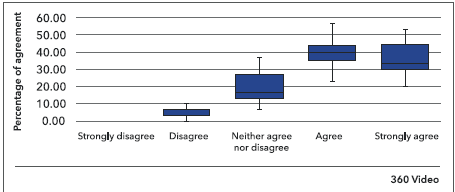

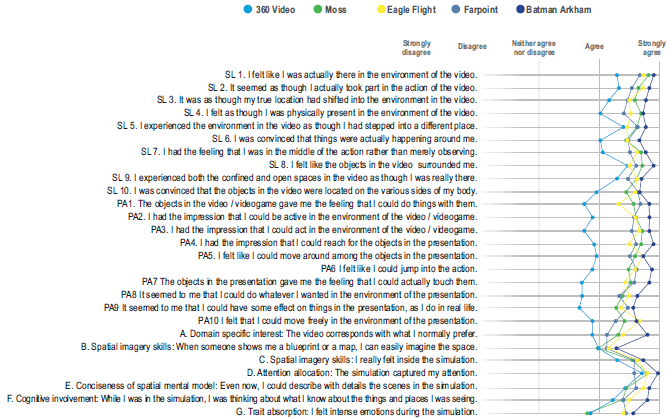

After the literature review, this research involved an experiment with a group of 30 participants aged between 19 and 23 years. Participants were all Spanish-speaking students at the Autonomous University of Tamaulipas in Ciudad Victoria, Tamaulipas, Mexico, and were recruited voluntarily in a classroom. Each participant tested five VR simulations; each simulation was followed by one questionnaire based on the SPES (Spatial Presence Experience Scale) proposed by Hartmann et al. (2016). In total, 150 SPES questionnaires were completed. Each questionnaire consists of 27 Likert-scale questions measuring the level of agreement, as shown in Figure 3. “The SPES positively correlates to the users’ self-location (questions SL 1 to SL 10), possible actions (questions PA 1 to PA 10), domain-specific interest (question A), spatial imagery skills (question B, C), attention allocation (question D), conciseness of spatial mental model (question E), cognitive involvement (question F) and trait absorption (question G)” (Hartmann et al., 2016, p. 6). The questionnaire was translated into Spanish and answered on a website.
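The questionnaire structure described above can be checked with a short sketch. The item labels follow the quoted description (SL1 to SL10, PA1 to PA10, and single-letter items A to G); the variable names are illustrative, and the total number of item responses is a derived figure that assumes every questionnaire was answered in full, which the text does not state.

```python
# Item labels as described in the text: ten self-location items,
# ten possible-action items, and seven single-letter items A-G.
sl_items = [f"SL{i}" for i in range(1, 11)]
pa_items = [f"PA{i}" for i in range(1, 11)]
other_items = list("ABCDEFG")
items = sl_items + pa_items + other_items

PARTICIPANTS = 30   # reported sample size
SIMULATIONS = 5     # one questionnaire after each simulation

questionnaires = PARTICIPANTS * SIMULATIONS
# ASSUMPTION: no missing answers, so every item is answered in every questionnaire.
responses = questionnaires * len(items)

print(len(items), questionnaires, responses)  # 27 150 4050
```

The counts agree with the 27 questions and 150 questionnaires reported in the text.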

Source: Author's elaboration.

Figure 3. A graphic representation of the comparison of means for each question.

Each individual session lasted an average of one hour and 20 minutes. Experiments were carried out in a room equipped with a 55-inch television, a PlayStation 4 console, a head-mounted display for virtual reality, speakers, a comfortable chair for the participant and a sitting area for the researcher. All experiments were carried out under controlled conditions at a temperature of 22 °C (71.6 °F). Each participant took part in the experiment individually, after giving his or her informed consent in written form. The questionnaire also inquired about frequency of videogame usage, previous experience with VR and physical sensations during the simulation.

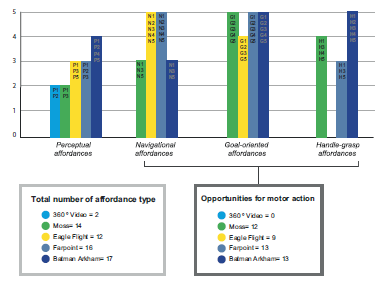

Each participant experienced the first VR simulation, a 360˚ Video of a waterslide, followed by a questionnaire. This procedure was repeated four times with different VR videogames. All five simulations were tested with an HMD (head-mounted display) connected to a PlayStation 4 console. The VR simulations were one 360˚ Video of a waterslide and four VR videogames. Each simulation gave users different opportunities for action and movement, inscribing different affordances in each simulation experience. Order effects were considered in the design of the experiment. The order of the simulations was not randomised; they were presented in the same order, from the least interactive (fewest affordance types) to the most interactive (most affordance types). Although the logic behind randomizing stimuli is that randomization helps to achieve balance, this is not always the case (Lilly, 2009, p. 246). We had to consider that 24 out of 30 participants had never experienced VR before and that 66% of participants played videogames less than twice a month, as established at recruitment. Clinical trials in therapeutic VR do not always use a randomised order, because the researchers must consider the acceptability and tolerability of the VR technology for participants (Birckhead et al., 2019). Acceptability not only involves the willingness to use the technology but also the participants’ feelings of “scepticism, fear, vulnerability, and concern” (Birckhead et al., 2019). Most participants expressed verbally that they were excited about using VR for the first time. All participants were asked at the beginning of the experiment to say if they felt any discomfort, such as nausea or vertigo, and were reassured by the researcher that the simulation could be stopped at any time. None of the participants requested to stop the experiment.
However, all participants were asked again in the questionnaires to indicate whether they felt one or more of the following sensations: cyber sickness (motion sickness triggered by VR), nausea, discomfort, fatigue, headache, disorientation, or no discomfort at all. Some participants said they had never used a videogame before, and they asked for instructions to handle the controllers and to find routes and tasks inside the videogames. These participants found it harder to identify which opportunities for action were available. They were verbally directed when they asked for instructions. However, all participants were able to use the controllers and became acquainted with them over the course of the experiment. Most first-time users of VR seemed to experience a sense of amazement and excitement. During the experiment, many users exclaimed in surprise when a new place, tool, character, or object appeared in their visual field. Some users were more expressive than others: some displayed body language that indicated excitement, others moved their heads towards objects with curiosity, and others said they were nervous or anxious.

As mentioned in the introduction, the five simulations were presented to participants in an order determined by the variety of affordances and the amount of interaction and movement required from the user. See types and subtypes of affordances in Figure 1. The five VR simulations were presented from the least interactive to the most interactive. This was calculated through the number of affordance types and subtypes in each simulation. The five simulations ranged from the lowest affordance variety to the highest, as Figure 2 shows. Based on this, the experiment had the following order: 1) a 360˚ Video of a waterslide; 2) Moss, an adventure game which is mostly played through a non-embodied avatar (third-person perspective) and resembles the dynamics of traditional videogames; 3) Eagle Flight, a VR flight simulator that involves navigating with the movement of the head with little use of the hand controller; 4) Farpoint, a first-person game that involves more complex movements but was played in a seated position with one hand controller; 5) Batman Arkham, which offers the most opportunities for motor action, requires two motion controllers, one in each hand, and is played standing, which involves a wider range of body movements compared to the other simulations.

The affordances in all simulations were categorised and ranked into types and subtypes, as seen in Figure 2. The data collected in the SPES questionnaires were analysed using a chi-square test and a residual analysis, which identified the variables making a larger contribution to the result. The data collected were also summarized as a graphic report showing the standard deviation of the Likert scale answers and the mean of each question’s responses. Results were analysed using descriptive statistics to visualize the tendency towards intensity of spatial presence (Siegel and Castella, 2009). The level of correlation was calculated with a Cochran’s Q test. The five VR simulations that constituted the main test of the experiment were ranked by number of affordance subtypes, according to the classification presented in Figure 1. Although both the SPES and the classification of affordances by type are based on the users’ experience and relate to similar concepts, the measuring variables are not identical. In the questionnaire, questions related to motor affordances are abbreviated as PA (Possible Actions). However, only PA3, PA5, PA6, PA8 and PA10 are related to navigational affordances. PA1, PA2, PA3, PA4, PA7 and PA9 can be linked to handle-grasp affordances, and PA2 and PA3 relate to goal-oriented affordances. Questions related to perceptual affordances are abbreviated as SL (self-location), and are numbered SL1 to SL10.
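As an illustration of how a chi-square test with residual analysis flags the cells that contribute most to the result, the following is a minimal sketch using only the standard library. The text does not specify which contingency table was analysed or which residual type was used; the sketch assumes adjusted standardized residuals, and the 2x2 table of counts is invented purely for demonstration.

```python
from math import sqrt
from typing import List, Tuple


def chi_square_with_residuals(
    table: List[List[float]],
) -> Tuple[float, int, List[List[float]]]:
    """Pearson chi-square statistic, degrees of freedom, and adjusted
    standardized residuals for a contingency table of observed counts.
    Assumes every row and column total is positive and below the grand total."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)

    chi2 = 0.0
    residuals = []
    for i, row in enumerate(table):
        res_row = []
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
            # Adjusted standardized residual: values beyond roughly +/-2
            # mark cells that depart notably from independence.
            scale = sqrt(
                expected * (1 - row_totals[i] / n) * (1 - col_totals[j] / n)
            )
            res_row.append((observed - expected) / scale)
        residuals.append(res_row)

    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return chi2, dof, residuals


# INVENTED counts, not the study's data.
# Rows: a low-variety and a high-variety simulation; columns: disagree, agree.
hypothetical = [[10, 20], [20, 10]]
chi2, dof, res = chi_square_with_residuals(hypothetical)
print(round(chi2, 3), dof)   # 6.667 1
print(round(res[0][0], 2))   # -2.58
```

In a 2x2 table the squared adjusted residual of any cell equals the chi-square statistic, which offers a quick sanity check on the computation.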

RESULTS

Types of affordances

Figure 2 shows the number of affordance types and subtypes in each VR simulation. The 360˚ Video presented only two perceptual affordances and none of the other types. Eagle Flight ranked higher on navigational affordances than on other types, because it is a flight simulator in which navigation is the main activity for the user. Moss ranked higher in goal-oriented affordances than in handle-grasp affordances because the game is played in third person, through a mouse avatar. Therefore, the user’s perception of movement is non-embodied. In other words, most of the grasping action is perceived from afar, not in first person. The opposite occurs in Batman Arkham, Farpoint and Eagle Flight, where actions are perceived in first-person perspective.

Spatial Presence Experience Scale (SPES)

As can be seen in Figure 3, participants tended to agree more strongly with questions referring to Batman Arkham, which showed a generally smaller standard deviation and a higher rank in the SPES. The five-point Likert scale is expressed as follows: strongly disagree (1), disagree (2), neither agree nor disagree (3), agree (4) and strongly agree (5). The mean values for each question in each simulation can be seen in Figures 6, 10, 13, 15 and 18. Figure 3 shows that the mean of the answers for the simulation with the most affordances (Batman Arkham) is closest to the parameter “Strongly Agree”, which represents a more intense experience of spatial presence. The lines represent the mean of the answers for each simulation and show that the intensity of spatial presence increases with the variety of affordances.
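The per-question mean and standard deviation reported in the figures can be computed along the following lines. This is a minimal sketch: the function name and the example answers are hypothetical, and the use of the sample standard deviation (rather than the population form) is an assumption, since the text does not state which was used.

```python
from statistics import mean, stdev

# Coding of the five-point Likert scale as given in the text.
LIKERT_LABELS = {
    1: "strongly disagree",
    2: "disagree",
    3: "neither agree nor disagree",
    4: "agree",
    5: "strongly agree",
}


def summarize_item(responses):
    """Return (mean, sample standard deviation) for one questionnaire item.

    `responses` is a list of integers coded 1 to 5; at least two
    responses are required for the sample standard deviation.
    """
    invalid = [r for r in responses if r not in LIKERT_LABELS]
    if invalid:
        raise ValueError(f"responses outside the 1-5 scale: {invalid}")
    return mean(responses), stdev(responses)


# Hypothetical answers of five participants to a single item.
item_mean, item_sd = summarize_item([4, 5, 5, 4, 5])
print(f"mean = {item_mean:.2f}, sd = {item_sd:.2f}")
```

A mean closer to 5 with a smaller standard deviation corresponds to stronger and more uniform agreement with the item.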

Frequency of videogame use

Figure 4 shows the frequency of videogame use: 43% of participants answered that they almost never played videogames and 23% played less than once or twice a month. 80% had never experienced virtual reality before the experiment. Through direct observation, it was evident that users who almost never played videogames showed lower dexterity with virtual tools. Only 5 participants stated that they play videogames almost every day; these same participants showed a superior level of dexterity with the hand controllers and with virtual tools inside the simulations.

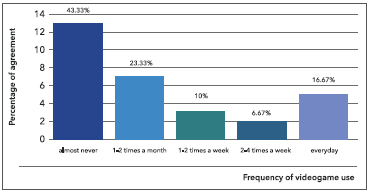

Although all participants were initially asked to say so if they felt any discomfort or wanted to take a break or stop altogether, none of them asked to stop the experiment. This contrasts with their answers in the questionnaire, which asked them, after each simulation, to indicate whether they had felt any of the physical sensations shown in Figure 5. As shown, most participants felt fine during the experiment. However, approximately one third of participants felt cybersick during the 360˚ Video (26.6%), Batman Arkham (30%), Eagle Flight (33.3%) and Farpoint (33.3%).

Case Study 1: 360º video

The 360˚ Video of a waterslide (Glass Canvas UK, 2016) involves a travelling camera moving through different computer-generated scenarios such as Las Vegas, Dubai, a mountain, the inside of a house and a lake. There is no interactivity; 360˚ videos allow an immersive view without giving the user the option of using tools inside the simulation or moving the position of the camera. The user can only look around in 360˚. Affordances are limited to the movement of the user’s head, which determines the viewpoint from which the simulation is perceived. No other opportunity for action is involved: there are no tasks, there is no interactivity and the user does not use the controller. As the camera moves through the waterslide, the user can see different objects and landscapes; some objects are near the user’s perceptual body. The experience of spatial presence was ranked differently between participants (χ2 = 444.84, df = 104, P < 2.2e-16). 39.20% of participants agreed with the statements describing the experience of spatial presence, 36.37% strongly agreed, 19.12% neither agreed nor disagreed, 5.06% disagreed and 0.24% strongly disagreed. The simulation ranked high in question D, which measured the user’s level of attention. Interestingly, even though there was no interactivity involved, participants had the sensation that performing actions was possible.
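The percentage breakdown by level of agreement reported for each case study can be obtained with a simple tally. The sketch below assumes that answers are pooled across participants and questionnaire items before the percentages are taken; this pooling is an assumption, as are the function name and the example answers, none of which come from the study's data.

```python
from collections import Counter


def agreement_percentages(responses):
    """Return the percentage of answers at each Likert level (1 to 5).

    `responses` is a non-empty list of integers coded 1 to 5, pooled
    over participants and questionnaire items (an assumption).
    """
    if not responses:
        raise ValueError("at least one response is required")
    counts = Counter(responses)
    total = len(responses)
    # Levels with no answers are reported explicitly as 0.0.
    return {level: 100.0 * counts.get(level, 0) / total for level in range(1, 6)}


# Hypothetical pooled answers for one simulation.
shares = agreement_percentages([5, 5, 4, 3])
for level, share in shares.items():
    print(f"level {level}: {share:.2f}%")
```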

Case Study 2: Moss

Moss is an adventure VR game published by Polyarc (2018), which combines first and third person perspectives. The player begins the game in a library, reading a book that narrates the main story. Then, the player enters a forest, where a mouse named Quill has to be manipulated to solve puzzles and find her way through the adventure. It is played with one Dual Shock 4 hand controller. Affordances in this simulation are mostly induced through a third person perspective. Although the initial scene of the game begins with a first person perspective, subsequent scenes are played in third person through a mouse avatar that is seen from a distance, as in a model. Images 1 and 2 show the different viewpoints in Moss. Image 1 shows the initial scene in first person perspective, and Image 2 shows the following scene, played from a third person viewpoint, where the user moves a small mouse avatar seen from “outside”. Affordances in most of this game occur through the movement of the avatar. Only in the first scene do users embody and act from a first person perspective. As Image 1 shows, the blue crystal ball represents the user’s hands and is used to turn the pages of the book.

Source: Moss [videogame]. (n.d.b.).

Image 2 Third person perspective in Moss, enabled by a non-embodied avatar

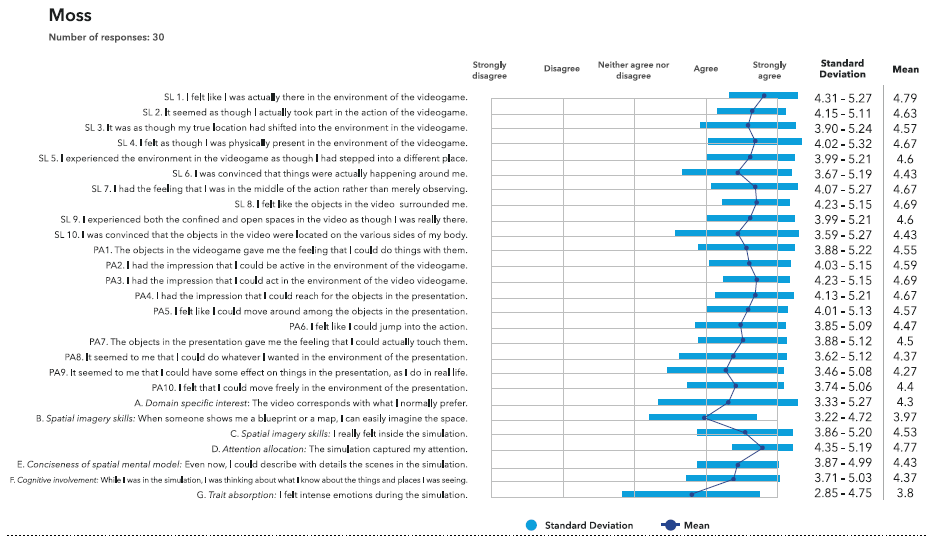

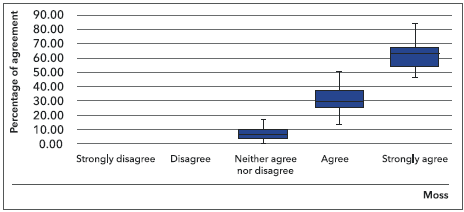

Playing the game in third person perspective means there is no embodied mental state; affordances are transferred to the avatar. The avatar’s possibilities for motor action include walking along a path in the woods, jumping, climbing and using a sword. The mouse performs these actions, while the user remains outside the scene, as if looking at a model of the mouse’s world from a distance. All actions were performed seated. Participants ranked the experience of spatial presence as follows (χ2 = 596.74, df = 104, P < 2.2e-16): 59.81% of participants strongly agreed with the statements describing spatial presence, 30.75% agreed, 8.34% neither agreed nor disagreed, 0.98% disagreed and 0.12% strongly disagreed.

Source: Author's elaboration.

Figure 9 Box plot percentage of level of agreement in statements describing the experience of spatial presence in Moss

Case Study 3: Eagle Flight

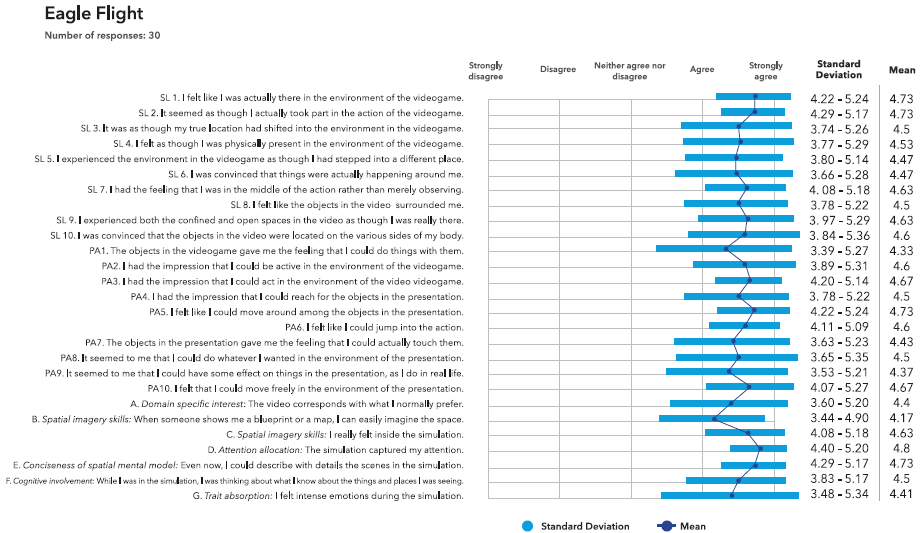

Eagle Flight is a flight simulator published by Ubisoft (2016) in which the player embodies an eagle flying over a post-apocalyptic version of the city of Paris. In this game, participants were instructed to choose the free flight mode. The main interaction performed by the user is directing flight through head movement; a Dual Shock 4 hand controller is used to increase or decrease the speed of flight. Tilting and turning the head-mounted display controls navigation. Navigational affordances are numerous in both type and frequency because the eagle has free range of motion during flight and it is possible to move in all directions. The eagle can pass through trees, buildings, bridges, tunnels, etc. However, it is not possible to stop moving, and the avatar cannot touch or interact with any objects in the scene. Because of this, most questions inquiring about possible actions ranked lower than in other simulations, except questions PA5 and PA10, which measure the sensation of moving among objects and the sensation of moving freely, respectively.

All actions were performed seated. Participants ranked the experience of spatial presence as follows (χ2 = 267.27, df = 78, P < 2.2e-16): 64.95% of participants strongly agreed with the statements describing spatial presence, 27.30% agreed, 5.54% neither agreed nor disagreed, 2.21% disagreed and 0% strongly disagreed.

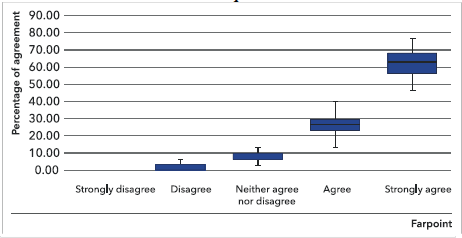

Case Study 4: Farpoint

Farpoint is a first person outer space adventure game published by Sony Interactive Entertainment (2017). The player begins inside a spaceship, which falls into a wormhole and crashes on an alien planet. It is played with one Dual Shock 4 hand controller. Users’ hands are represented by a gun, which can be used to shoot, throw, scan, select or click on menu items. In the first scene, users are inside the spacecraft, where they can only see outer space. After the crash, the user leaves the craft to explore. At this point, the first person avatar can walk in all directions and has to fight a giant spider. All actions were performed seated. Participants ranked differently the spatial presence they experienced while playing this game (χ2 = 248.22, df = 78, P < 2.2e-16): 62.22% of participants strongly agreed with the statements describing spatial presence, 26.42% agreed, 9.38% neither agreed nor disagreed, 1.98% disagreed and 0% strongly disagreed.

Source: Author's elaboration

Figure 13 Box plot percentage of level of agreement in statements describing the experience of spatial presence in Farpoint

Case Study 5: Batman Arkham

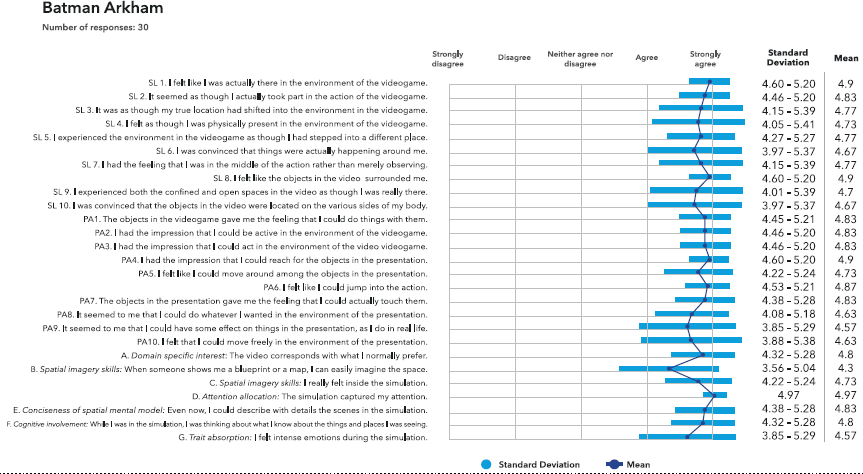

Batman Arkham is a first-person adventure VR game published by Warner Bros. Interactive Entertainment (2017). The player embodies Bruce Wayne and turns into Batman, using motion controllers to perform different actions. It is played with two PlayStation Move Motion Controllers. In the first scene, players put on the Batman suit while seeing themselves in a mirror. There is a close correspondence between the user’s movement and the avatar’s, which grants a stronger sense of embodiment than in the other games. Each hand is represented independently by its own motion controller.

The user can handle tools with each hand, shoot, throw, grab and turn a wider variety of objects. Users play standing and perform a wide range of bodily movements, such as putting on a helmet, pulling levers, using a syringe to sample blood, turning a key, playing piano and putting together a puzzle made of 3D pieces.

Participants’ rankings of the perceived sense of spatial presence differed significantly (χ2 = 287.34, df = 78, P < 2.2e-16): 80.25% of participants strongly agreed with the statements in the questionnaire, 16.05% agreed, 2.58% neither agreed nor disagreed, 1.11% disagreed and 0% strongly disagreed.

Source: Author's elaboration.

Figure 15 Box plot percentage of level of agreement in statements describing the experience of spatial presence in Batman Arkham

FINDINGS

Overall, the 5 simulations presented significant differences in the ranking of spatial presence (χ2 = 49.159, df = 16, P = 3.118e-05). According to the Likert scale questionnaire, participants felt the most presence with Batman Arkham, followed by Eagle Flight, Farpoint, Moss and the 360º Video. These results closely align with the intensity of spatial presence reported by participants at the end of the questionnaire, where they were asked to choose the game that gave them the most intense spatial presence: 50% chose Batman, 26.67% chose Eagle Flight, 16.67% chose Farpoint, 3.33% chose Moss and 3.33% chose the 360º Video. However, when participants were asked to choose the game that allowed them to perform actions most easily, 50% answered Eagle Flight, followed by Batman (36.6%), Moss (10%) and Farpoint (3.33%). No participant chose the 360º Video, which is to be expected because the simulation does not allow any interactive actions apart from head motion.

The general hypothesis of this study states that there is a positive correlation between affordances and spatial presence in VR (rQ = 0.6400; P < 0.001). The particular hypotheses identify the following variables as conditions that increase the sense of spatial presence: first person perspective, which increases sensorimotor embodiment; the degree of realism perceived by the user, which translates into a less perceptible technological interface; an increased level of attention; and an increased range of bodily movement in the simulation.

A key premise in this research is that virtual spaces with more types of affordances generate a higher intensity of spatial presence in the user, as ranked by the SPES. As expected, the general hypothesis was confirmed by the experiment. This is evident in the comparison of means shown in Figure 3 and in Figure 20, which show the general percentage of level of agreement with statements describing the experience of spatial presence between games. Other factors that might affect the assessment of spatial presence surfaced during the experiment and the analysis of data: self-reported motor and spatial skills; participants’ level of familiarity with virtual environments (which can determine dexterity with virtual tools); and the user’s proximity to objects and tools in the VR simulation. As previously stated by Hartman et al. (2004) and Wirth et al. (2007), users with higher visual imagery skills tend to rank higher in the score, as do users who are interested in the stimulus.

The 360˚ Video ranked considerably lower than the videogames in most questions, except in question G (trait absorption). When participants were asked if they felt intense emotions during the simulation, the lowest rank was given to Moss, closely followed by the 360˚ Video. However, when participants were asked to respond to question SL1, “I felt like I was actually there in the environment of the videogame”, Moss ranked second only to Batman. This suggests that emotional involvement, or trait absorption, did not affect the intensity of spatial presence.

Results confirm O’Regan and Noë’s view on spatial perception as an embodied, “exploratory activity” (2001, p. 940). They also match Heras-Escribano’s conceptualisation of affordances as a process in which the user explores the environment, interacts with it, modifies it and learns from it (2019, p. 20). As Sanders (1999) stated, affordances are rooted in the perceptual field, not in the physical environment. Therefore, dexterity and previous knowledge of virtual environments, and specifically of videogames, can potentially open the spectrum of perceived affordances. This is the key notion behind Merleau-Ponty’s concept of the intentional arc, which states that acquired skills modify the way objects and tasks appear to us (Dreyfus and Dreyfus, 1999). Dexterity with virtual tools allows for the construction of a more precise mental model of space, which is built inter-sensorially, mainly through aural, visual and kinesthetic experience. The development of a more intricate mental model of virtual space allows for an increase in the experience of flow and in attention towards the simulation, ultimately increasing the intensity of spatial presence. Affordances always emerge in our perceptual field in relation to what we already know about the space and tools around us, our learned skills in using these tools and our specific bodily capacities (Dreyfus and Dreyfus, 1999, p. 104). Regular videogame users displayed higher dexterity in finding routes and using tools within the VR environments, although more research is needed to explore the relation between intensity of spatial presence and users’ previous gaming experience. Only 5 participants stated that they play videogames almost every day; these same participants showed a superior level of dexterity with the hand controllers and with virtual tools inside the simulation.

Although previous knowledge is key for the user to perceive affordances, in this experiment no correlation was found between the intensity of spatial presence and frequency of videogame use. This might be because users build knowledge quickly in 3D immersive environments (Dan and Reiner, 2016). There is less cognitive load in VR than in 2D representations because the brain is used to working in 3D space in physical reality (Alex and Reiner, 2016). VR mimics the natural conditions of perception, which allows for a more immediate experience of spatial presence.

CONCLUSIONS

To summarize, the perception of space in VR is closely related to situated and embodied cognition. Spatial cognition in VR emerges from the interaction with virtual environments. The user’s perception of available affordances in VR affects his or her ability to move and act in the simulation. The variety of affordance types increases the intensity of spatial presence. In other words, virtual spaces with more opportunities for action provide the user with more possibilities for perceiving affordances, generating a higher intensity of spatial presence. The immersive visual perspective of VR graphics, understood as a set of perceptual affordances, is key to the experience of spatial presence. However, this research shows that motor affordances, such as navigational, goal-oriented and handle-grasp affordances, intensify spatial presence. Users performing voluntary movements and actions engage their individual sensorimotor contingencies directly with the VR interface. This binds the focused attention of the user (through performed actions) to specific VR elements, perceived as spatial tasks (navigational affordances), goal-oriented affordances and handle-grasp affordances. The results show that simulations that provide more opportunities for motor action yield higher ranks in the SPES, specifically when they include interactive objects and tools in the space near the user’s virtual body (peripersonal space), as seen in the game Batman Arkham. This was also observed when users were given a range of free movement, as in the game Eagle Flight. The SPES is a useful tool to measure spatial presence, although it is important to take into consideration other observable aspects of the user’s experience. First person perspective, bodily movement, kinesthetic sensation and liveliness of VR all play an important role in experiencing spatial presence in VR environments.

It is possible that in the future, as the appropriation of VR technology increases, we might come to perceive VR affordances with the same ease with which we perceive affordances in physical reality. Future research on affordances and spatial presence should explore the relation between dexterity in motor and spatial tasks and the intensity of spatial presence. Most participants in this research became acquainted with VR technology through the experiment. In a future experiment, it would be interesting to work with experienced, skilled participants to observe whether there is a correlation between their motor and spatial skills in VR and their experience of spatial presence. One of the main problems faced today by researchers in this area is the quantification of the user’s perceived affordances. The exact number of possible actions in a VR simulation is not the same as the number of perceived affordances. The problem of latent and invisible affordances remains. Perhaps this could be solved by observing learning curves and the discovery of affordances over longer periods of time, while using VR head-mounted displays equipped with eye-tracking technology.
