Introduction

The teaching-learning process in medicine combines theoretical instruction with the development of practical skills, which begin with the auscultation of healthy patients and later progress to identifying and treating patients with disease. Traditionally, teaching took the form of a tutorial in which the teacher worked with the student directly with patients1.

At present, however, the demand for health services has shortened the time devoted to each consultation2, so students cannot fully practice their auscultation skills or obtain feedback from their professor. In addition, clinical scenarios are not standardized, and patients with cardiac pathologies are sometimes scarce. Because errors in medicine carry a significant risk for the patient, safer systems for developing clinical skills are required3. For these reasons, some medical schools began to look for alternatives to traditional teaching, using other methods and techniques for clinical practice, such as simulated patients, simulators, or a combination of both.

Clinical simulation has shown advantages over other methods because it imitates aspects of real medical care, confronting students with the problems they will deal with daily in their medical practice. It also allows repetitive practice of skills until they are fully acquired, as well as their assessment with immediate feedback on performance4.

Since the late 1960s, simulation has been used to teach cardiac auscultation, both with manikins and with recordings of heart sounds5,6. The usefulness of simulation for this kind of practice is well established. However, simulation cannot fully replace the interaction students have with real or simulated patients, which encourages empathy and gives students more confidence during the physical examination than practicing on a simulator7,8. For this reason, some schools have developed hybrid models in which simulated patients wear electronic components on their torsos that reproduce different heart sounds9.

Despite all these strategies, students' cardiac examination skills have been documented to decline after they complete the course10,11. This has been partly attributed to the fact that some medical schools use simulation to introduce students to heart sounds rather than for repeated practice, and do not measure the impact of this teaching tool on cardiac auscultation performance12.

In this article, to measure the impact of simulation on cardiac auscultation performance, we compare the diagnostic accuracy and the scores obtained by students trained with simulators versus patients. We found that both techniques are equivalent for developing auscultation skills; however, students who trained with simulators achieved a higher frequency of correct diagnoses.

Method

We invited sixty-nine 4th-year students from a medical school to take part in this longitudinal study.

Baseline assessment

After giving consent, the students were randomized into two groups according to the teaching method to be used (A: simulators; B: patients). Each student was assigned a unique study number to guarantee anonymity.
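The paper does not describe how the randomization was carried out. As a minimal sketch, assuming simple 1:1 randomization of the anonymized study numbers with a seeded shuffle, an allocation could look like this; the function name `randomize_two_groups` and the seed value are hypothetical.

```python
import random


def randomize_two_groups(study_ids, seed):
    """Shuffle study numbers and split them 1:1 into group A and group B.

    Hypothetical helper: the study does not state its randomization procedure.
    """
    rng = random.Random(seed)          # seeded so the allocation can be audited
    shuffled = list(study_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    group_a = sorted(shuffled[:half])  # A: simulators
    group_b = sorted(shuffled[half:])  # B: patients
    return group_a, group_b


# Example: 46 anonymized study numbers (1..46), one per consenting student.
ids = range(1, 47)
a, b = randomize_two_groups(ids, seed=2018)
print(len(a), len(b))
```

Seeding the generator makes the allocation reproducible, which is useful if the allocation list must be verified later without revealing student identities.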

An initial test was performed on a cardiac simulator (Kyoto Kagaku model M8481-8, with high-quality sounds, 88 cardiac sounds, and palpable pulses) using a validated 16-item checklist to evaluate proper cardiac examination (see appendix); three of the items are specific to identifying abnormal cardiac examination findings. In addition, diagnostic accuracy was assessed for the five most common cardiac conditions seen in the clinical environment (aortic and mitral insufficiency; mitral, aortic, and tricuspid stenosis), measured with a dichotomous “yes or no” question on whether the correct diagnosis was given (Figure 1).
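The results report checklist scores on a 10-point scale, although the checklist has 16 items. One mapping consistent with every non-mean value in Table 1 (for example, 13/16 × 10 = 8.125 reported as 8.1, and 15/16 × 10 = 9.375 reported as 9.3) is the proportion of items performed correctly multiplied by 10 and truncated to one decimal. This is our assumption, not a procedure stated by the authors; `checklist_score` is a hypothetical name.

```python
def checklist_score(items_correct: int, total_items: int = 16) -> float:
    """Convert a checklist count into a 0-10 score.

    Assumption (not stated in the study): score = correct / total * 10,
    truncated (not rounded) to one decimal place. Integer floor division
    avoids floating-point surprises during truncation.
    """
    if not 0 <= items_correct <= total_items:
        raise ValueError("items_correct must be between 0 and total_items")
    tenths = (items_correct * 100) // total_items  # score in tenths, truncated
    return tenths / 10


for k in (4, 7, 9, 10, 11, 12, 13, 14, 15, 16):
    print(k, checklist_score(k))
```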

Intervention phase

To ensure a proper learning experience, both groups attended a theoretical lesson on cardiac examination skills given by a certified cardiologist with teaching experience, covering basic cardiovascular assessment, the cardiac cycle, and the cardiovascular pathologies most frequently observed in the hospital, as mentioned above. At the end of the lessons, the students received didactic material, including recordings of the heart sounds of the pathologies covered in class (Figure 2).

Next, group A had 2 hours of practical lessons on cardiac examination skills using cardiac simulators (Kyoto Kagaku model M8481-8) at the school of medicine, while group B had 2 hours of practical training on cardiac examination skills with live patients at the hospital. Both groups were taught by the same certified cardiologist, who showed them how to introduce themselves, place the patient in the correct position for the auscultation protocol, locate the auscultation points, and identify normal and abnormal heart sounds (Figure 1).

Final test

Once the lessons were finished, both groups took a final test on the cardiac simulator, using the same checklist as in the initial test and assessed by the same teacher. The session was videotaped to allow proper feedback (Figure 1).

Ethical considerations

All participants freely gave their informed consent to participate in this study. They were told that their participation was completely voluntary and that they could withdraw from the study at any time.

This study followed the principles of the Declaration of Helsinki and was approved by the Research Ethics Committee of the (name withheld to preserve anonymity during peer review) (Act Number 0220092018).

Statistical analysis

We used measures of central tendency and dispersion to describe the checklist scores, and the Mann-Whitney U test to compare the study groups, considering a p-value < 0.05 significant.
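The Mann-Whitney U test ranks the pooled scores of both groups and compares the rank sums. The following is a minimal standard-library sketch, assuming average ranks for tied scores and the tie-corrected normal approximation for a two-sided p-value without continuity correction; statistical packages may use exact p-values for small samples, so results can differ slightly. The function names are ours, not the authors', and the example scores are hypothetical placeholders, not study data.

```python
import math


def average_ranks(values):
    """Rank values from 1..n; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected).

    Returns (U, p) where U = min(U1, U2).
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2  # U for the first sample
    u2 = n1 * n2 - u1
    n = n1 + n2
    # Tie correction: sum of (t^3 - t) over each group of t tied values.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())
    var_u = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    if var_u <= 0:  # every score identical: no evidence of a difference
        return min(u1, u2), 1.0
    z = (u1 - n1 * n2 / 2) / math.sqrt(var_u)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p from the standard normal
    return min(u1, u2), p


# Hypothetical placeholder scores (not the study's data).
initial = [5.6, 6.2, 6.8, 7.5, 8.1]
final = [8.1, 8.7, 8.7, 9.3, 10.0]
u, p = mann_whitney_u(initial, final)
print(f"U = {u}, p = {p:.4f}")
```

Because the test uses only ranks, it does not assume normally distributed scores, which suits bounded checklist scores in small groups.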

Results

Of the 69 4th-year medical students invited to participate in this study, 46 gave consent and took part; all of them completed the study. Of these, 67% were men and 33% were women. Their mean age was 23 years (range, 21 to 26 years).

These students had already taken cardiology lessons as part of their medical school curriculum.

Students were randomly distributed into two groups (A and B) and took an initial test before any training (apart from their prior curricular background) and a final test one week after training. The two tests evaluated two parameters: “cardiac examination skills” and “diagnostic accuracy”.

The results are as follows:

Cardiac examination skills

Initial test

The mean score of the group to be trained with cardiac simulators (A) was 7.1/10 points, while that of the group to be trained with live patients (B) was 7.3. No statistically significant difference was found, indicating a similar baseline level of cardiac examination skills (Table 1).

Table 1 Measures of central tendency and dispersion of the scores obtained in the initial and final tests in both groups

| | Initial test, patients | Final test, patients | Initial test, simulators | Final test, simulators |
|---|---|---|---|---|
| Minimum | 2.5 | 7.5 | 4.3 | 7.5 |
| 25th percentile | 5.6 | 8.1 | 6.2 | 8.1 |
| Median | 6.8 | 8.7 | 7.5 | 8.7 |
| 75th percentile | 8.7 | 9.3 | 8.1 | 8.7 |
| Maximum | 10 | 10 | 8.7 | 9.3 |
| Mean | 7.0 | 8.7 | 7.2 | 8.5 |
| Std. deviation | 2.0 | 0.8 | 1.0 | 0.5 |
| Std. error of mean | 0.43 | 0.18 | 0.22 | 0.12 |

* The scale used was taken from the “CESIP, Centro de Enseñanza de Simulación de Posgrado, DICiM Universidad Nacional Autónoma de México UNAM”, Mexico City, with prior authorization.
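The statistics in Table 1 can be computed from raw scores with Python's `statistics` module. A minimal sketch follows; the scores are hypothetical placeholders, not the study's data, and the quartile interpolation (`method="inclusive"`) is an assumption, since the paper does not state which software or method was used. The standard error of the mean is the sample standard deviation divided by the square root of the group size.

```python
import math
import statistics


def describe(scores):
    """Summary statistics laid out like the rows of Table 1."""
    q1, _, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    sd = statistics.stdev(scores)  # sample SD (n - 1 denominator)
    return {
        "Minimum": min(scores),
        "25th percentile": q1,
        "Median": statistics.median(scores),
        "75th percentile": q3,
        "Maximum": max(scores),
        "Mean": statistics.fmean(scores),
        "Std. deviation": sd,
        "Std. error of mean": sd / math.sqrt(len(scores)),
    }


# Hypothetical placeholder scores on the 0-10 scale (not the study's data).
example = [7.5, 8.1, 8.1, 8.7, 8.7, 9.3, 10.0]
for name, value in describe(example).items():
    print(f"{name:>20}: {value:.2f}")
```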

Final test

To determine whether cardiac examination scores differed significantly depending on the teaching technique, the students took a final test after training.

The mean score of the group trained with simulators was 8.8/10 points, while that of the group trained with patients was 8.6. No statistically significant difference was found; however, since the scores show a trend toward higher results with simulation training, we think a larger sample size could make this difference clearer (Table 1).

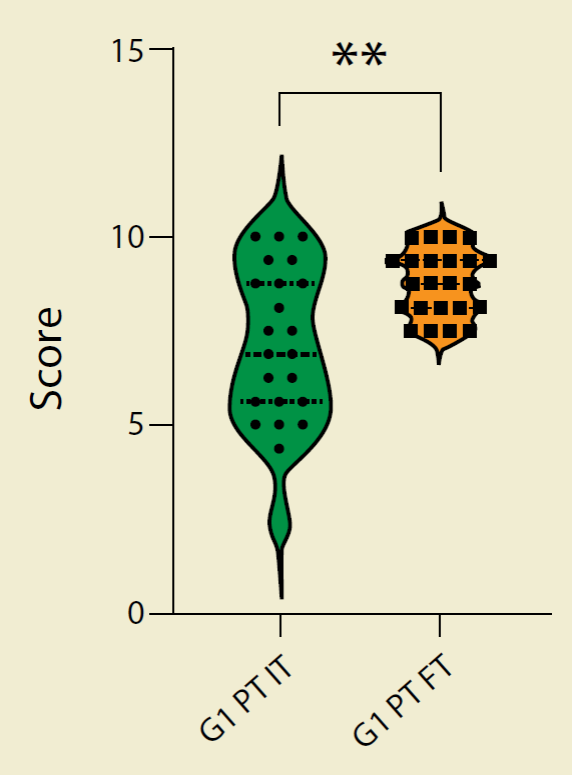

Although there was no significant difference in test results between training techniques, we found a significant difference between the initial and final test results in both groups (Figures 3 and 4).

Figure 3 Comparison between the initial and final mean scores of the students trained with patients. Mann-Whitney U test, p < 0.05. (G1 PT IT: group 1, patient-trained, initial test. G1 PT FT: group 1, patient-trained, final test.)

Diagnostic accuracy

Finally, we evaluated the students' ability to give the correct diagnosis (diagnostic accuracy) during the cardiac examination test in both groups. Although both groups improved their diagnostic accuracy after training, the group trained with cardiac simulators had the higher frequency of correct diagnoses (16 of 23 vs. 13 of 23 students).
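Expressed as proportions of each group of 23 students, the frequencies of correct diagnosis reported above work out as follows (the paper reports these frequencies without a significance test):

$$
\hat{p}_{\text{sim}} = \frac{16}{23} \approx 0.696 \;(69.6\%), \qquad
\hat{p}_{\text{pat}} = \frac{13}{23} \approx 0.565 \;(56.5\%), \qquad
\hat{p}_{\text{sim}} - \hat{p}_{\text{pat}} = \frac{3}{23} \approx 0.130
$$

That is, a difference of about 13 percentage points in favor of the simulator-trained group.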

Discussion

Simulation is a particular type of modeling. As a way of understanding the world, it can simplify our understanding of it and make it more reproducible, educational, and risk-free. Borrowing from Aristotle, “for the things we have to learn before we can do them, we learn by doing them”; in this article we try to understand the difference between the use of clinical simulators and patients in medical education, specifically for cardiology training.

Cardiac examination skills are documented to decline over time in medical students and doctors, hence the importance of continuous practice, which can be carried out using simulators. Simulators allow clinical examination protocols to be repeated in order to gain competencies, improve performance, acquire and master skills, and ultimately become an expert13,14.

In our study, both groups had already received training in cardiology according to the medical school's curriculum; however, at the end of the study we observed better performance in the cardiac examination regardless of the teaching method used. This suggests that the technique does not determine skill acquisition; rather, deliberate and continuous practice strengthens it, so that responses to a medical problem become intuitive and systematic. The medical student or doctor can then respond appropriately without hesitation, which helps reduce the risk of errors15,16.

This agrees with previous reports by Issenberg et al (2002), who applied tests in a cardiology review course for internal medicine residents using simulation technology versus deliberate practice17 and found a significant difference between the grades obtained before and after the course.

Kern and Mainous reported that students trained in cardiac examination skills with standardized patients plus a cardiopulmonary simulator performed significantly better than the control group. In contrast, our study showed no significant difference in performance between medical students trained with patients and those trained with cardiac simulators.

This could be explained by the difference in sample size: Kern and Mainous compared many more participants (281 in the control group and 124 in the study groups) than the 46 medical students in our study18.

In the present study, in addition to an improvement in student performance from before to after training, we also observed better diagnostic accuracy, which suggests that the learning objectives were achieved.

These results contrast with the findings of Gauthier, Johnson, et al (2019), who reported no differences in mean Objective Structured Clinical Examination (OSCE) scores using real patients19.

However, standardized scenarios and simulation learning objectives help ensure the quality of medical training, since all students can learn the same content. This is not always possible in a hospital or clinic, where pathologies vary from one patient to another. Moreover, patients sometimes do not want to be examined by a medical student or a group of students.

Simulation alone cannot guarantee that users acquire clinical skills if they lack opportunities for deliberate and constant practice20,21, which is why McKinney, Cook, Wood, et al (2013) suggest that future studies should compare key features of instructional design and establish the effectiveness of simulation-based medical education (SBME) compared with other educational interventions22.

In addition, based on the results of this study, we recommend combining a teaching program using cardiac simulators with training on real or standardized patients, actively involving students and paying special attention to debriefing, which is considered the most important part of training23. This would favor the teaching-learning process, with teachers acting as guides or facilitators within a problem-solving model, since this approach develops problem-solving skills24.

This is challenging because simulation is still under development in many countries for several reasons: some consider it a time-consuming teaching method, some students find it difficult to engage with simulators, and some teachers may be reluctant to use them25. Nevertheless, it is worth implementing, since the simulation adage holds true: “Never the first time on a patient”13. We therefore propose a general guideline for creating a successful simulation experience, which should be complemented by a future evaluation of the learning experience as reported by students (Figure 5).

Limitations

A limitation of the present study was the number of participating students; a larger sample could have led to different results.

Conclusions

Clinical simulation and patient training are two different ways of achieving the same goal. Many reports claim that simulation is the best way to train medical students, but in this study we did not observe a statistically significant difference between the two. This is not, however, a disadvantage. Clinical simulation showed a trend toward a better learning experience, reflected in the scores and in better diagnostic accuracy (Figures 3 and 4). The lack of a significant difference between the simulator and patient groups may be explained by the small sample size.
