Ingeniería, investigación y tecnología

Online version ISSN 2594-0732; print version ISSN 1405-7743

Ing. invest. y tecnol. vol.12 no.2 Ciudad de México Abr./Jun. 2011

A LabVIEW–based Autonomous Vehicle Navigation System using Robot Vision and Fuzzy Control

Sistema de navegación autónoma de un vehículo usando visión robótica y control difuso en LabVIEW

Ramírez–Cortés J.M.¹, Gómez–Gil P.², Martínez–Carballido J.³ and López–Larios F.⁴

1 Coordinación de Electrónica Instituto Nacional de Astrofísica, Óptica y Electrónica. E–mail: jmram@inaoep.mx

2 Coordinación de Computación Instituto Nacional de Astrofísica, Óptica y Electrónica. E–mail: pgomez@inaoep.mx

3 Coordinación de Electrónica Instituto Nacional de Astrofísica, Óptica y Electrónica. E–mail: jmc@inaoep.mx

4 Departamento de Ingeniería Electrónica Universidad de las Américas, Puebla. E–mail: lopez.filiberto@gmail.com

Article information: received October 2007; accepted October 2010.

Abstract

This paper describes a navigation system for an autonomous vehicle, developed for academic purposes, that applies machine vision techniques to images of the track captured in real time. The experiment consists of the automatic navigation of a remote control car around a closed circuit. The environment is sensed through a wireless camera using computer vision techniques. The received images are captured into the computer through the acquisition card NI USB–6009 and processed in a system developed on the LabVIEW platform, taking advantage of its toolkit for image acquisition and processing. Fuzzy logic control techniques are incorporated for the intermediate control decisions required during the car navigation. An efficient approach based on a state machine is used to implement the changes required by the fuzzy logic control. Results and concluding remarks are presented.

Keywords: fuzzy, control, robot, vision, autonomous, navigation.

Resumen

En este artículo se presenta un sistema de navegación para un vehículo autónomo usando técnicas de visión robótica, desarrollado en LabVIEW con fines académicos. El sistema adquiere en tiempo real las imágenes del camino por recorrer. Estas imágenes son enviadas en forma inalámbrica a una computadora, en donde un sistema de control, basado en reglas de control difuso, toma las decisiones de movimiento correspondientes. La computadora envía en forma inalámbrica las señales adecuadas al vehículo de control remoto, cerrando de esta manera el lazo de control. Las imágenes son capturadas en la computadora a través de la tarjeta de adquisición NI USB–6009 y procesadas en un sistema desarrollado bajo la plataforma de LabVIEW y sus herramientas de adquisición, procesado de imágenes y control difuso. Se incorpora un eficiente esquema de diseño basado en máquinas de estados para la navegación por las diversas escenas detectadas por la cámara. Se presentan resultados y conclusiones de este trabajo.

Descriptores: difuso, control, visión, navegación, autónoma, robot.

Introduction

An autonomous navigation system consists of a self–piloted vehicle that does not require an operator to navigate and accomplish its tasks. The aim of an autonomous vehicle is to be self–sufficient and to carry a decision–making heuristic that allows it to move automatically in its environment and to accomplish the required tasks (Armingol et al., 2007). Areas where autonomous vehicles have been used successfully include space rovers, rice planting and agricultural vehicles (Tunstel et al., 2007; Nagasaka et al., 2004; Zhao et al., 2007), autonomous driving in urban areas (De la Escalera et al., 2003), security and surveillance (Srini, 2006; Flan et al., 2004; Micheloni et al., 2007), and the exploration of places where human life may be at risk, such as a mine with toxic gases or a nuclear plant during a disaster (Isozaki et al., 2002). There is a wide variety of autonomous vehicles, with extensive classifications and categories depending on their characteristics (Bertozzi et al., 2000). Characteristics described in the literature include autonomy level, data acquisition methods, localization methods, goal tasks, displacement techniques, and control methods. The project presented in this paper is restricted to the autonomous navigation of a small remote control car around a closed circuit. Although this is a very specific task in a controlled environment, it is intended to provide a platform for academic purposes on which several navigation control approaches can be tried and different image pre–processing schemes can be used in educational experiments. The software package LabVIEW and its toolboxes for image analysis, computer vision, and fuzzy logic control (NI 2002, 2005) were found to be an excellent platform for experimentation, allowing quick design, implementation, and testing of prototypes. Experimentation with this prototype is expected to continue in order to explore further tasks, which would require more sophisticated control heuristics drawn from artificial intelligence or neural network techniques.

Hardware description

The implemented system consists of the wireless control of a small remote control car from a laptop computer. The vehicle is equipped with a wireless camera that sends the video signal of the path in real time. Once the video stream enters the computer, it is processed by a LabVIEW application, which generates the control signals applied to the remote control of the vehicle. A block diagram of the system is presented in figure 1. The hardware used is the following: a data acquisition (DAQ) card NI USB–6009, a JMK wireless analog mini–camera, a Dazzle USB video capture card, and a small digital remote control car.

The wireless camera transmits a video signal with a horizontal resolution of 380 TV lines at a frequency of 1.2 GHz in the ISM (Industrial, Scientific, and Medical) radio band. Because the output port of the I/O card can supply only a limited current, a simple optocoupler–based interface was included between the NI card and the remote control as a signal conditioning stage. The digital signals obtained from the interface are applied to the remote control, which sends the movement commands to the 27 MHz radio–controlled car, closing the loop.

Fuzzy logic control in LabVIEW

Fuzzy logic control has been used extensively in many applications, including commercial products. Many references and books review the basic theory in detail (Kovacic et al., 2005; Jantsen et al., 2007), and some address fuzzy control in the context of autonomous navigation (Hagras et al., 2004). For the purposes of this work, it is important to point out the two types of fuzzy inference systems most used currently: Mamdani–type and Sugeno–type (Zhang et al., 2006; Ruano, 2005). The two types differ in the way the outputs are determined. The control described in this paper uses the Mamdani type, which includes fuzzification of the input data based on membership functions, an inference rule database, and defuzzification of the output signal. Mamdani–type inference expects the output membership functions to be fuzzy sets; consequently, after aggregation of the rule outputs there is a fuzzy set for each output variable that must be defuzzified. The LabVIEW toolbox for fuzzy logic control was found to be an excellent platform to support the design, implementation, and testing of a control system based on fuzzy logic techniques.

This toolbox, together with the image analysis and acquisition library IMAQ–VISION and Vision Builder from NI, was the key to a quick and accurate implementation of this project. The LabVIEW toolbox for fuzzy logic control includes an editor for the fuzzy variables and their triangular and trapezoidal membership functions, a friendly rule base editor for entering the if–then rules of the fuzzy controller, and several defuzzification methods, such as centroid, center of maximum, and min–max, which are mathematical operations on the two–dimensional function obtained by combining the fuzzy outputs.
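The Mamdani pipeline described above (fuzzification, rule inference, aggregation, centroid defuzzification) can be illustrated with a minimal Python sketch. This is not the LabVIEW implementation used in the project: the set names, the normalized input range, the breakpoints, and the three rules are assumptions chosen only to keep the example short.

```python
def tri(x, a, b, c):
    """Triangular membership function with feet at a and c and peak at b (a < b < c)."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)

# Hypothetical partitions: input is a normalized lateral displacement in [-1, 1],
# output is a steering angle in degrees within the +/-45 range quoted in the text.
INPUT_SETS = {
    "left": (-2.0, -1.0, 0.0),
    "center": (-1.0, 0.0, 1.0),
    "right": (0.0, 1.0, 2.0),
}
OUTPUT_SETS = {
    "steer_left": (-90.0, -45.0, 0.0),
    "steer_zero": (-45.0, 0.0, 45.0),
    "steer_right": (0.0, 45.0, 90.0),
}
# Hypothetical rule base: IF displacement is <key> THEN steering is <value>.
RULES = {"left": "steer_right", "center": "steer_zero", "right": "steer_left"}

def mamdani(x, samples=181):
    """Mamdani inference with min implication, max aggregation, centroid defuzzification."""
    # Fuzzification: degree of membership of x in every input set.
    strengths = {name: tri(x, *abc) for name, abc in INPUT_SETS.items()}
    num = den = 0.0
    for i in range(samples):
        y = -45.0 + 90.0 * i / (samples - 1)  # sample the output universe
        # Clip each consequent by its rule strength (min), then aggregate (max).
        mu = max(min(strengths[inp], tri(y, *OUTPUT_SETS[out]))
                 for inp, out in RULES.items())
        num += y * mu
        den += mu
    return num / den if den else 0.0
```

With these assumed sets, a centered car yields a steering angle of zero, and a car displaced fully to one side yields a correction toward the opposite side.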

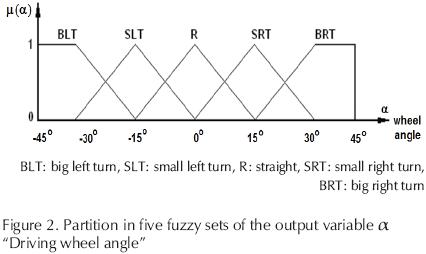

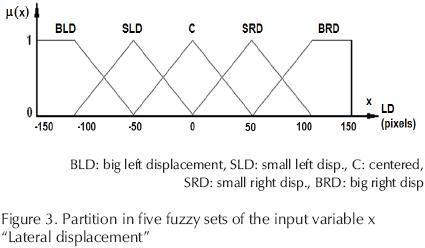

In this project there are two output variables: the steering wheel angle and the speed. These two signals are connected to the remote control as the guidance mechanism of the vehicle. Each signal takes one of sixteen values coded in 4 bits. The speed covers positive values and negative values, the latter for movement in reverse, and the steering wheel angle covers a range of ±45°. Figure 2 shows the partition of the variable α, "Driving wheel angle", into five fuzzy sets. Similarly, the output variable "Speed" is partitioned into five fuzzy sets: BM: back medium, BS: back small, SS: straight small, SM: straight medium, SH: straight high.

The input information needed to locate the relative position of the vehicle comes from the image sequence delivered by the wireless camera, a stream of 30 images per second. The program automatically segments the front of the car and marks it with a yellow box as the image reference, and it segments the path lanes and marks them with two red lines. The markings are shown on the screen as a visual representation and are simultaneously recorded in the image file. The numerical information obtained from both markings is then used by the tracking algorithm. The fuzzy input variables used in this work are the lateral distance to the nearest lane and the inclination angle of the incoming curve, obtained as the average of the two angles detected from the lateral borders of the road. These variables are represented by membership functions derived from the partition of each variable into five fuzzy sets. Figure 3 shows the partition of the input variable x, "Lateral displacement". Similarly, the input variable "Angle of the incoming curve" is partitioned as: LTC: left tight curve, LSC: left soft curve, S: straight, RSC: right soft curve, RTC: right tight curve.

The inference rules of the fuzzy control system, in IF–THEN form, are stored in a database that is accessed in each iteration. The database was constructed considering the actions that a human driver would perform in each situation along the trajectory, within the range designated for each variable. This information is then analyzed to determine the position of the car with respect to the path and the action required to keep the car on the circuit. The numerical information regarding the angle of the lines representing the road path is coded as a fuzzy variable to be used in the control system, as described in the next sections.
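As an illustration of how a two–input IF–THEN rule base of this kind can be evaluated, the following Python sketch fires a handful of rules using min for the AND of the two antecedents and max to merge rules that share a consequent. The curve and speed labels come from the text; the displacement and steering labels and every rule listed are hypothetical, since the actual rule base is not reproduced in this paper.

```python
CURVE_SETS = ("LTC", "LSC", "S", "RSC", "RTC")  # labels from the text
SPEED_SETS = ("BM", "BS", "SS", "SM", "SH")      # labels from the text
# Hypothetical labels for the sets that the text does not name.
DISPLACEMENT_SETS = ("far_left", "left", "centered", "right", "far_right")
STEERING_SETS = ("hard_left", "left", "zero", "right", "hard_right")

# Hypothetical excerpt of a rule base:
# (displacement, curve) -> (steering, speed)
RULES = {
    ("centered", "S"): ("zero", "SH"),
    ("centered", "RSC"): ("right", "SM"),
    ("centered", "RTC"): ("hard_right", "SS"),
    ("left", "S"): ("right", "SM"),
    ("far_left", "S"): ("hard_right", "SS"),
}

def fire_rules(disp_degrees, curve_degrees):
    """Return firing strengths of the steering and speed consequents.

    disp_degrees and curve_degrees map set labels to membership degrees
    obtained by fuzzifying the two inputs.
    """
    steering, speed = {}, {}
    for (disp, curve), (steer_label, speed_label) in RULES.items():
        # AND of the two antecedents is taken as the minimum.
        w = min(disp_degrees.get(disp, 0.0), curve_degrees.get(curve, 0.0))
        if w > 0.0:
            # Rules with the same consequent are merged with the maximum.
            steering[steer_label] = max(steering.get(steer_label, 0.0), w)
            speed[speed_label] = max(speed.get(speed_label, 0.0), w)
    return steering, speed
```

The resulting strengths would then clip the output membership functions before defuzzification, once for the steering angle and once for the speed.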

The LabVIEW IMAQ vision toolbox

The IMAQ Vision library is a collection of virtual instruments for the design and implementation of scientific computer vision and image analysis applications. It includes tools for vision controls using different types of images and image processing operations such as binarization, histograms, filters, and morphological operations. It also provides options for graphical and numerical image analysis through lines, circles, squares, or coordinate systems on the captured image. In addition, National Instruments has developed Vision Builder, an interactive software package for configuring and implementing complete machine vision applications using the same graphical philosophy as LabVIEW, with high–level operations such as classification and optical character recognition. In the project described in this paper, the image is captured from video, converted from color to gray scale, and binarized with the corresponding tools. The image of the road is analyzed in order to segment the lines defining the path. Numerical information about the relative position of these lines with respect to the reference, which is the center of the car, and about their angle is obtained through the analysis of those lines and is coded as fuzzy variables to be used in the control.
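The processing chain just described (gray–scale conversion, binarization, location of the lane lines, and extraction of distance and angle) can be sketched in plain Python on a small image stored as nested lists. This is only an illustrative stand–in for the IMAQ Vision tools: the function names, the threshold value, the luminance weights, and the row–scanning method for locating the lines are all assumptions.

```python
import math

def to_gray(rgb_rows):
    """Convert rows of (r, g, b) pixels to luminance values (assumed BT.601 weights)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb_rows]

def binarize(gray_rows, threshold=128):
    """Map each pixel to 1 (lane marking) or 0 (background); threshold is assumed."""
    return [[1 if v >= threshold else 0 for v in row] for row in gray_rows]

def lane_columns(binary_row):
    """Columns of the leftmost and rightmost marked pixels in a row, or None."""
    cols = [c for c, v in enumerate(binary_row) if v]
    if len(cols) < 2:
        return None
    return cols[0], cols[-1]

def lateral_distances(binary_row, center_col):
    """Distances in pixels from the car center to the left and right lines.

    Assumes both lines are visible in the row.
    """
    left, right = lane_columns(binary_row)
    return center_col - left, right - center_col

def lane_angle_deg(binary_rows, near_row, far_row):
    """Average inclination of both lane borders, in degrees from vertical.

    Rows grow downward, so far_row < near_row; positive angles lean right.
    Assumes both lines are visible in both rows.
    """
    near = lane_columns(binary_rows[near_row])
    far = lane_columns(binary_rows[far_row])
    rise = near_row - far_row
    angles = [math.degrees(math.atan2(f - n, rise)) for n, f in zip(near, far)]
    return sum(angles) / len(angles)
```

The two numbers produced here, a lateral distance and an averaged border angle, correspond to the two fuzzy input variables named earlier in the paper.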

Programming approach

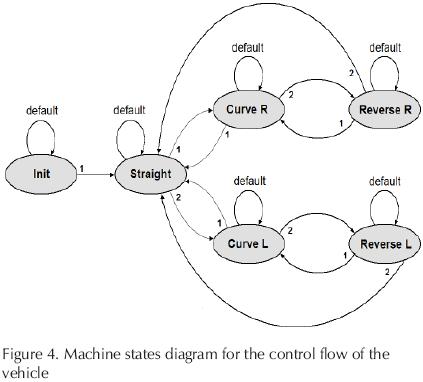

The control program was developed in LabVIEW following a state–machine design approach. According to the scene detected, the vehicle can be in one of six states, as described in the state diagram of figure 4. Once the system is turned on, the car stays in the state 'init' until the user presses the Start button. Pressing this button initializes the route and moves the car to the state 'straight'. In each state, the system waits for the camera to sense the road, derives the numerical information about the relative position of the car, and makes a decision according to the fuzzy inference rules stored in the database. After defuzzification, the output signals are sent to the car through the wireless remote control, which closes the control loop; the state machine makes the corresponding transition, and an iteration is completed.
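A state machine of this kind can be sketched in Python as a pure transition function. Only the states 'init' and 'straight' and the start and stop buttons are named in this paper; the two curve states below, and the way the curve label selects the next state, are hypothetical placeholders for the remaining states of figure 4.

```python
from enum import Enum

class State(Enum):
    INIT = "init"                # named in the text
    STRAIGHT = "straight"        # named in the text
    LEFT_CURVE = "left curve"    # hypothetical state
    RIGHT_CURVE = "right curve"  # hypothetical state

def next_state(state, start_pressed, stop_pressed, curve_label):
    """Choose the state for the next iteration.

    curve_label is the dominant fuzzy label of the incoming curve
    (LTC, LSC, S, RSC, or RTC); using it to pick the state is an assumption.
    """
    if stop_pressed:
        return State.INIT          # stop always returns to 'init'
    if state is State.INIT:
        # Wait in 'init' until the user presses Start.
        return State.STRAIGHT if start_pressed else State.INIT
    if curve_label in ("LTC", "LSC"):
        return State.LEFT_CURVE
    if curve_label in ("RSC", "RTC"):
        return State.RIGHT_CURVE
    return State.STRAIGHT
```

Keeping the transition logic separate from the per–state processing mirrors the remark in the paper that the internal routines of the states are basically the same.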

Figure 5 shows the code in the LabVIEW graphical language corresponding to the state 'straight'. This diagram has an input signal corresponding to the image of the road obtained from the wireless camera and an output signal connected through the DAQ Assistant, which generates the electric signal required by the remote control. The main operation relies on three sub–virtual instruments named 'straight', 'fuzzy', and 'wheel', which evaluate the video signal obtained from the camera, calculate the numerical information about the relative position of the car, and derive the corresponding action based on the fuzzy inference rules contained in the database. The internal routines of each state are basically the same, with small differences according to the corresponding position, so for the purposes of this paper only the state 'straight' is explained.

Inside the main block in figure 5 is a sub–virtual instrument called 'straight', whose purpose is to obtain the numerical representation derived from the visual information of the road. Figure 6 shows the code used to derive two values, named 'max' and 'min', measured with respect to the center of the vehicle from the right and left lines obtained from the input image of the road.

The values max, min, and center are passed to the next stage, which applies the fuzzy logic rules to obtain the output value that controls the steering wheel angle and, consequently, the displacement of the vehicle. The output value is converted to the 4–bit digital word required by the remote control through a table containing the corresponding codes, as shown in figure 7. Once all the operations are completed, the process starts again in a new state that depends on the position of the vehicle. When the 'stop' button in the main display is pressed, the program goes to the state 'init', where it waits until the user decides to resume the car movement or to end the process.
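The conversion of a defuzzified output into a 4–bit word through a code table can be sketched in Python as follows. The actual codes of figure 7 are not reproduced in this paper, so the table below, which simply uses the binary value of the level index, and the uniform quantization over the ±45° steering range are assumptions.

```python
# Hypothetical code table: level index 0..15 mapped to "0000".."1111".
CODE_TABLE = [format(i, "04b") for i in range(16)]

def quantize(value, lo, hi, levels=16):
    """Clamp value to [lo, hi] and map it to one of `levels` uniform bins."""
    clamped = max(lo, min(hi, value))
    index = int((clamped - lo) / (hi - lo) * levels)
    return min(levels - 1, index)  # the upper bound falls in the last bin

def steering_word(angle_deg):
    """Look up the 4-bit word for a steering angle in the +/-45 degree range."""
    return CODE_TABLE[quantize(angle_deg, -45.0, 45.0)]
```

Each character of the returned string would drive one digital output line toward the remote control; the same lookup could serve the speed signal with its own range.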

Results

Figure 8 shows the front panel of the program in two cases. The front panel consists of the start and stop buttons, an indicator of the current machine state, visual indicators for the speed and the steering wheel angle, and a window showing the scene viewed from the car in real time. When the 'start' button is pressed it turns green, the machine state changes to 'straight', and the vehicle starts moving. The first case shown in figure 8 corresponds to the initial state, just after the system is turned on. The second shows the case in which the vehicle detects a curve to the right and the steering wheel is set to make the turn.

A simple experiment was carried out to test the response of the vehicle. The car was placed on a straight track 4 meters long, with an initial position 70 pixels off center. The car was expected to correct its position until it reached the center. The experiment was repeated several times using partitions of the variables into three and five fuzzy sets. Averaging the trajectories gave the curves shown in figure 9. The vehicle stabilizes after some oscillations in approximately 1.5 meters. Additional experiments and results can be found in the complete project report (Lopez, 2007).

Conclusions

An autonomous vehicle navigation system based on fuzzy logic control techniques with robot vision capabilities has been presented. The experiment was designed for academic purposes on the LabVIEW platform, taking advantage of the toolbox for fuzzy logic control and the acquisition library IMAQ–VISION. These resources were found to be an excellent tool set for carrying out, in a short time and with very good flexibility and performance, the design, implementation, and testing of a control system such as the one described in this paper. In particular, the state–machine control paradigm is an interesting approach to automatic vehicle navigation, as well as a didactic case study. The prototype is ready to support further experimentation on different tasks, including different control heuristics using artificial intelligence techniques in different environments. It is also worth pointing out that a rigid camera like the one used in this project is a considerable limitation. A more sophisticated camera with options such as pan, tilt, or zoom could be a significant improvement, since it would make it possible to anticipate trajectories and plan movements in advance, at a reasonable increase in the cost of the prototype.

Acknowledgments

The authors would like to thank the anonymous reviewers for their detailed and helpful comments.

References

Armingol J.M., De la Escalera A., Hilario C., Collado J.M., Carrasco J.P., Flores M.J., Pastor J.M., Rodríguez J. IVVI: Intelligent Vehicle Based on Visual Information. Robotics and Autonomous Systems, 55(12):904–916. December 2007. ISSN: 0921–8890.

Bertozzi M., Broggi A., Fascioli A. Vision–Based Intelligent Vehicle: State of the Art and Perspectives. Robotics and Autonomous Systems, 32(1):1–16. January 2000. ISSN: 0921–8890.

De la Escalera A., Mata M. Traffic Sign Recognition and Analysis for Intelligent Vehicle. Image and Vision Computing, 21(3):247–258. July 2003. ISSN: 02

Flan N.N., Moore K.L. A Small Mobile Robot for Security and Inspection. Control Engineering Practice, 10(11):1265–1270. 2004. ISSN: 0967–0661.

Hagras H.A. A Hierarchical Type–2 Fuzzy Logic Control Architecture for Autonomous Mobile Robots. IEEE Transactions on Fuzzy Systems, 12(4):524–539. August 2004. ISSN: 1063–6706.

Isozaki Y., Nakai K. Development of a Work Robot with a Manipulator and a Transport Robot for Nuclear Facility Emergency Preparedness. Advanced Robotics, 16(6):489–492. 2002. ISSN: 0169–1864.

Jantsen J. Foundations of Fuzzy Control. West Sussex, England. John Wiley & Sons. 2007. Pp. 13–69.

Kovacic Z., Bogdan S. Fuzzy Controller Design: Theory and Applications, Control Engineering Series. Boca Raton, Florida, U.S. CRC Press, Taylor and Francis Group. 2005. Pp. 9–40.

Lopez L.F. Navegación de un vehículo guiado por tratamiento y análisis de imágenes con control difuso. Tesis (Maestría en ciencias en electrónica). México. Universidad de las Américas, Puebla. 2007. 132 p.

Micheloni C., Foresti G.L., Piciarelli C., Cinque L. An Autonomous Vehicle for Video Surveillance of Indoor Environments. IEEE Transactions on Vehicular Technology, 56(2):487–498. 2007. ISSN: 0018–9545.

Nagasaka Y., Umeda N., Kanetia Y., Taniwaki K., Sasaki Y. Autonomous Guidance for Rice Transplanting Using Global Positioning and Gyroscopes. Computers and Electronics in Agriculture, 43(3):223–234. 2004. ISSN: 0168–1699.

NI Fuzzy Logic Control Toolbox. Application Notes, National Instruments. 2002.

NI Vision for LabVIEW User Manual, National Instruments. 2005.

Ruano A.E. Intelligent Control Systems using Computational Intelligence Techniques. London, United Kingdom. The Institution of Engineering and Technology. 2005. Pp. 3–34.

Srini V.P. A Vision for Supporting Autonomous Navigation in Urban Environments. Computer, 39(12):68–77. 2006. ISSN: 0018–9162.

Tunstel E., Anderson G.T., Wilson E.W. Autonomous Mobile Surveying for Science Rovers Using in Situ Distributed Remote Sensing. In: IEEE International Conference on Systems, Man and Cybernetics. Montreal, Canada. October 2007, pp. 2348–2353.

Zhang H., Liu D. Fuzzy Modeling and Fuzzy Control. Boston. Birkhauser. 2006. Pp. 33–75.

Zhao B., Zhu Z., Mao E.R., Song Z.H. Vision System Calibration of Agricultural Wheeled–Mobile Robot Based on BP Neural Network. In: International Conference on Machine Learning and Cybernetics. Hong Kong, China. August 2007, pp. 340–344.

About the authors

Juan Manuel Ramírez–Cortés. He was born in Puebla, Mexico. He received the B.Sc. degree from the National Polytechnic Institute, Mexico, the M.Sc. degree from the National Institute of Astrophysics, Optics, and Electronics (INAOE), Mexico, and the Ph.D. degree from Texas Tech University, all in electrical engineering. He is currently a Titular Researcher at the Electronics Department, INAOE, in Mexico. He is a member of the National Research System, level 1. His research interests include signal and image processing, computer vision, neural networks, fuzzy control, and digital systems.

Pilar Gómez–Gil. She was born in Puebla, Mexico. She received the B.Sc. degree from Universidad de las Americas A.C, Mexico, and the M.Sc. and Ph.D. degrees from Texas Tech University, USA, all in computer science. She is currently an Associate Researcher in computer science at INAOE, Mexico. She is a member of the National Research System, level 1. Her research interests include neural networks, image processing, fuzzy logic, pattern recognition, and software engineering. She is a senior member of IEEE and a member of ACM.

Jorge Martínez–Carballido. He received the B.Sc. degree in electrical engineering from Universidad de las Americas, Mexico, and the M.Sc. and Ph.D. degrees in electrical engineering, both from Oregon State University. He is currently a Titular Researcher at the National Institute of Astrophysics, Optics, and Electronics (INAOE), Mexico. His research interests include digital systems, reconfigurable hardware, signal and image processing, and instrumentation.

Filiberto López–Larios. He received the B.Sc. degree in electronics and communications engineering from Universidad La Salle Bajío, and the M.Sc. degree in electrical engineering from Universidad de las Américas Puebla. His research interests include digital signal processing, fuzzy control systems, and the design of applications based on virtual instrumentation.