1 Introduction

Nowadays, thanks to different music streaming services such as Spotify, Pandora or Apple Music, we have access to tens of millions of musical pieces [17], which makes it increasingly difficult for users to find songs that are of interest to them and also according to their tastes [1]. However, in the field of information systems, plenty of research has been carried out to reduce the search cost and to offer relevant elements of the huge amount of accessible data. For that reason, we have several systems that provide us with musical pieces, and also study the user’s behavior to recommend music of interest and thus facilitate the interaction with the system. To achieve this, there are two basic approaches to recommendation, the content-based filtering that focuses on the description of the song to propose musical pieces to the user and the collaborative filtering that offers the user relevant elements of other nearby users according to their profile and listening history [2]. Likewise, there are proposals that combine the aforementioned approaches with some other characteristic of the user such as their context, or their uncommon tastes as in [3, 4,1].

These investigations usually recommend musical pieces that match the preferences of their users. However, they are still far from perfect and often produce unsatisfactory recommendations. This arises as a result of the fact that users’ tastes and musical needs depend largely on a multitude of factors [14], and they do not take advantage of different artificial intelligence tools such as natural language processing (NLP), taking into account that within the words, text or sentences we can find sentimental information of the user and that music is closely related to the emotions and feelings of human beings [13].

As a result, there are investigations such as [8] that made use of NLP to analyze texts based on reviews made by users and recommend songs according to their sentimental information.

Therefore, although there are different studies to recommend music, many do not take into account the sentimental information of the user, which is relevant to make a more accurate recommendation and although there are studies that made use of NLP to analyze feelings, this technique could be more exploited if we start from publications, texts or opinions made within a social network, since it is often where much sentimental information is often reflected.

This paper presents a music recommendation model that makes use of social network posts to analyze sentimental information using NLP with the help of a multi-layer perceptron neural network and make music recommendations, which are linked to Spotify through its API.

The rest of the paper is organized as follows. Section II deals with the work related to this research. Section III deals with the design of the model architecture and Section IV describes our evaluation and experimental results, the conclusions are presented in the final part.

2 State of the Art

2.1 User Context Focused Models

The authors [3] proposed the MEM model that took into account the user’s context without having explicit data of the listened musical pieces. It learned from the embeddings of musical pieces from playback logs and their metadata. This method was compared with three reference methods, IPF, BRP and FISM, showing that with respect to performance the best work was done by the MEM method with relative improvements of about 96%, 69.7% and 42.6% respectively.

In the other paper [5], it is proposed to improve music recommendation by adapting collaborative filtering with context about music consumption in the recommendation process, extracting this information from playlist names and aggregating them into contextual groups. This approach showed a 33% performance improvement over collaborative filtering.

On the other hand, [6] presented an approach that took context into account so that it could infer the user’s general and contextual preferences from their playlists. This approach inferred embeddings of playback streams from the music2vec model, and users’ contextual and general preferences from their complete and recent listening logs.

To measure the performance, they compared the following methods: HRM, FPMC, IPF, BRP, FISM and UserKNN obtaining improvements around 22.2%, 32.4%, 43.5%, 97.8% and 125.6% respectively.

In similar manner, [7] presented an approach that aims to model contextual information such as feedback, time and location for music recommendation. For this purpose, they presented their HMRS recommender system that works with context and collaborative approach.

The performance of HMRS when suggesting Top-N items for (N=1,5,10,20) was measured with the Recall metric and compared to other recommendation methods such as CORLP, MGW, CF, UIG and PG managing to be superior to the other approaches at N=1, 5, 10 and 20 with a Recall greater than 0.008, 0.006, 0.014 and 0.018 respectively.

From the studies mentioned above, it was found that there are researchers such as [3, 6] that used data sources from the web music service Xiaomi music while [5, 6] used #nowplaying and last.fm data sources respectively.

In addition, [3, 6, 7] extract contextual features from playlists while [5] extracts contextual information from users’ playlist names. Investigations [5, 7] are presented as improvements of the collaborative filtering model while [3, 6] are new alternatives based on mathematical models such as graphs and vectors.

Regarding the results, investigations [3, 6] were compared with IPF, BRP and FISMAUC methods obtaining performance improvements of 69.7%, 42.6%, 96% for [3] and 43.5%, 97.8%, 70.9% for [6].

2.2 Models Focused on User Sentimental Information

Proposed a model that tried to demonstrate that if a sentiment metric does not consider the user profile, the metric cannot generate a true value of sentiment intensity, for this they used the sentiment-Br2 metric and through a correction factor they formed the eSM mathematical model [8], finally they applied the model to a mobile recommendation system, the results obtained concluded that the system achieved 91% user satisfaction.

Research [9] proposed to extract emotions at different levels of granularity 2d, 7d and 21d following the research of [15] and during different times correlating three elements: the user, the music and the users’ emotion.

For the results, they compared the proposed method (UCFE) with others such as BRP and RankALS, UCF, ICFEm RWE demonstrating better results for HiRate metric being higher than 60%, accuracy with 75% and Recall and F1 with 60%. [10] seeks to address two problems: how to distinguish sentimental texts from reviews and how to discover emotions and/or feelings in music with higher accuracy.

For this, they built a semi-supervised model where they trained a CNN to identify the reviews with sentimental information and, finally, they designed an algorithm to extract features from each song that can be used to identify sentimental information from the playlist, the results obtained by the research were 80.13% accuracy.

Authors [11] focused their research on discovering the relationship between affective Twitter hashtags with user’s music preferences. For this, they applied an unsupervised sentiment dictionary approach.

Subsequently, they used a next-generation network integration method to learn latent feature representations of users, tracks and hashtags, the results showed an MRR of 0.92 for the study.

They emphasized the factors that should be considered to obtain a higher accuracy in the affective analysis with musical approach [12] to be applied to a recommender system (RS), for this they made use of a Convolutional Neural Network and classified emotions into happiness, sadness, anger, fear and surprise, the study presented a higher accuracy than other related works, obtaining a F1 metric of 0.98 and 0.96 for the emotions of sadness and anger.

Studies [9, 10] extracted data from websites such as WEIBO and NetEase respectively. On the other hand, [11] made use of Zangerle’s #nowplaying data source, [8] collected data from forms completed by 100 people for their study and [12] collected data extracted from Twitter by programming practices.

Research [9] used the Word2Vec algorithm for word association while [8, 12] used the Sentiment-Br metric as a tool, [9] used the ICTCLA tool for word segmentation and the DUTIR and HITCIR dictionaries, while [11] used the AFINN dictionary, Opinion Lexicon, The SentiStrengthlexicon, The Vader dictionary. Studies [8, 12, 9] emphasize the intensity of emotions through the study of a correction factor for [8, 12] and the use of the study of emotion granularities for [9].

Studies [9, 10, 11] make use of natural language processing and vectors to relate users, musical pieces and words with sentimental information, while [8] makes use of natural language processing to represent words in numerical values.

On the other hand, [12] uses convolutional neural networks for the analysis of texts with sentimental information. Regarding the results, for [8, 12] users showed 78% and 97% satisfaction respectively, considering the Sentimeter-Br sentiment metric. Regarding accuracy, [9, 10, 12] obtained results of 75%, 80.13% and 98% respectively.

2.3 Models That Take Into Account Non-Sentimental and Non-Contextual Features

Authors [1] aimed to present a model that recommends novel musical pieces to the user. This was the Preference-linked and Positive Graph-Based (PPGB) algorithm, that combined two graphs to create a hybrid one that obtains novel and highly relevant recommendations.

The results show that PPGB obtained a result slightly above 40% for the F1 metric while the results for the N(L) metric were above 60%. Research [4] attempts to address the problems with collaborative and content-based filtering. For this, they proposed a recommendation method based on listening coefficients to address the above mentioned shortcomings of recommender systems when little information is available.

The metrics for the model show 0.940 for MAE, and 1.231 for RMSE. [16] proposes to analyze the listening logs to personalize the recommendation to users and help companies to improve user loyalty.

For this purpose, they propose an improved music recommendation method based on link prediction of bipartite graphs with homogeneous node similarity, presenting an improvement to the CORLP method. Finally, the Top-N experiment is used to test the performance of the proposed method.

Based on this, it was obtained that the accuracy results with N=25 showed the highest results for the proposed method with 0.2833. Apart from that, it was obtained that the Recall indicator for the proposed model shows the result of 0.0729 when N=25 and the F1 indicator for the proposed shows the result of 0.1200 when N=25.

From these studies, [1] aimed to recommend musical pieces to the user, while [4] sought to solve the shortcomings of collaborative filtering. [16] aimed to make personalized recommendations and generate loyalty with the application that provides music. Regarding the technologies used, studies [1, 16] make use of graphs to improve music recommendation, while [4] makes use of the calculation of a reproduction coefficient for this purpose.

Regarding the use of the dataset, [1] made use of their own dataset by means of a form filled by the users, while [4] made use of Last.FM dataset and [16] used data from Shrimp Music Community. Research [1] analyzed user playlists to help with the recommendation, while [4] analyzed implicit user information.

From the results, [1] obtained a result slightly above 40% for the F1 metric while the results for the N(L) metric were above 60%. On the other hand, [16] obtained 0.1200 for the F1 metric and [4] obtained 0.940 and 1.231 for the MAE and RMSE metrics respectively.

3 Architecture of the Model

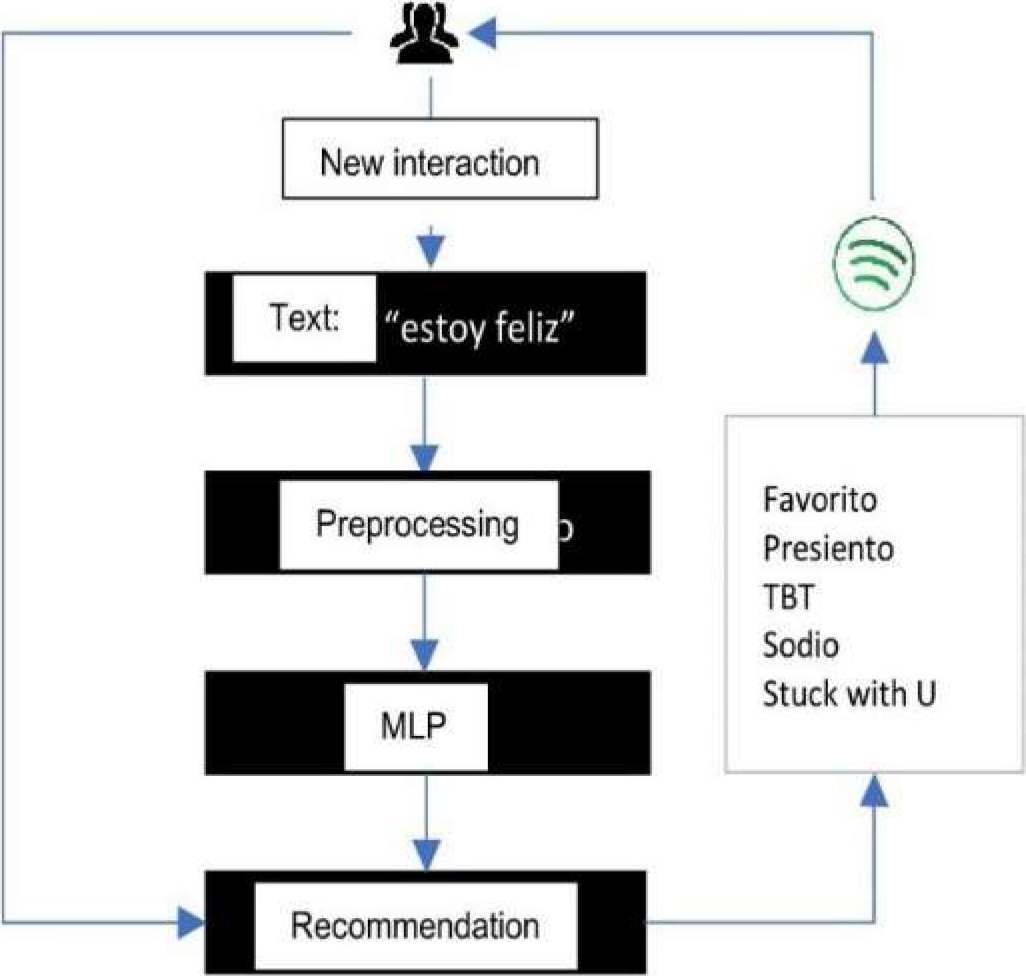

The proposed model for music recommendation from sentiment analysis in texts is defined in 4 modules: preprocessing module, sentiment analysis module, recommendation module and the user module, as shown in Fig. 1.

3.1 Dataset

The dataset used was extracted from the Taller de Análisis Semántico de la SEPLN (TASS), which contains 10,318 Spanish texts up to 240 characters, collected from Twitter and labeled with positive, negative and neutral polarity.

In addition to this, a dataset was formed from Twitter users who made posts in Spanish commenting on the song they were listening to at the time.

From this information, those data with the #nowplaying tag that had a song linked to Spotify were chosen. This collected dataset contains 4,114 songs and related texts.

3.2 Modules

3.2.1.1 Data Cleaning

Within this component the cleaning of data extracted from the TASS that are useful for the training of the Multi-Layer Perceptron (MLP) is performed as well as the cleaning of the data extracted from Twitter.

In the case of the former, the data containing a set of texts labeled with negative and positive polarity were used, leaving the data without polarity eliminated.

In the case of Twitter, data containing the #NowPlaying tag in Spanish language and with a Spotify link were used.

For the cleaning process we first made use of the Stop words dictionary included in the Python NLTK library, so that it eliminates the connecting words since they are not relevant to this research. Subsequently, information such as emails, URLs HTML tags and special characters are removed leaving a clean text ready for vectorization and sentiment analysis.

3.2.1.2 Data Vectorization

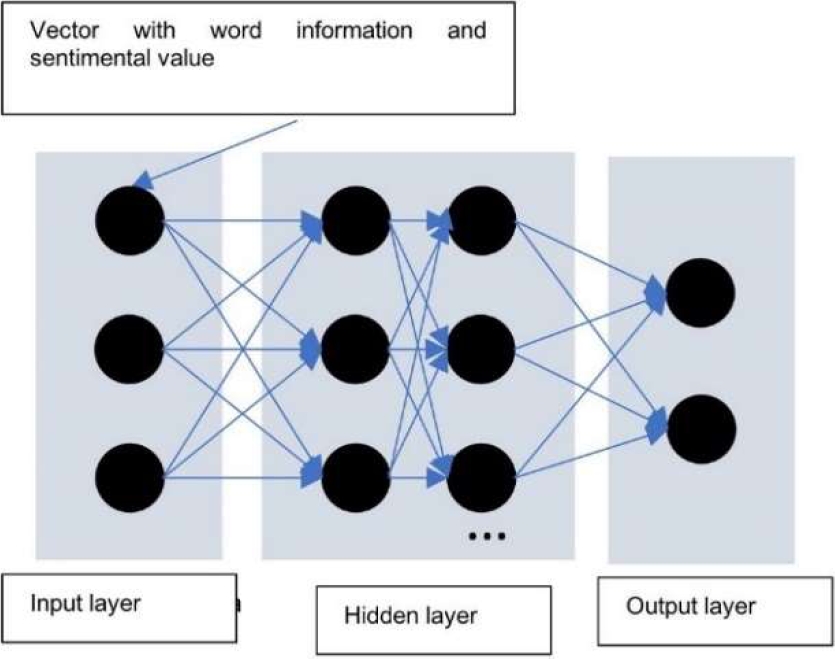

After performing data cleaning, the words were transformed into numbers. To do this, first the vectorization of each sentence collected from the dataset was performed with Word2Vec, the latter makes use of a neural network with a single hidden layer, so that its objective is to train the neural network with the sentences of the corpus (set of words in the dataset) to show us the probability that each word in the corpus has of being a neighbor of the first one.

The input to the neural network is a word represented as a “one hot vector”, that is, a vector with as many positions as the size of the vocabulary. This passes the hidden layer where word vectors with 300 features are trained.

The output of the neural network is another vector of the same size that represents the probabilities of each of the words being neighbors of the word represented in the input, thanks to the Softmax classifier that ensures that all values are between 0 and 1, which indicates the proximity to other words in the corpus, and that the sum of the values is 1 (probability distribution).

The architecture of the neural network is shown in Fig. 2.

For this research, we use the Spacy library, which offers us a Word2Vec corpus already trained with Spanish words, it returns a sentence or text represented in a vector of 300 elements.

3.2.2.1 Multi-Layer Neural Network (MLP)

Having the words represented in vectors, a Multi-Layer Perceptron (MLP) is implemented to help train a model for positive and negative sentiment classification from TASS data that are labeled according to that polarity.

This neural network receives the TASS data already vectorized and labeled as input to train the MLP Neural Network, so that 20% of the total data will be for testing and 80% for training. For this process, SKLearn was used by means of the MLPClassifier to which the number of iterations, the activation function and the optimizer are set. Its’ architecture is shown in Fig. 3.

3.2.2.2 Data Labeling

Having trained the neural network, the texts related to the songs extracted from Twitter that already passed through the preprocessing module are labeled.

Thus, all vectorized texts or sentences enter the MLP network to analyze their information and return the sentimental state of the same, either positive (P) or negative (N), which will be the sentimental state of the song to which these sentences are related. The structure of this new set of labeled data is shown in Table 1.

Table 1 Labeled Twitter dataset

| Text | Spotify Key | Vector | Polarity | |

| 1 | Estoy obsesionado | 48PBbjlk x7n3cICgAVJXR M | [0,7712, -0.123, …, 1.2343] | N |

| 2 | Te extraño | 2tFwfmc eQa1Y6nRPhYb EtC | [0.0198 , 0.125, …, -0.7322] | N |

| … . | …. | … | … | … |

| 411 4 | No arrepiento nada | 1LOuZm emeDN1SkJc9Ee mFI | [0.0128 , 0.156, …, -0.2701] | P |

3.2.3.1 Data Analysis

In this module, it receives the text obtained from the user’s answer to the question “How do you feel?”, this data is previously foes through the preprocessing and sentiment analysis module for its correct vectorization and labeling of its sentimental state. With this information obtained, it verifies within the Twitter data already labeled which are the group of songs more in line with the user’s feelings (positive or negative).

Once the correct group is available, it’s necessary to know which of all of them are the closest to the user’s response, for this purpose the Euclidean distance between vectors is user, therefore, the calculation of this is made between each vector of the approximate group of songs and the vector obtained from the user’s response, so that those that obtain the smallest Euclidean distance are the closest to the user’s sentiment. In that way, the 10 keys of closest songs can be obtained, which will be the ones finally recommended. This process is shown in Fig. 4.

3.2.3.2 Communication with Spotify

Having the keys of the songs to be recommended in this component, the communication with the Twitter API is performed using the Spotify library to extract the name, artist, duration and album to which the songs belong. These data are the ones that will be finally shown to the user.

3.2.3.3 User Module

This module serves as a communication bridge between the recommendation model and the user. It is in charge of receiving the answer to the question “How do you feel?” from the user, this information will be sent to the recommendation module to perform all the analysis work and return the data of the recommended songs, which are graphically displayed to the user for easy reproduction.

4 Results and Discussion

In this section we describe the proposed process which follows the following steps: first it goes through the Preprocessing Module, then through the Sentiment Analysis module and finally through the Recommendation and User module, all this using the datasets mentioned in Section 3.

4.1 Results

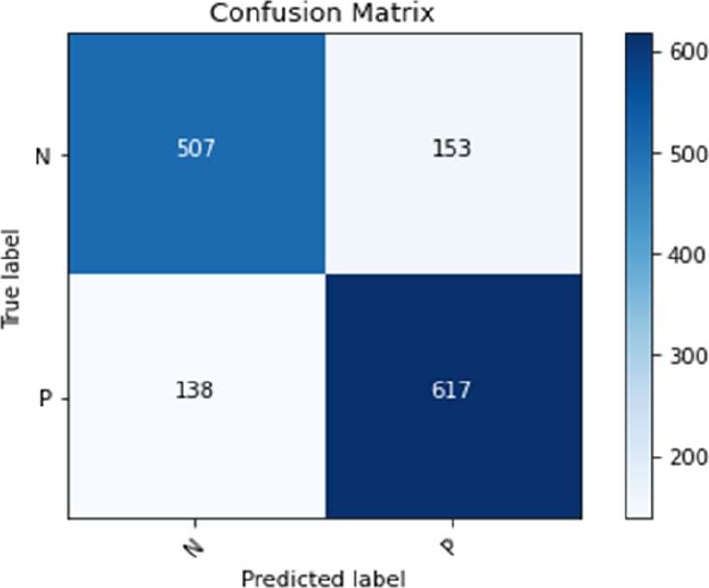

The data obtained from the TASS went through the preprocessing module and then to be used for the training of the MLP neural network which receives as input data the text vectors and their respective polarity labels. For its correct operation, these data were divided into training and test sets, having respectively 5,659 and 1,415 data, which were used for the training of the MLP. It is worth noting that the hyperparameter settings for this neural network were as follows:

As shown in Fig. 5, in the confusion matrix generated by the MLP neural network it can be seen from the 660 negative data, 507 were correctly labeled while for the 755 positive data, 617 were correctly labeled. From these results, the metrics achieved by MLP are shown in Table 2, where it can be seen that negative labeling achieved an accuracy, recall and F1 score of 79%, while positive labeling achieved 82% for the same metrics.

Table 2 Results obtained by the MLP neural network

| Accuracy | Recall | F1 | Data | |

| N | 0.79 | 0.79 | 0.79 | 660 |

| P | 0.82 | 0.82 | 0.82 | 755 |

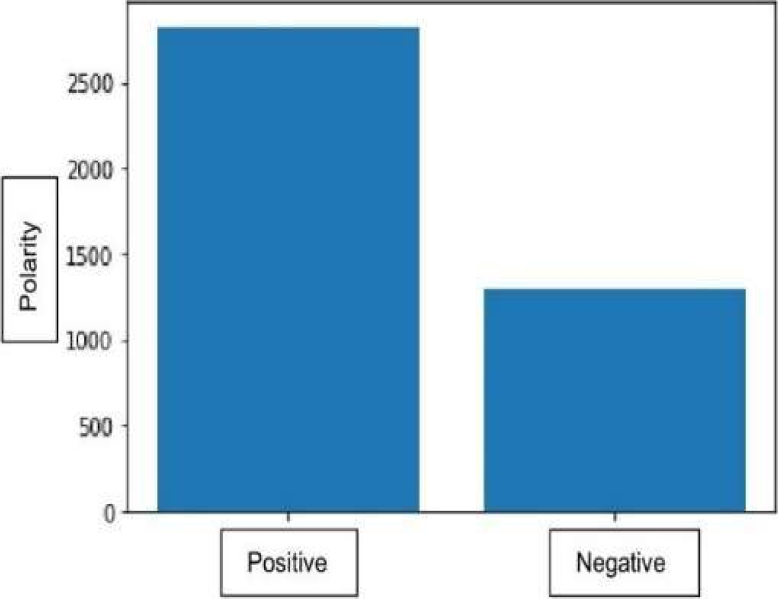

By having the MLP trained, it is possible to label the data extracted from Twitter preprocessor. These 4,114 data entries were entered into the model obtaining at the end 2,826 data with positive polarity and 1,288 with negative polarity as shown in Fig. 6.

To find the songs closest to a user within the labeled Twitter dataset, we labeled the text entered by the user in positive or negative polarity and used Euclidean distance to vectorially find the songs closest to the user’s sentimental information, thus obtaining a minimum and maximum of 906 and 3,089 respectively for the word “feliz” for example.

From these distances, the 10 songs containing the smallest distances were chosen as shown in the example in Table 3 for the word “feliz”.

Table 3 Recommendation result for the text “feliz”

| Song | Artist | |

| 1 | Somewhere Over the Rainbow | Israel Kamakawiwo'ole |

| 2 | Overprotected - Radio Edit | Britney Spears |

| … | … | … |

| 10 | Red | Taylor Swift |

To measure the results, we took the metrics according to the study of [18] so that we compared between the retrieved resources (obtained by the Euclidean distance) and the relevant resources (obtained by the calculation of Pearson’s coefficient) where accuracy is understood as the probability that a selected item is relevant or not and the Recall is understood as the probability that an item is selected. This calculation gave us a result of 0.8 for both cases.

4.2 Discussion

With the results obtained with the music recommender system, the comparative analysis is performed with the different studies that also made a model or music recommender system.

The comparison of this study with the metrics of other studies is shown in Table 4.

Of the studies presented in Table 4, [10, 9] focus on an approach related to user sentiment analysis to improve music recommendation as in our work.

However, study [1] seeks to show the user a novel music recommendation. While study [7] focuses on the user’s context to recommend music.

Regarding the dataset, study [9] used data extracted from WEIBO portal, [10] used review data extracted from NetEase Cloud, [1] extracted data from Douban Music, and [7] used Last.fm, while in this study, we used data extracted from TASS and, in addition, a new dataset was constructed from Twitter user posts.

Regarding sentiment analysis, study [9] uses dictionaries to recognize sentiment words and classifies them into different levels of granularity to generate emotion vectors, while study [10] uses Word2Vec and a Convolutional Neural Network to identify sentiment information.

In this study, like the aforementioned one, Word2Vec is used, but a Neural Network is trained with a Multi-Layer Perceptron that helps with the classification of sentimental texts.

Studies [7, 1] were the only ones to use graphs to implement music recommendation, neither of which was focused on sentiment analysis. Whereas in this research, we have used artificial intelligence algorithms to analyze user sentiments.

In this research, better results were achieved with respect to research [9] for the metrics of accuracy and Recall with 80% in both cases, since they obtained 75% and 60% for the same metrics respectively.

In the case of the study [10] a similar accuracy is achieved with a difference of 0.13%, while with respect to study [1], the accuracy with the proposed model in their research was exceeded by 20%, and with respect to [7] the same result was obtained for the Recall metric.

5 Conclusions and Future Work

In this study we used natural language processing that has allowed us to enhance the music recommendations, making it more accurate, especially at the time of understanding the user about what they think or feel, thus demonstrating a better performance with respect to those investigations that do not make use of this technique.

Despite the achievement of this research, it also has some limitations such as having a small group of songs and not considering musical genres preferred by the user, so there are points for improvement for the recommendation model for future work.

nueva página del texto (beta)

nueva página del texto (beta)