1 Introduction

Painting is considered one of the earliest forms of human artistic expression [10]. It is one of the most important manifestations of human intelligence and is therefore regarded as an intellectual activity [26].

Throughout art history, artistic styles have been defined according to their period, technique, or relevance. To classify a given painting, different visual indicators must be considered, such as the color palette, stroke style, color mixing, edge softness, lines, and scene theme, among others.

Analyzing a painting and classifying it by artistic style is considered a complicated cognitive task because of the required knowledge of art history and the number of visual cues that need to be considered [9, 33].

Since there is no simple rule to define the artistic style of a painting, classification has been performed exclusively by human experts. However, given the massively increasing volume of digitized paintings, an automatic classification system is an emerging need for properly managing such amounts of information.

The main goal of such a system is to recognize an artistic style by analyzing the visual aspects of a given painting. Automatic systems have also analyzed other forms of art. For example, Scaringella et al. [29] and Markov et al. [22] classify music pieces by their genre and Zhao et al. [34] categorize buildings by their architectural style.

In the existing literature, we can find a number of approaches for classifying paintings by artistic style. One of the first questions to answer is the type and number of visual features to analyze. Among the most popular cues included in classification systems are color and texture.

Concerning the use of color features, Gunsel et al. [12] incorporated color cues such as the percentage of dark colors, the gradient coefficient, and the luminance information to classify 5 artistic styles. The perceptual color spaces, e.g. the color spaces proposed by the Commission Internationale de l’Éclairage (CIE) such as CIE 1976 L*a*b* (

However, classifying paintings using color as the only feature may be difficult. Research has found that humans often combine multiple sensory cues to improve performance in perceptual tasks, which has motivated recent work on integrating more than one feature. In fact, human perception has been found to use color and texture information jointly [15].

The inclusion of texture cues in classification systems has been essential to determine the style of a given painting, since they capture visual features such as stroke style, relief, and the spatial relationship of colors. Diverse studies have emphasized the inclusion of texture features in combination with color indicators.

To classify 5 artistic styles, Zujovic et al. [35] used a set of features including edges, Gabor filters, and histograms of the hue/saturation/value (HSV) color space. Culjak et al. [6] considered the concatenation of 68 features to classify five different styles.

The features used include the number of edges, dark pixels, symmetry, and average values of the red/green/blue (RGB) and HSV color spaces. Guanming Lu et al. [21] proposed computing the mean and the standard deviation of each color component in four color spaces. Additionally, they proposed combining the information extracted from second-order moments and contrast measurements.

Condorovici et al. [4] used a 3D

Siddiquie et al. [31] introduced the use of Gaussian and Laplacian-of-Gaussian filters to capture the behavior of isotropic and anisotropic texture within a painting. Additionally, they used histograms of oriented gradients, edges, and color cues from diverse color spaces to classify 6 artistic styles.

Unsupervised learning, such as clustering, has also been proposed for painting classification. Liao et al. [19] proposed using color, texture, and spatial layout to classify oil painters with a clustering multiple kernel learning algorithm. Kim et al. [18] used both low-level and high-level features, such as color, shade, texture, saturation, stroke, and color balance, among others, to classify oriental paintings for wellness content recommendation services.

Considering that the combination of color and texture cues might result in a high-dimensional feature space, several recent works have focused on finding the most relevant set of features for painting classification, for example, those of Huang et al. [14], Gultepe et al. [11], and Paul et al. [27].

The search for the best set of features starts with the selection of a set of base features, which can be performed automatically or manually. Then, a feature selection process is applied to obtain the best subset of features from the initial set. Huang et al. [14] proposed using a bag-of-features based on the MPEG-7 specification.

The bag-of-features contains four descriptors, based on color and texture, which are used to generate an initial feature set for style classification. Gultepe et al. [11] used unsupervised feature learning with k-means (UFLK), principal component analysis (PCA), and raw pixels without preprocessing.

The authors classify among eight artistic styles: Baroque, Impressionism, Post-impressionism, Realism, Art Nouveau, Romanticism, Expressionism, and Renaissance. Paul et al. [27] evaluated several features for classification.

They used features such as dense scale-invariant feature transform (SIFT), dense histograms of oriented gradients computed over concatenated 2 × 2 cells (HOG2X2), local binary patterns (LBP), gradient local auto-correlation (GLAC), color naming, and GIST. In that work, they found that LBP obtained the best performance for the classification of five different styles.

Despite the remarkable performance of the approaches mentioned above, finding the proper set of features is still an open problem. Additionally, the number of artistic styles distinguished by previously proposed methods is still low compared with the number of styles in the art industry.

In this paper, we propose a system for classifying paintings by artistic style using color and texture cues in a computational intelligence approach. The proposed system operates in three stages: feature extraction, feature selection, and classification with an Artificial Neural Network (ANN).

Firstly, we select a set of color and texture features and carefully structure their combination. As mentioned before, integrating color and texture features has been essential to describe painting attributes such as stroke style, relief, and the spatial relationship of colors.

It is worth mentioning that in most of the previously reviewed approaches, texture features are computed from the luminance component or from a grayscale version of the color image. However, to obtain more visual information, in this research work we explore combining color and texture cues extracted from the color components of different perceptual color spaces. Secondly, considering that the obtained feature space is high-dimensional, we propose to reduce its dimensionality by using principal component analysis (PCA) to select the most relevant features.

The goal is a painting classification system that uses low-dimensional feature vectors without compromising robustness and accuracy. Thirdly, we propose to use a multi-layer perceptron (MLP) as the classifier. We designed the architecture of the MLP to effectively classify seven different painting styles.

The proposed method was evaluated on an extensive and challenging database of paintings designed for artistic style classification. Experiments indicate that our method attains higher accuracy than other state-of-the-art methods. Hereafter, we refer to our method as CTArt (Color and Texture for Artistic classification).

The remainder of this manuscript is structured as follows. In Section 2, we present the overall proposed classification framework, including our feature extraction, feature selection, and classification scheme. The experiments and results are given in Section 3, and the concluding remarks are presented in Section 4.

2 Methodology

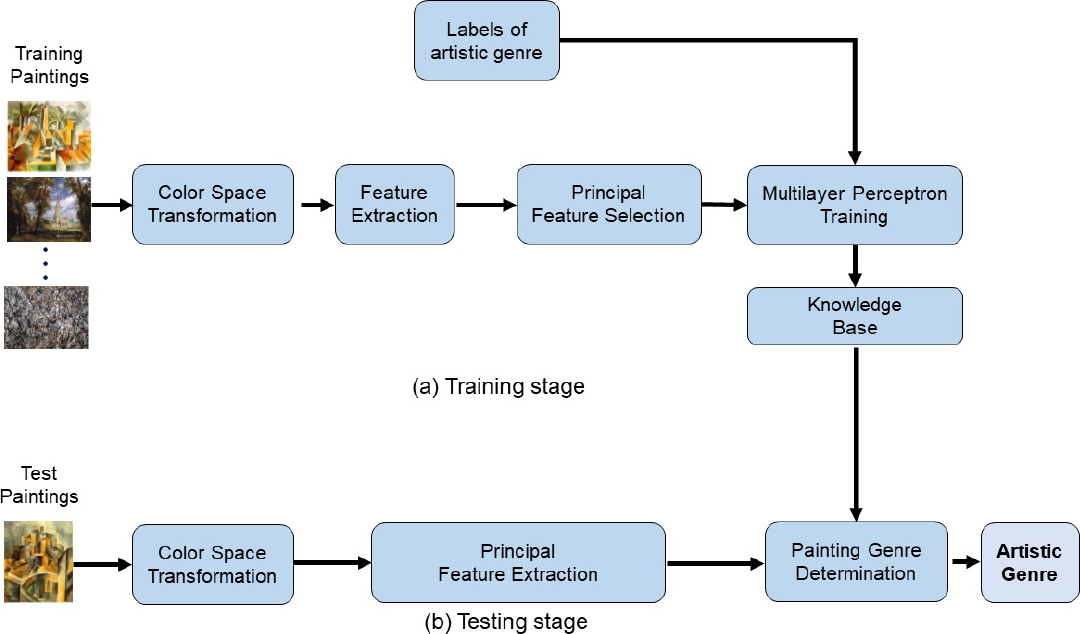

In this section, the theoretical framework of our proposed CTArt is defined. An overview of the proposed approach is illustrated in Figure 1. From this figure, we can observe that our system is divided into two stages: training and testing. In Figure 1(a), the training stage is depicted. Firstly, we perform a color space transformation of the learning images.

Fig. 1. Overview of our proposed CTArt model. (a) The learning images are transformed to other color spaces, features are extracted, and dimensionality reduction is carried out. Then, the learning process is performed using a Multilayer Perceptron. (b) The principal features of the test image are extracted. Then, the painting style is determined using the obtained model.

Later, extensive feature extraction is performed. Since the obtained set of features is high-dimensional, we use the Principal Component Analysis (PCA) to select the most discriminant features. Then, the obtained principal features are submitted to the multi-layer perceptron (MLP) algorithm for learning purposes.

The obtained model allows us to determine the artistic style of a given painting. In Figure 1(b), the process to test the model is depicted. Only the principal features are computed for a given test image. The outcome of our proposal is the artistic style of the test image. Each block of Figure 1 is detailed in the next subsections.

2.1 Color Spaces and Color Space Transformations

It is well known that the performance of a color analysis method highly depends on the choice of color space [3]. The RGB color space is the most widely used in the literature. In this color space, a particular color is specified in terms of the intensities of three additive primaries: red, green, and blue [8]. The RGB model is based on a Cartesian coordinate system whose axes correspond to these three primary spectral components.

Images in this model are formed by combining different proportions of the red, green, and blue primaries. Although the RGB space is the most used in the literature, this representation does not emulate the higher-level processes that underlie color perception in the human visual system [13].

Several studies have sought the best-suited color representation for approaches in which color is the only feature used [5]. Some of them found that, for color-alone methods, the so-called perceptual color spaces are the most appropriate.

In our proposal, in order to resemble the human perception of colors, we explore the extraction of features in different color spaces. Hence, in addition to the RGB representation, we explore perceptual color spaces, such as

It was proposed in 1976 by the CIE [23] as an approximation to a uniform color space. The

They represent, respectively, luminosity, hue from red to green, and hue from yellow to blue. The

where

where

The tristimulus values

where
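The transformation from RGB to CIE 1976 L*a*b* discussed above can be sketched as follows. Since the conversion equations are not reproduced in this text, the sketch assumes 8-bit sRGB input, the standard linear sRGB-to-XYZ matrix, and a D65 reference white normalised so that Yn = 1; the reference white used in the paper may differ, and the function names are hypothetical. In the result, a* runs from green (negative) to red (positive) and b* from blue (negative) to yellow (positive).

```python
def srgb_to_linear(c: float) -> float:
    """Undo the sRGB gamma curve for one channel value in [0, 1]."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def rgb_to_xyz(r: int, g: int, b: int) -> tuple:
    """Convert an 8-bit sRGB pixel to CIE XYZ tristimulus values (assumed D65)."""
    rl, gl, bl = (srgb_to_linear(v / 255.0) for v in (r, g, b))
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl
    return x, y, z


# Assumed reference white: D65, normalised so that Yn = 1.
XN, YN, ZN = 0.95047, 1.0, 1.08883


def _f(t: float) -> float:
    """CIE companding function with its linear segment near zero."""
    delta = 6.0 / 29.0
    if t > delta ** 3:
        return t ** (1.0 / 3.0)
    return t / (3.0 * delta ** 2) + 4.0 / 29.0


def rgb_to_lab(r: int, g: int, b: int) -> tuple:
    """Return (L*, a*, b*) for an 8-bit sRGB pixel."""
    x, y, z = rgb_to_xyz(r, g, b)
    fx, fy, fz = _f(x / XN), _f(y / YN), _f(z / ZN)
    lightness = 116.0 * fy - 16.0
    a_star = 500.0 * (fx - fy)   # green (-) to red (+)
    b_star = 200.0 * (fy - fz)   # blue (-) to yellow (+)
    return lightness, a_star, b_star
```

For example, `rgb_to_lab(255, 255, 255)` returns values very close to (100, 0, 0), and `rgb_to_lab(0, 0, 0)` returns L* = 0.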

2.2 Color Features

In order to clearly define the color information used by our proposed system, we consider the set of color components such as

Considering that the color palette used by the artist is one of the essential features to categorize a painting [6], we include the 7 most important colors within the image in our set of color features. These colors are selected in the RGB space by using the k-means algorithm [20], which is defined in Eq. 15:

where
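Eq. 15 is not reproduced in this text; k-means in general alternates between assigning each sample to its nearest centroid and moving each centroid to the mean of its samples, which minimises the within-cluster sum of squared distances. The sketch below extracts the dominant colors of an image in this way, assuming pixels are given as RGB tuples and using a deterministic initialisation (the first k distinct pixel values) so that results are reproducible. The paper's initialisation, number of iterations, and ordering of the 7 colors are not specified here, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Color = Tuple[float, ...]


def _sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two colors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def dominant_colors(pixels: List[Color], k: int = 7, iters: int = 50) -> List[Color]:
    """Lloyd's k-means on RGB pixels; centroids returned largest cluster first."""
    # Deterministic initialisation: the first k distinct pixel values (assumed).
    centroids: List[Color] = []
    for p in pixels:
        if p not in centroids:
            centroids.append(p)
            if len(centroids) == k:
                break
    counts = [0] * len(centroids)
    for _ in range(iters):
        # Assignment step: attach every pixel to its nearest centroid.
        sums = [[0.0] * len(c) for c in centroids]
        counts = [0] * len(centroids)
        for p in pixels:
            j = min(range(len(centroids)), key=lambda i: _sq_dist(p, centroids[i]))
            counts[j] += 1
            for ch, v in enumerate(p):
                sums[j][ch] += v
        # Update step: move each centroid to the mean of its members.
        new = [tuple(s / counts[j] for s in sums[j]) if counts[j] else centroids[j]
               for j in range(len(centroids))]
        if new == centroids:
            break
        centroids = new
    # Order by cluster size as counted in the last assignment step.
    order = sorted(range(len(centroids)), key=lambda j: -counts[j])
    return [centroids[j] for j in order]
```

If an image has fewer than k distinct colors, fewer than k centroids are returned. Flattening the centroids (7 × 3 values) is one possible way to append them to the feature vector.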

2.3 Texture Features

Visual texture is a perceived property of the surface of all objects, and it is a significant cue for their characterization and discrimination. A number of perceived qualities play an important role in describing visual texture.

In artistic painting, texture is of great importance, since it gives hints about the stroke, color contrast, edge softness, lines, etc. In our methodology, we propose to use two of the most widely used texture descriptors: Sum and Difference Histograms and Local Binary Patterns.

These texture features, described in the following paragraphs, have proven to be highly discriminant and robust in diverse classification tasks.

On the one hand, we use a subset of the statistical features extracted from the Sum and Difference Histograms (SDH) proposed by Unser [32]. The SDH establishes a numeric relation between two different pixels separated by a given distance

The non-normalized sums and differences, associated with relative displacement

The histograms of sums and differences with parameter

Then, the normalized sum and difference histograms are defined in Eqs. 21 and 22:

From the set of features proposed by Unser, we only used a subset of seven features defined in Eqs. 23-29:
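Since the SDH equations are not reproduced in this text, the following sketch shows how the non-normalized sum and difference histograms are built for one displacement (d1, d2) on a single integer-valued channel, normalized by the number of pixel pairs, and turned into seven of Unser's classic features: mean, variance, energy, correlation, entropy, contrast, and homogeneity. Which seven features the paper actually uses, as well as its displacements and quantization, are assumptions here, and the function name is hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def sdh_features(img: List[List[int]], d1: int = 1, d2: int = 0) -> Dict[str, float]:
    """Unser sum/difference histogram features for displacement (d1, d2).

    img is a 2-D list of integer intensities (one color channel); d1 is the
    column offset and d2 the row offset (both non-negative in this sketch).
    """
    rows, cols = len(img), len(img[0])
    sums: Counter = Counter()
    diffs: Counter = Counter()
    for k in range(rows - d2):
        for l in range(cols - d1):
            a, b = img[k][l], img[k + d2][l + d1]
            sums[a + b] += 1      # non-normalized sum histogram
            diffs[a - b] += 1     # non-normalized difference histogram
    n = sum(sums.values())       # number of pixel pairs considered
    ps = {i: c / n for i, c in sums.items()}   # normalized sum histogram
    pd = {j: c / n for j, c in diffs.items()}  # normalized difference histogram

    mean = 0.5 * sum(i * p for i, p in ps.items())
    s_spread = sum((i - 2 * mean) ** 2 * p for i, p in ps.items())
    contrast = sum(j * j * p for j, p in pd.items())
    return {
        "mean": mean,
        "variance": 0.5 * (s_spread + contrast),
        "energy": sum(p * p for p in ps.values()) * sum(p * p for p in pd.values()),
        "correlation": 0.5 * (s_spread - contrast),
        "entropy": -sum(p * math.log(p) for p in ps.values())
                   - sum(p * math.log(p) for p in pd.values()),
        "contrast": contrast,
        "homogeneity": sum(p / (1 + j * j) for j, p in pd.items()),
    }
```

Only non-empty histogram bins are stored, so the logarithm in the entropy term is always defined. Repeating the computation for several displacements and for every color channel would yield a per-channel SDH texture vector.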

On the other hand, we include in our proposed set of features the local binary pattern (LBP) descriptor proposed by Ojala et al. [24, 25]. LBP is a theoretically simple yet efficient approach to characterize the spatial structure of local texture.

The LBP operator yields a distribution that describes local texture. According to Ojala, a monochrome texture image

The LBP value for the pixel

A number of variants of the LBP descriptor have been proposed over the years, and LBP has become one of the most widely used texture descriptors in diverse applications. In our approach, we propose to use the rotation-invariant uniform operator (riu2), in which a pattern is considered uniform when its circular bit string has at most two 0/1 transitions:
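Since the LBP equations are not reproduced in this text, the following minimal sketch illustrates the riu2 operator. For simplicity it assumes P = 8 neighbours at radius R = 1 read directly from the 3 × 3 neighbourhood (the original operator samples P points on a circle, interpolating where needed), skips border pixels, and thresholds with s(x) = 1 for x ≥ 0. Uniform patterns (U ≤ 2) are labelled by their number of ones, and all other patterns share the label P + 1, so the histogram has P + 2 bins (10 for P = 8, 18 for P = 16). The function names are hypothetical.

```python
from typing import List

# 8 neighbours at radius 1, visited in circular order starting east.
OFFSETS = [(0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1), (-1, 0), (-1, 1)]


def lbp_riu2_code(img: List[List[int]], r: int, c: int) -> int:
    """Rotation-invariant uniform LBP label (P = 8, R = 1) of pixel (r, c)."""
    center = img[r][c]
    # s(g_p - g_c): 1 if the neighbour is at least as bright as the centre.
    bits = [1 if img[r + dr][c + dc] >= center else 0 for dr, dc in OFFSETS]
    p = len(bits)
    # U: number of 0/1 transitions around the circular bit pattern.
    u = sum(bits[i] != bits[(i + 1) % p] for i in range(p))
    # Uniform patterns are labelled by their count of ones; others share P + 1.
    return sum(bits) if u <= 2 else p + 1


def lbp_riu2_histogram(img: List[List[int]]) -> List[float]:
    """Normalized histogram of riu2 labels over interior pixels (P + 2 bins)."""
    hist = [0] * (len(OFFSETS) + 2)
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[lbp_riu2_code(img, r, c)] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else [0.0] * len(hist)
```

Because the label depends only on the number of ones in a uniform pattern, rotating the neighbourhood leaves it unchanged: a bright edge above the centre and the same edge rotated to the right both receive label 3.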

As mentioned before, the joint use of color and texture information has strong links with human perception, and in many applications color-alone or texture-alone information is not sufficient to describe an artistic painting.

Ilea and Whelan [15] found that two families of methods are more promising for applications where links with human perception are assumed: (i) algorithms that integrate color and texture attributes in succession, and (ii) methods that extract color and texture features on independent channels and then combine them using various integration schemes.

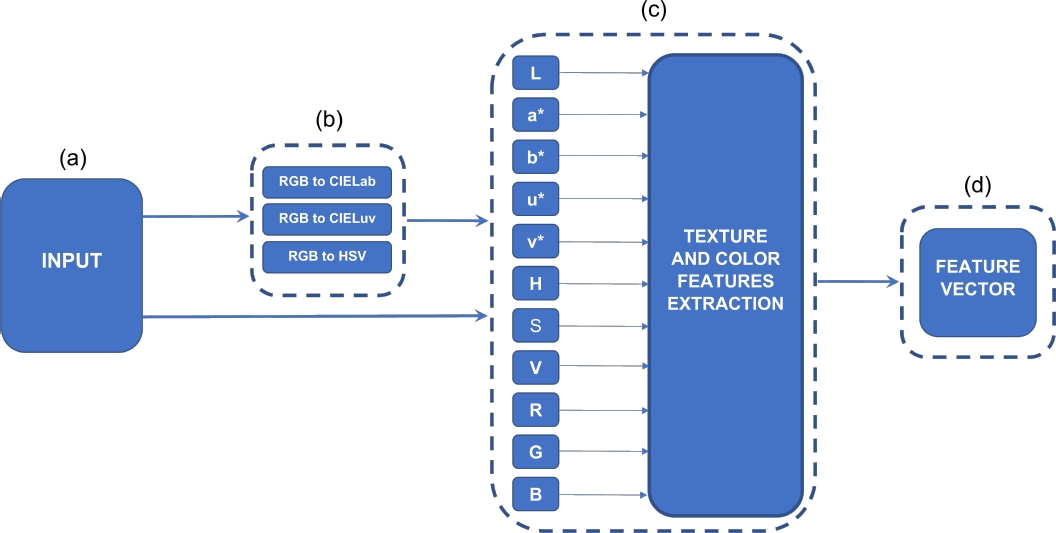

Taking this into account, in our study, we obtain the texture features from each color channel of the painting images transformed to the

Additionally, we obtain 10 and 18 features from the

Fig. 2. Procedure of color and texture feature extraction. (a) The input image in RGB color space. (b) Transformation of the RGB input image into 3 color spaces such as

Firstly, we transform the original RGB image into 3 perceptual color spaces. Secondly, from each color component, we obtain color features and texture features in cascade. The concatenation of both sets of features results in a feature vector of 428 dimensions.
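The cascade described above can be sketched as a loop over color spaces and channels in which the color and texture descriptors of each channel are appended to a single vector. The two per-channel extractors below are deliberately simple, hypothetical stand-ins (channel mean and standard deviation as "color" features, mean absolute horizontal difference as a "texture" feature); in CTArt those slots would hold the color features of Section 2.2 and the SDH and LBP features of Section 2.3. How the 428 dimensions break down per channel is not reproduced in this text.

```python
import statistics
from typing import Callable, Dict, List

Channel = List[List[float]]
Extractor = Callable[[Channel], List[float]]


def color_stats(ch: Channel) -> List[float]:
    """Hypothetical color features: mean and population std of one channel."""
    values = [v for row in ch for v in row]
    return [statistics.fmean(values), statistics.pstdev(values)]


def mean_abs_hdiff(ch: Channel) -> List[float]:
    """Hypothetical texture stand-in: mean absolute horizontal neighbour difference."""
    diffs = [abs(row[i + 1] - row[i]) for row in ch for i in range(len(row) - 1)]
    return [statistics.fmean(diffs)]


def cascade_features(spaces: Dict[str, List[Channel]],
                     extractors: List[Extractor]) -> List[float]:
    """For every channel of every color space, append color then texture features."""
    vector: List[float] = []
    for name in sorted(spaces):           # fixed order keeps dimensions aligned
        for channel in spaces[name]:
            for extract in extractors:    # color features first, then texture
                vector.extend(extract(channel))
    return vector
```

With C channels in total and d features per channel, the concatenated vector has C · d entries; fixing the iteration order ensures that each position in the vector means the same thing for every painting.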

2.4 Principal Component Analysis

High-dimensional feature vectors are common in many applications and are often difficult to analyze. To interpret and classify such vectors, most methods require reducing their dimensionality in such a way that the most relevant information in the data is preserved.

Several techniques have been developed for this purpose, among which principal component analysis (PCA) has become a classic. Its main goal is to reduce the dimensionality of a feature vector while preserving as much statistical information as possible [16].

PCA obtains the most relevant features through a linear transformation of correlated variables. Firstly, the feature data are normalized: the mean is subtracted from the data, and the result is divided by the standard deviation.

Secondly, as in Eq. 32, the singular value decomposition of the covariance matrix of the normalized data is computed, yielding the eigenvalues and eigenvectors of the covariance matrix. Thirdly, the eigenvalues are sorted in decreasing order, which corresponds to decreasing variance in the data [17].

Principal components are obtained by multiplying the original normalized data by the leading eigenvectors, whose exact number is a user-defined parameter. Lastly, the high-dimensional feature vector is represented by relatively few uncorrelated principal components, which are later used in the learning process. In this paper, we reduce the original 428-dimensional feature vector to a 168-dimensional vector:

where
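The PCA steps above (normalization, eigendecomposition of the covariance matrix, sorting by eigenvalue, and projection onto the leading eigenvectors) can be sketched in pure Python. To stay self-contained, the sketch uses the cyclic Jacobi eigenvalue method on the symmetric covariance matrix instead of a library SVD; for a symmetric positive semi-definite matrix both give the same eigenvalues and, up to sign, the same eigenvectors. In CTArt the input would be the 428-dimensional training vectors with k = 168; the function names are hypothetical, and for data of that size a linear-algebra library would be the practical choice.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def standardize(X: Matrix) -> Matrix:
    """Subtract each column's mean and divide by its (population) std."""
    n, d = len(X), len(X[0])
    mu = [sum(row[j] for row in X) / n for j in range(d)]
    # `or 1.0` leaves constant columns at zero instead of dividing by zero.
    sd = [math.sqrt(sum((row[j] - mu[j]) ** 2 for row in X) / n) or 1.0
          for j in range(d)]
    return [[(row[j] - mu[j]) / sd[j] for j in range(d)] for row in X]


def jacobi_eigh(A: Matrix, sweeps: int = 50, tol: float = 1e-20) -> Tuple[List[float], Matrix]:
    """Eigenvalues and eigenvectors (columns of V) of a symmetric matrix."""
    d = len(A)
    A = [row[:] for row in A]
    V = [[float(i == j) for j in range(d)] for i in range(d)]
    for _ in range(sweeps):
        if sum(A[i][j] ** 2 for i in range(d) for j in range(d) if i != j) < tol:
            break
        for p in range(d - 1):
            for q in range(p + 1, d):
                if A[p][q] == 0.0:
                    continue
                # Angle of the plane rotation J that zeroes A[p][q].
                theta = 0.5 * math.atan2(2.0 * A[p][q], A[q][q] - A[p][p])
                c, s = math.cos(theta), math.sin(theta)
                for k in range(d):  # A <- A J and V <- V J (columns p, q)
                    akp, akq = A[k][p], A[k][q]
                    A[k][p], A[k][q] = c * akp - s * akq, s * akp + c * akq
                    vkp, vkq = V[k][p], V[k][q]
                    V[k][p], V[k][q] = c * vkp - s * vkq, s * vkp + c * vkq
                for k in range(d):  # A <- J^T A (rows p, q)
                    apk, aqk = A[p][k], A[q][k]
                    A[p][k], A[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return [A[i][i] for i in range(d)], V


def pca(X: Matrix, k: int) -> Tuple[Matrix, List[float]]:
    """Project the standardized rows of X onto the k leading principal axes."""
    Z = standardize(X)
    n, d = len(Z), len(Z[0])
    # Covariance of standardized data (i.e. the correlation matrix).
    cov = [[sum(z[i] * z[j] for z in Z) / n for j in range(d)] for i in range(d)]
    vals, V = jacobi_eigh(cov)
    # Sort eigenvalues in decreasing order and keep the k leading eigenvectors.
    order = sorted(range(d), key=lambda i: vals[i], reverse=True)[:k]
    scores = [[sum(z[i] * V[i][j] for i in range(d)) for j in order] for z in Z]
    return scores, [vals[j] for j in order]
```

For two perfectly correlated features, for example, a single component with eigenvalue 2 carries all of the variance of the two standardized columns, so k = 1 loses no information.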

2.5 Classification Approach Using Artificial Neural Networks

Artificial neural networks (ANNs) are widely used for classification in diverse applications. Recent studies have found that neural network models, such as feedforward and feedback propagation ANNs, perform better on problems where strong links with human perception are assumed [1].

There are several ANN architectures; one of the best known is the multilayer perceptron (MLP), which maps the input features to a set of outputs. Information in the MLP flows from the input layer of neurons, through the hidden layer, to the output nodes.

In general, each layer of the MLP applies a nonlinear transformation to weighted sums of the outputs of the previous layer. The MLP is typically trained with backpropagation, a supervised learning method.

The backpropagation algorithm maps the input data to the required outputs by reducing the error between the target outputs and the computed outputs [7]. In this paper, our CTArt approach consists of an ANN with MLP architecture which contains 168 input neurons, 88 neurons in the hidden layer, and 7 neurons at the output.

To determine the best number of hidden neurons for our proposed network, we carried out preliminary experiments varying the number of neurons in the hidden layer within a bounded range.

We found that 88 hidden neurons produce the best result. The main goal of the proposed architecture is to classify artistic paintings into their corresponding artistic styles.

Each artistic style is associated with an output node. The sigmoid activation function (S), defined in Eq. 33, is used for the neurons in the hidden and output layers, where the weighted-input summation to the nodes is represented by

The Levenberg–Marquardt learning method was used in the backpropagation algorithm to train the network offline, with randomly set initial weights and biases, and a mean square error of 1 × 10⁻⁸ as the learning rate (
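The 168-88-7 architecture with sigmoid units in the hidden and output layers can be sketched as a forward pass in which the predicted style is the output node with the largest activation. This sketch only illustrates the forward computation and the mean-squared-error objective with random weights; it does not implement Levenberg–Marquardt training, and the weight initialization range, class ordering, and function names are assumptions.

```python
import math
import random
from typing import List, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights[out][in], biases[out])


def sigmoid(x: float) -> float:
    """Logistic sigmoid S(x) = 1 / (1 + exp(-x)), computed without overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Random weights and biases in [-0.5, 0.5] (an assumed range)."""
    w = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    b = [rng.uniform(-0.5, 0.5) for _ in range(n_out)]
    return w, b


def forward(x: List[float], layers: List[Layer]) -> List[float]:
    """Weighted summation followed by the sigmoid, layer by layer."""
    for w, b in layers:
        x = [sigmoid(sum(wi * xi for wi, xi in zip(row, x)) + bj)
             for row, bj in zip(w, b)]
    return x


def mse(output: List[float], target: List[float]) -> float:
    """Mean squared error between the network output and a one-hot target."""
    return sum((o - t) ** 2 for o, t in zip(output, target)) / len(target)


rng = random.Random(0)
net = [init_layer(168, 88, rng), init_layer(88, 7, rng)]
features = [rng.gauss(0.0, 1.0) for _ in range(168)]  # stand-in for a PCA vector
scores = forward(features, net)
predicted_style = max(range(len(scores)), key=scores.__getitem__)
```

Training would adjust the weights in `net` to minimise `mse` over the labelled training set; the Levenberg–Marquardt update itself is omitted from this sketch.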

3 Experimental Results

In this section, we present the experimental setup, including the dataset, the parameter settings, and the performance metrics used. Additionally, we compare the results obtained by our proposed CTArt system with those of state-of-the-art methods.

To perform an objective comparison, we directly compare our method with the approach recently introduced by Huang et al. [14], which uses self-adaptive harmony search (SAHS) for feature reduction and a support vector machine (SVM) to classify 6 artistic styles.

The comparison is performed on the same dataset, which includes the following artistic styles: Cubism, Fauvism, Impressionism, Naïve art, Pointillism, and Realism. Additionally, we follow the evaluation methodology of Huang et al. [14], which proposes the following performance metrics for each artistic style: the Normalized Improvement Ratio (NIR), the Absolute Improvement Ratio (AIR), and Accuracy. NIR is defined in Eq. 34, and AIR is defined as the numerator of NIR. Accuracy is defined in Eq. 35:

where recall is the ratio of correctly identified paintings for a style, and

where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively. The experimental results are shown in Table 1. From this table, we can observe that our method attains

Table 1 Comparison with Huang et al. [14]

| Method | Feature Vector Dimension | Styles | Feature Reduction Approach | Classifier | Accuracy |
|---|---|---|---|---|---|
| Huang et al. [14] | 186 | 6 | SAHS | SVM | 69.80% |
| CTArt | 168 | 6 | PCA | ANN | 74.57% |

On the other hand, Huang et al. [14] attain a lower accuracy of

In Table 2, we present the results of the remaining metrics (recall, AIR, and NIR) for each artistic style. From this table, we can observe that, on average, our proposal outperforms the approach of Huang et al. on all metrics.

Table 2 Performance comparison of the method of Huang et al. [14] and our proposal on the same dataset

| Artistic style | Huang Recall (%) | Huang AIR (%) | Huang NIR (%) | CTArt Recall (%) | CTArt AIR (%) | CTArt NIR (%) |
|---|---|---|---|---|---|---|
| Cubism | 77.30 | 60.60 | 72.70 | 30.00 | 13.33 | 16.00 |
| Fauvism | 54.50 | 37.83 | 45.40 | 83.00 | 66.33 | 79.60 |
| Impressionism | 72.70 | 56.03 | 67.20 | 67.00 | 50.33 | 60.40 |
| Naïve art | 75.00 | 58.33 | 70.00 | 80.00 | 63.33 | 76.00 |
| Pointillism | 72.70 | 56.03 | 67.24 | 90.00 | 73.33 | 88.00 |
| Realism | 68.20 | 51.50 | 61.80 | 88.00 | 71.33 | 85.60 |
| Average | 70.06 | 53.38 | 64.05 | 73.00 | 56.33 | 67.60 |

Our proposal attains a higher recall of
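Eqs. 34 and 35 are not reproduced in this text. The sketch below assumes that NIR rescales the per-style recall relative to the chance level 1/N for N styles, NIR = (recall − 1/N) / (1 − 1/N), with AIR = recall − 1/N as its numerator; this form reproduces the per-style AIR and NIR values of Table 2 for N = 6 (for example, a recall of 30.00% gives AIR = 13.33% and NIR = 16.00%). Accuracy uses the standard (TP + TN) / (TP + TN + FP + FN). The function names are hypothetical.

```python
def air(recall: float, n_styles: int) -> float:
    """Absolute improvement over chance: recall - 1/N (fractions in [0, 1])."""
    return recall - 1.0 / n_styles


def nir(recall: float, n_styles: int) -> float:
    """Improvement over chance normalized by the maximum possible improvement."""
    chance = 1.0 / n_styles
    return (recall - chance) / (1.0 - chance)


def recall(tp: int, fn: int) -> float:
    """Fraction of paintings of a style that were correctly identified."""
    return tp / (tp + fn)


def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Fraction of correct decisions among all decisions."""
    return (tp + tn) / (tp + tn + fp + fn)
```

Under this form, a classifier at chance level (recall = 1/N) scores NIR = 0, and a perfect one scores NIR = 1, which makes the metric comparable across datasets with different numbers of styles.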

4 Conclusions

In this paper, we propose combining color and texture features to classify paintings by artistic style. We found that combining color information with texture cues improves the classification rate of artistic paintings by style.

The proposed color and texture features are extracted from each color component of different color representations. Considering that the resulting feature vector is high-dimensional, we propose using PCA for feature reduction.

As a classifier, we propose using an MLP, a widely known classification approach. CTArt was evaluated on a challenging database. Quantitative results indicate that CTArt is robust in discriminating among 6 artistic styles, and it has been shown to be more accurate than other state-of-the-art approaches.
