1 Introduction

Multimodality is a powerful paradigm that increases the realism and ease of interaction in a Virtual Reality (VR) environment. Finding 3D interaction techniques adapted to the requirements of the user, for tasks such as navigation, is an important step toward a future 3D interaction system that supports multimodality and thereby increases efficiency and usability [3].

To better understand the requirements of the user interface, it is important to start by identifying the frequent and significant tasks carried out in VR. These tasks are defined as coordinated or logical sequences of actions and can be shared by different applications; they can, in turn, be broken down into elementary actions.

For example, Wüthrich [18] identifies three types of elementary actions: select, position, and deform. Since a virtual environment covers more space than can be seen from a single angle, users should be able to navigate efficiently within the environment in order to obtain different views of the scene. In fact, a 3D world is only as useful as the user's ability to move around and interact with the information within it. In this work we focus on the navigation task, which is the most commonly used task in VR [4]; we do not consider secondary tasks such as selection and manipulation.

Navigation in VR gives the user the feeling of easy and intuitive movement within a virtual world. Work on good 3D navigation typically falls into two categories: research to understand the cognitive principles behind navigation, and design work to create task- and application-specific navigation techniques. With the massive use of Natural User Interfaces (NUI), such as gesture-based and vocal interaction, among others, we have observed that they are not as natural as the name suggests, because the mental model of the interaction differs for each individual. Even if users define their own natural language, the problem becomes the workload and complexity of remembering the commands they just created: it is a whole new vocabulary. This is even worse because the language is totally new each time a user tries new software. We have seen this in a small test of Kinect® videogames, where sometimes it is really hard just to start playing.

In this work we therefore ask the research question: is natural interaction really natural? We explore the question around a virtual reality task and compare the performance of users interacting with a system through natural gesture-based interaction. The experiments compare traditional, well-known metaphors with novel gesture-based ones in order to draw some conclusions about natural interaction.

The rest of the article is structured as follows. The next section discusses navigation in virtual environments and natural user interfaces as a way to interact with a virtual environment. Section 3 introduces the techniques used to create the virtual environment. Section 4 describes the experimental setup and discusses the results of the experiments. Finally, we conclude this work and introduce future work.

2 Related Work

Many navigation techniques have been developed previously, although they are highly dependent on hardware interfaces. These techniques are efficient for isolated navigation tasks, but they become less practical when we consider the global set of actions in VR (including navigation, selection and manipulation tasks): different devices may be necessary, users need to switch between them according to the task, and handling these devices may itself be difficult for users. A proposed model of 3D interaction that takes these observations into account is the Fly Over technique [3], which has the following features: it is compatible with all 2D, 3D and 6D (3D position and orientation) devices, such as a mouse or head/hand/finger tracking, which can return a 2D or 3D position/orientation of a user or manipulated object; it maintains the same logic of use across all devices; it is intuitive; and it requires only a short training period. Figure 9 shows the two interaction areas, Z1 and Z2, of the Fly Over technique, designed for a 6D task in a virtual environment [3].

Another remarkable interaction technique is the Visual Interaction Platform (VIP) [1], an augmented reality platform that supports different interaction techniques such as writing, drawing, manipulating and navigating in 2D and 3D environments. Figure 10 shows an example of the VIP technique implemented with its hardware, which includes an LCD projector used to create a computer with a large workspace on a flat surface that contains a digitizing tablet.

It is also worth highlighting the work in [15], which creates a taxonomy that categorizes existing navigation techniques and uses it to create preliminary structures of navigation tasks, allowing the creation of new techniques.

3 Navigation in a Virtual Campus with Natural User Interfaces

Maps are a useful tool for people in different ways. Maps were initially made with the purpose of knowing the world, but today they are an important source of information. More specifically, their usefulness stands out when it comes to finding a particular place, or becoming familiar with an environment to make the actual tour easier. Some maps are also drawn to scale, which makes it possible to take measurements on the map that relate to real-world measurements.

Maps have gone through different stages in terms of their creation: initially they were only traces in the sand or the earth, but they have evolved into really sophisticated digital maps.

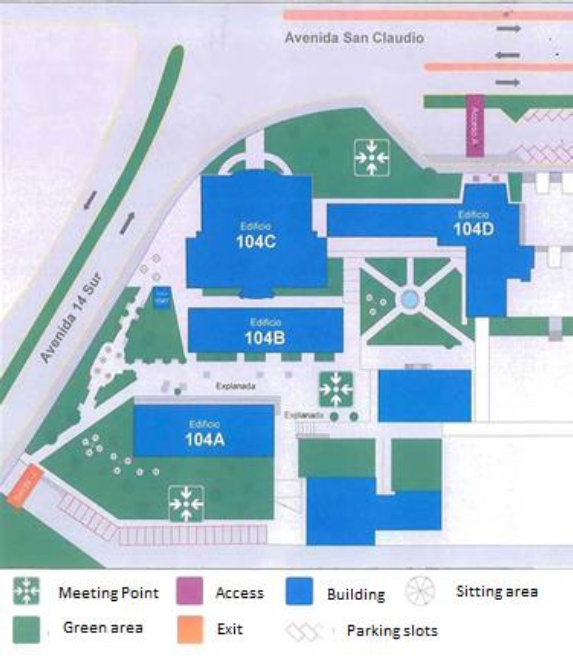

To test different interaction techniques, a virtual campus of the University of Puebla was built. We chose this scenario because people who are unfamiliar with a place, and are looking for a particular location for the first time, have the problem that when they arrive they do not know where to go. The project consists of 3D models to scale that realistically represent a university campus. It targets not only the students of the university but also everyone interested in knowing more about the campus, who can travel through it virtually to find sites of interest, or simply walk between its buildings and tour the different faculties. Although the process of creating a virtual campus may seem straightforward, this is not the case. The first step was to build the 3D models of the different buildings; around 150 buildings were needed, as well as roads and objects such as cars, people, buses and trees. Even though this section uses well-known 3D modeling techniques, we consider that a reader who is new to this field may find it useful to read how these worlds were built.

3.1 Routing Set Up

Once the virtual world was built, all the objects needed to be indexed so that a user can locate important places and find the fastest roads to reach a destination. This could be done with the help of different interaction techniques. However, it was first necessary to obtain and digitize the coordinates of every object inside the university campus, as well as the roads available for walking. Each building was stored with the following description: name, official nomenclature, colloquial name (if any), and geographic coordinates (latitude and longitude). We captured GPS coordinates with a mobile device, using the app AndroidTS GPS® (see Figure 1) running on the Android operating system. Thanks to this application we efficiently stored and labeled all important buildings within the university campus. It was necessary not just to map buildings but also to identify walking routes on the campus. A series of interviews was also conducted to collect the colloquial names of the buildings. Finally, all this information was stored for a total of 200 key places within the campus, which included buildings for academic, administrative and sport activities, food places, plazas and important parks, among other highlights.
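As an illustration of the record described above, the following minimal sketch stores one place with the listed fields and builds a lookup by any of its names. The class name `Place`, the helper `build_name_index` and the sample entries (including their coordinates and codes) are hypothetical and are not taken from the project data.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Place:
    """One indexed campus location, with the fields listed in the text."""
    name: str
    official_nomenclature: str
    latitude: float
    longitude: float
    colloquial_name: Optional[str] = None  # only some places have one


def build_name_index(places: List[Place]) -> Dict[str, Place]:
    """Map every known name (lower-cased) to its place record."""
    index: Dict[str, Place] = {}
    for place in places:
        labels = (place.name, place.official_nomenclature, place.colloquial_name)
        for label in labels:
            if label:
                index[label.lower()] = place
    return index


# Made-up sample entries; coordinates are placeholders, not survey data.
SAMPLE_PLACES = [
    Place("Computer Science Faculty", "CS-1", 19.0, -98.2, "Compu"),
    Place("Central Library", "LIB-1", 19.001, -98.201),
]

index = build_name_index(SAMPLE_PLACES)
print(index["compu"].official_nomenclature)
```

Indexing the colloquial name alongside the official one reflects the reason the interviews were conducted: a visitor may only know a building by the name people actually use.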

As one of the interaction techniques to be tested was the mini map, a 2D representation of the footprint of each building was needed. A polygon was therefore drawn for each building that was located with the GPS. For this task, we analyzed the tools available for making interactive maps. The Google Map Maker® web application was chosen because its benefits matched the needs of the project. In this process, the official nomenclature and the colloquial name are entered, the location of the place is specified, and the outline is plotted by joining the vertices shown in the tool, as can be seen in Figure 2.

Fig. 2 2D models of the virtual campus. Polygons drawn with Google Map Maker®, exported as KML, then mapped on an HTML canvas

Having traced the polygons of the more than 200 key places, we stored all trajectories in the format of an adjacency matrix, in order to later use graph-based algorithms to recommend the shortest path to a specific location. A square matrix was used to represent binary relationships, i.e., whether or not one element is connected to another. In this case the elements are the places and buildings of our virtual campus, together with some interconnection points necessary to form routes.

These routes were also drawn with Google Map Maker®. The collected data was made available in the KML format. This is how the 2D virtual campus was made.
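The use of a binary adjacency matrix for route recommendation can be sketched as follows. The text does not name the graph algorithm that was used, so breadth-first search is an assumption here: on an unweighted (binary) matrix it returns a route with the fewest connections. The function name `shortest_route` and the five-node matrix are hypothetical.

```python
from collections import deque
from typing import List, Optional


def shortest_route(adjacency: List[List[int]], start: int, goal: int) -> Optional[List[int]]:
    """Breadth-first search over a binary adjacency matrix.

    Returns the node indices of a route with the fewest hops, or None
    if the goal cannot be reached from the start.
    """
    previous = {start: None}  # node -> node it was reached from
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            route = []
            while node is not None:  # walk back to the start
                route.append(node)
                node = previous[node]
            return route[::-1]
        for neighbor, connected in enumerate(adjacency[node]):
            if connected and neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


# Hypothetical campus with walkable links 0-1, 1-2, 2-3, 0-4 and 4-3.
CAMPUS = [
    [0, 1, 0, 0, 1],
    [1, 0, 1, 0, 0],
    [0, 1, 0, 1, 0],
    [0, 0, 1, 0, 1],
    [1, 0, 0, 1, 0],
]

print(shortest_route(CAMPUS, 0, 3))  # prints [0, 4, 3]
```

If the matrix stored walking distances instead of binary links, a weighted algorithm such as Dijkstra's would be the natural replacement.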

3.2 Building 3D Models of the Virtual Campus

Modeling is a fundamental part of 3D worlds, since without this it would be an empty world. Broadly speaking, when we talk about 3D design, we are referring to the three-dimensional creation of pieces, objects, characters or structures, generally employed in engineering and architecture, or the generation of 3D images related to the multimedia world and 3D animation.

For 3D modeling there are different tools, such as SketchUp, Blender, 3D Max and Maya. Each has advantages and disadvantages compared to the others, but the possibility of producing quality work depends less on the software than on knowledge and creativity.

In this project we used SketchUp to model the buildings. Most 3D modeling programs require at least some basic drawing knowledge. However, Google SketchUp is designed for anyone to use, as it is a very intuitive tool, especially compared to other 3D drawing programs, and its basic version is free. Like all software, it has disadvantages; the greatest is that it is limited compared to other modeling tools, as it lacks technical support, does not have some specific 3D modeling tools, and does not generate reports. It also cannot export to 3D Studio Max or AutoCAD.

Modeling alone is not enough to create a 3D world; all the developed components have to be integrated somewhere, i.e., in a videogame engine. The basic use of a videogame engine is to serve as a 2D or 3D rendering engine in which a physics engine, collision detection, sound, animation and scripting, among other features, are available. In general, it is a tool that facilitates the construction of game levels and mechanics by importing assets (external objects) such as sounds, animations, models and graphics. In this project we use Unity 3D as the videogame engine, possibly one of the best-known graphics engines to date. It is robust, easy to use, powerful, versatile for both artists and programmers, compatible with many platforms, innovative in the way it deals with the development of a video game and, above all, backed by a large community of users.

The best feature of Unity is its stability and robustness, besides having a free version that offers almost everything necessary for a great development.

Wayfinding is defined as the process of determining the strategy, direction and course necessary to reach a desired destination [17]. In virtual worlds there are problems with wayfinding, which means that users cannot find their destination easily. This is a major usability issue that affects the user experience in virtual worlds: if users cannot find their destination, they simply will not be able to use the virtual world [14]. When virtual worlds began to be studied, one of the main wayfinding problems cited was the lack of realism or the simplicity of the models [16]. With the development of technology these are no longer problems, unless the human or technological resources necessary to build the virtual world with enough quality for a realistic appearance are not available.

Although it is possible to create realism in the virtual world, it is usually difficult to become familiar with the virtual environment, because it is different from our reality and we do not have a reference model that allows us to situate ourselves in it effectively. For example, imagine that we visit a university campus for the first time and are asked to reach the rectory building. It is a difficult task, since we do not know what the rectory building looks like, much less where we are with respect to the campus, where we can go, or where we came from. These are fundamental questions that the user should be able to answer if wayfinding support elements are available.

It is in this context of real worlds that we situate this investigation. In particular, we are looking for effective navigation strategies for our virtual university campus to help visitors and members of our university community identify the location of a desired destination. In this scenario we face three major challenges [2]: i) decision making, ii) execution of the decision, and iii) processing of information. Our work is developed in the context of real-world scenarios with which there is no familiarity, based on the understanding that users of the system do not know the university campus and require help to reach their destination. This type of scenario requires the user to be assisted whenever required [14], i.e.: i) for decision making, helping users understand the whole world, how it is organized and divided, and identify where they are and where they want to go; ii) for execution of the decision, providing a continuous guide that accompanies them to their destination; iii) for processing of information, making it clear that they have reached their destination. This tool allows users to get to know the campus without having to walk through it completely: they can search for a particular building without having to walk there in person and arrive in a more direct way, or just get to know the campus. While many interaction techniques have traditionally been designed to help navigate virtual worlds, such as route mapping or GPS, they often cannot be adapted to any 3D environment without a specific goal. In this test we focused on the use of the mini map metaphor as a tool to aid navigation in virtual worlds.

The development of the world in miniature was done with the following steps:

Map. A map of the reality to represent provides the basis for building the virtual environment. Since the main objective is to show what exists today, the more recent the map, the higher the fidelity to the real world. This was explained previously and the results are shown in Figure 2.

3D models. The 3D models represent the virtual world based on the actual environment in which the user wants to navigate. We already discussed this process in the previous section.

Textures. For this specific case, the textures form the main part of the world in miniature, since they offer the perspective that facilitates navigation in the 3D environment.

Software. The software is involved in the creation of textures, as well as in the creation of 3D models and the virtual environment.

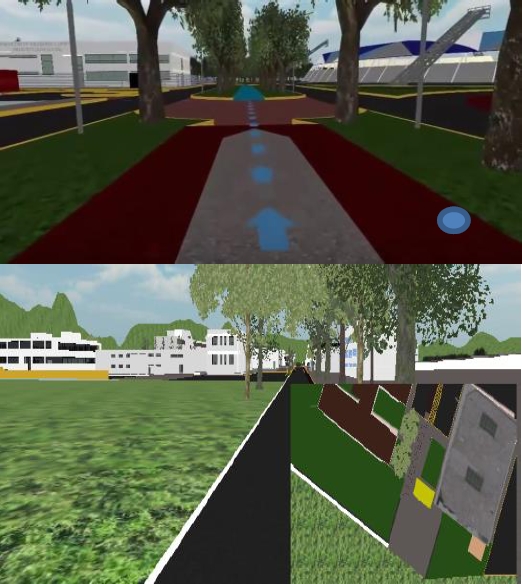

Some assistance was added to the virtual world: a mini map, or world in miniature, as guidance for following a direction, and arrows that clearly mark the right path along the waypoint route mapped on the road (Figure 4).

Fig. 3 3D models of the virtual campus, models drawn with SketchUp® exported as FBX then imported and rendered in Unity®

4 The Experiment

We tested three scenarios. In the first scenario users used mouse and keyboard interaction, which served as a baseline for comparing user performance when navigating in virtual environments. To analyze natural interaction, we explored two different mechanisms to navigate maps: hand gestures (Leap Motion ©) and body gestures (Kinect © camera body movement recognition).

The scenario was the same in every case. A student was asked to go from one location to a second location. The task was simple, but the distance was considerable, so the task would last at least around 30 seconds. Each user received a training session on going from one location to another when using the Graphical User Interface interaction mode and the Leap Motion device, i.e., the system was preconfigured and there was no way to modify the gestures. In the body-gesture scenario, users first had to provide a body language for common tasks, such as: Zoom In, Zoom Out, Front Tilt View, Back Tilt View, Move Back, Move Up, Move Left, Move Right, Rotate Left, and Rotate Right. Once those commands were communicated to the system, the user executed the task of going from one location to another.

The participants were students with prior experience using maps: eight students for each of the three experiments, 24 in total. This number of participants is consistent with related work, as studies reported in the literature use on average 7 test users and two test scenarios, and most of them (68% of reported works) did not consider other solutions [13]. The age range was from 20 to 22 years old and most of the participants (80%) were male. During each test different variables were tracked: execution time, error rate, and successful tasks. Every participant's session was recorded on video as a backup to check facial expressions and determine some simple emotions, such as surprise, happiness, and frustration.

4.1 The Set Up

The first scenario tested graphical interaction. The setup was a desk with a computer, a screen, a mouse and a keyboard. The arrow keys were used to set the direction of navigation and move around the virtual world, the mouse wheel to zoom in and out, and the keyboard to open and close the map.

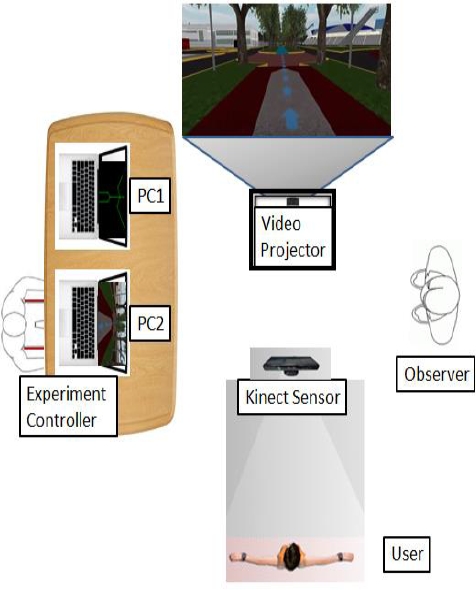

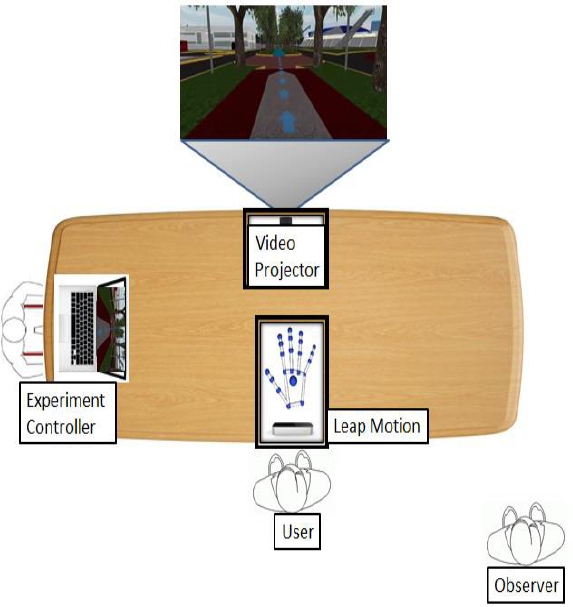

The second experiment tested hand gestures, using the Leap Motion as the input device. The experiment elements were a laptop, a projector and the Leap Motion device, distributed as depicted in Figure 5. The observer kept notes about user behavior, performance, error rate and any verbalization related to the experience of using the system.

Fig. 5 Setup for the hand gesture experiment; the device used was the Leap Motion®. A user navigates through a virtual environment using hand gestures

In the third experiment body gestures were explored with the help of the Wizard of Oz technique [11]. We chose this strategy to avoid developing an expensive system, in terms of time and effort: building a system based on body movements is a challenge because the most suitable set of body gestures needs to be identified first. Contrary to the Leap Motion experiment, there was no specific solution or common agreement among the participants, and developing multiple systems to probe every possible configuration is very complex.

As a consequence, we decided to run the experiments using the real navigation system but faking the input with keyboard commands, asking the users to define their own body gestures to navigate through the virtual world. As we used the Wizard of Oz method (see Figure 6), the experiment controller was the one who really controlled the navigation system, using control keys on the keyboard. The user under evaluation executed his movements and the experiment controller passed the corresponding commands to the system. The setup included two computers (Laptop 1 and Laptop 2): the first was in control of the Microsoft Kinect and a webcam used to document the experiment; the second was connected to the projector to simulate user interaction with the system. The test subjects were responsible for providing the movements used to configure the platform and for following the simulated paths. An observer provided support in case any doubt arose that the Wizard of Oz could not resolve. The experiment controller (Wizard of Oz) managed the devices used to document the experiment and explained to each user how the activity is performed.

The vocabulary of gestures that resulted from the experiments is presented in this section. First, the resulting hand gestures are listed in Table 1. This vocabulary was the result of a survey with undergraduate students. More than fifty students were asked to indicate which hand gesture they would make to perform each task. The vast majority agreed on the selected gestures, with an average of 90% of preferences. In fact, those hand gestures are the same as those proposed to control Google Earth with the Leap Motion device.

Table 1 Hand Gestures for Navigation using the Leap Motion Device

The two resulting gesture languages were the two most predominant in the experiments. We do not claim that they are the best or the ones with the best performance, but they are at least the ones closest to the users' mental model. In the next section we show the results of the experiments.

4.3 Results and Discussion

Although the number of participants in each experiment (eight) is not statistically significant, it yields enough information to identify system problems. It is also not that different from what is reported in the literature, where 68% of reported works evaluate with seven users [12]. Our evaluation included computer science students from the Computer Science Faculty of the Autonomous University of Puebla, who knew the tool and received a brief description and examples of how to use the platform. Later, they were asked to move from one faculty to another. Without any premeditation, we simply asked for volunteers for each experiment, and seven males and one female showed up.

In the first experiment, the results of using mouse- and keyboard-based interaction were very favorable. All users were able to complete the task and the response times did not exceed one minute. The support of the maps and the possibility of opening them using a combination of keys were received very positively.

As Table 3 shows, the navigation times were close to the ideal, since the minimum time to make the virtual tour was 35 seconds. In general, nobody had a problem, except one user who did not remember how to open the mini map but did open the guide with arrows.

In the following experiments the opposite happened: although users could ask for support or enable the map, practically nobody made use of the help. At the end of the experiment we asked the users why they did not enable the map or the arrows to guide their route. Curiously, all pointed to the complexity of remembering the gestures to control the system by hand or body. This phenomenon was less problematic with hand gestures. In the end, people remembered that a snap of the fingers opened the mini map and a closed fist opened the guide with arrows.

They stated that they did not feel lost, but the observation revealed that they were. In addition, the instability of the Leap Motion control, mostly due to inexperience, led to frustration, so at a certain point users felt so lost that they completely forgot the option to enable the guides.

We consider that a good lesson learned is that these guides should be activated by default. The second experiment was run with the Leap Motion setup (Figure 5), using the hand-gesture vocabulary from Table 1. Table 4 lists the execution times and comments from the observation.

Table 2 Body Gestures for Navigation using Kinect Device. The vocabulary is the result of the gestures where users performed better

Table 3 Execution Time and Comments to the GUI Experiment

| Gender | Execution Time | Comments |
|---|---|---|
| M | 01:00 | No problem detected. |
| M | 00:57 | No problem detected. |
| M | 00:44 | No problem detected. |
| M | 00:38 | No problem detected. |
| M | 00:58 | No problem detected. |
| M | 00:55 | No problem detected. |
| M | 00:45 | Did not recall the key to open the map but he used the arrows to get directions. |
| F | 00:53 | No problem detected. |
| Average | 00:51 | |
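The average row of Table 3 can be reproduced from the individual execution times with a few lines of code. This is only a sketch of the arithmetic; the helper names are hypothetical, and truncating fractions of a second when formatting the result is an assumption.

```python
from typing import List


def to_seconds(mmss: str) -> int:
    """Convert a 'mm:ss' string to a number of seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def to_mmss(total_seconds: float) -> str:
    """Format seconds as 'mm:ss', truncating fractions of a second."""
    whole = int(total_seconds)
    return f"{whole // 60:02d}:{whole % 60:02d}"


def average_time(times: List[str]) -> str:
    """Arithmetic mean of a list of 'mm:ss' execution times."""
    seconds = [to_seconds(t) for t in times]
    return to_mmss(sum(seconds) / len(seconds))


# Execution times of the eight GUI participants, copied from Table 3.
GUI_TIMES = ["01:00", "00:57", "00:44", "00:38", "00:58", "00:55", "00:45", "00:53"]

print(average_time(GUI_TIMES))  # prints 00:51
```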

Table 4 Execution Time and Comments to the Hand Gestures Experiment

| Gender | Execution Time | Comments |
|---|---|---|
| M | 00:48 | He got a little lost but quickly got back on the right track. |
| M | 00:25 | He went far away from the target but came back very quickly. |
| M | 04:19 | After getting lost several times, he managed to reach the target. |
| F | 02:16 | Asked for continued help. Very low system accuracy, which created a lot of stress and nerves. Despite the explanation, forgot how to use it. Moved randomly to find the reference waypoints. |
| M | 00:35 | No problem. |
| M | 00:20 | No problem. |
| M | 01:12 | Perfect control of the interaction. Very easy to find. |
| M | 02:07 | Got lost at the beginning and had problems controlling the device. Good control at the end. |
| Average | 01:08 | |

With the Leap Motion, users tended to zoom out, locate the target, zoom in to the closest location, and then easily reach the target. The common problem was losing control of the operations.

As in the previous experiment, all participants finished the experiment successfully. However, three of the users made some control errors, i.e., they performed the wrong hand gesture. One of them was constantly looking for help to try to remember the controls, as he had forgotten most of them.

Regarding the Leap Motion, participants commonly agreed that it is hard for a person to keep the arms constantly extended to interact with a system, because it is tiring. While there was near agreement on the hand-gesture language, everybody agreed that, at least for this task, they would prefer to use a GUI, as it is unnatural to use the arms to navigate.

Navigation in real life is something that we do with our body. That is why we decided to test navigation using body gestures. We opted to determine, in the same way as before, which gestures a person would make to indicate that he wants to walk. In a first stage we explored Kinect video games in which the user explores virtual worlds. At first we were surprised that the "natural" gesture of walking was not present, except in sports games where the user had to run.

The norm was that the user had to extend one of his arms to indicate direction and speed. However, we insisted on looking for a "natural" gesture for the users, and that is why we started with a Wizard of Oz type of experiment, in which the user could train the system with his own body language.

The surprise was considerable, since nobody proposed walking as a "natural" gesture to indicate the wish to move forward.

In fact, everyone agreed on moving their bodies, mainly their arms, to make the gestures. Table 2 shows only the gestures that mostly yielded better results.

Even worse, memorization problems increased, gesture errors were constant, and zoom controls were omitted. Curiously, many of the movements defined were somewhat contradictory. For example, some proposed opening their arms to zoom out and making the opposite gesture, closing the open arms, to zoom in. The problem was that returning the arms to the original position after opening them gave the wrong impression of a zoom in. The same happened when they defined the gesture of arms up to move up and arms in the rest position to move down.

The obvious problem that arises is the rest position: it is natural to adopt it while we do nothing, and it does not mean that we want to indicate the downward direction. In the third experiment we used the Kinect. The results are summarized in Table 5. During the experiment, some of the movements made were somewhat confusing, because the rest position could sometimes look like a command, and the return of a movement to its rest position could also be confused with a command to the device.
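The ambiguity of the rest position can be illustrated with a small sketch. In the experiment the gestures were interpreted by a human operator, so no automatic recognizer was involved; the naive rule below, its threshold and the sample values are purely hypothetical and only show why opening the arms and then relaxing them reads as two opposite commands.

```python
from typing import List


def naive_zoom_commands(arm_spread: List[float], threshold: float = 0.2) -> List[str]:
    """Emit a command whenever the distance between the hands changes
    by more than `threshold` between two consecutive samples."""
    commands = []
    for before, after in zip(arm_spread, arm_spread[1:]):
        change = after - before
        if change > threshold:
            commands.append("zoom_out")  # arms opening
        elif change < -threshold:
            commands.append("zoom_in")   # arms closing
    return commands


# Hypothetical samples in meters: rest, arms open, back to rest.
SAMPLES = [0.3, 1.2, 0.3]

print(naive_zoom_commands(SAMPLES))  # prints ['zoom_out', 'zoom_in']
```

The user only intended the first command; the second one is produced by the return to the rest position.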

Table 5 Execution Time and Comments to the Body Gestures Experiment

| Gender | Execution Time | Comments |
|---|---|---|
| M | 04:06 | Problems finding the place. There are no cues, so he was lost. He was constantly lost until he used the guides. |
| M | 03:03 | No problem detected. |
| M | 02:34 | No problem detected. |
| M | 07:25 | Failed to accomplish the task. Very confused, wayfinding not good, many errors with his own body language, memory problems. He did not know where to go. |
| F | 11:38 | A lot of doubt, frustration, desperation. Constantly got lost. She changed interaction techniques. |
| M | 01:05 | Very pleased with the technique. He wishes he had a Kinect of his own. |
| M | 02:00 | Minor frustration. He used only one-hand gestures to manipulate the virtual world. |
| M | 01:51 | Some problems with wayfinding and memory, and doubts about his own technique. |
| Average | 04:20 | |

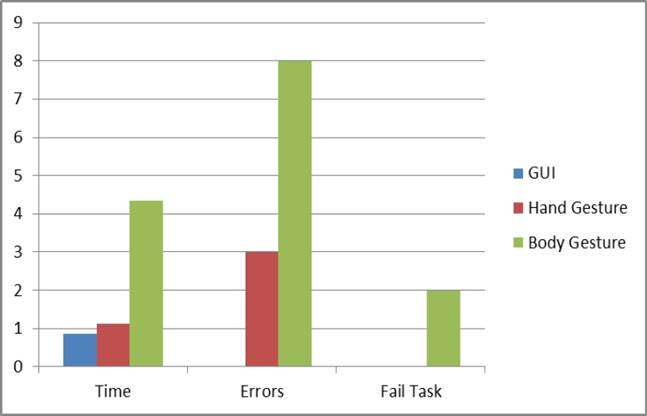

The reader can see that the execution time of the interface based on body gestures was by far the worst, when one would have hoped it would be the best. The errors increased, and all eight participants showed some confusion when using the commands (see Figure 7).

Fig. 7 Summary of the evaluation test, where three elements were evaluated: execution time, users that had errors while using the system, and unfinished tasks

Even though the users had defined the body-gesture language themselves, and despite modifying it in a second round, there were no significant changes. It is clear that the natural thing is to walk and rotate, but we are still far from having equipment that allows the user to move freely in the same way that he does in real life without actually being in it, although there are examples such as special forces training simulators, where augmented information such as terrorists or explosions is added to the real world. We also discarded the use of an immersive reality device, as people become dizzy and the interaction becomes more complex.

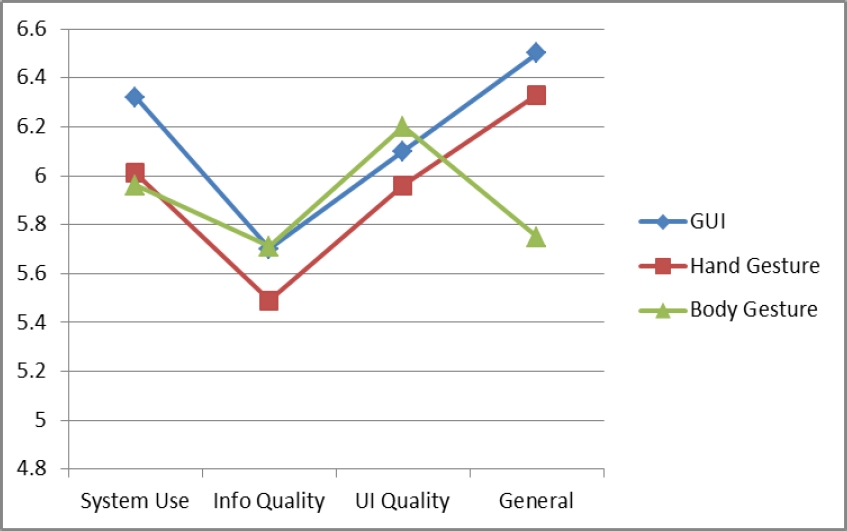

Finally, we evaluated the user preferences while using the software. We used the IBM Computer System Usability Questionnaire (CSUQ) [12] for its simplicity and its high correlation with the results (empirically proved with r=0.94). This questionnaire consists of 19 questions, structured in four groups or concepts: system use (SYSUSE, questions 1-8), information quality (INFOQUAL, questions 9-15), user interface quality (INTERQUAL, questions 16-18), and a global estimation (GLOBAL, question 19). Each question is answered on a 7-point Likert scale, where seven is the best and one is the worst. Afterwards, an average for each group is determined, as well as the standard deviation. This allows us to know the score range obtained in each category. Each experiment finished by asking the students to fill in the questionnaire; the results are depicted in Figure 8 and Table 6.
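The scoring described above can be sketched as follows. The question ranges are the ones given in the text; how exactly the averages and standard deviations were aggregated is not detailed, so averaging per participant first and then across participants (with the sample standard deviation) is an assumption, and the function names are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List, Tuple

# Question ranges (1-based, inclusive) for each CSUQ group named in the text.
CSUQ_GROUPS: Dict[str, Tuple[int, int]] = {
    "SYSUSE": (1, 8),
    "INFOQUAL": (9, 15),
    "INTERQUAL": (16, 18),
    "GLOBAL": (19, 19),
}


def participant_scores(answers: List[int]) -> Dict[str, float]:
    """Average one participant's 19 answers (1 to 7 each) per group."""
    if len(answers) != 19 or any(not 1 <= a <= 7 for a in answers):
        raise ValueError("expected 19 answers on a 1-7 scale")
    return {
        group: mean(answers[first - 1:last])
        for group, (first, last) in CSUQ_GROUPS.items()
    }


def summarize(all_answers: List[List[int]]) -> Dict[str, Tuple[float, float]]:
    """Mean and sample standard deviation per group across participants."""
    per_participant = [participant_scores(a) for a in all_answers]
    summary = {}
    for group in CSUQ_GROUPS:
        values = [scores[group] for scores in per_participant]
        spread = stdev(values) if len(values) > 1 else 0.0
        summary[group] = (mean(values), spread)
    return summary


# Made-up answers from two participants, for illustration only.
print(summarize([[7] * 19, [5] * 19]))
```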

Fig. 8 IBM CSUQ evaluation of the system, confirming that the users' preferred interaction technique is the GUI and the worst is body gesture

Table 6 IBM CSUQ average estimated values for each evaluated parameter. System use, information quality (Info Qual), user interface quality (UI Qual) and a global estimation (General)

| | System Use | Info Qual | UI Qual | General |
|---|---|---|---|---|
| GUI | 6.32 | 5.7 | 6.1 | 6.5 |
| Hand Gesture | 6.01 | 5.49 | 5.96 | 6.33 |
| Body Gesture | 5.96 | 5.71 | 6.2 | 5.75 |

As shown in Table 6 and Figure 8, there is no big difference with regard to the system functionality, the quality of the messages, and the user interface. This seems normal, as participants were using the same interactive system; what differed was the interaction technique. With regard to the natural user interfaces, the results were very similar, but in general terms the participants leaned towards the Leap Motion device, whose global score is better than that of the Kinect.

4.4 Guidelines for Natural User Interfaces

Based on the experiment and its results, we propose the following guidelines to support the design and development of Natural User Interfaces. Guidelines of this kind have been reported before [12]; based on this work, we confirm our list [8]:

Realism of the objects. Virtual objects should resemble their real counterparts as closely as possible [10].

Compatibility with the navigation. When the user is expected to navigate in a virtual world with a vast surface, it is important to let them navigate using different perspectives, such as egocentric and exocentric views [7].

Compatible movement metaphors. The user should be able to move the avatar through the virtual world using the most appropriate metaphor, such as walking, flying, or a virtual carpet [7]. Today most renderers of 3D Web applications allow fast movements; however, the flying or virtual carpet capability still has to be added to the avatar.

Compatible speed of movement. Similarly, the speed of the movement should be in harmony with the metaphor used; for example, flying should be faster than walking [7].

User movements compatible with human nature. It is important that users interact with the virtual world using body movements that correspond to those they normally perform [10]. This guideline is particularly important when gloves, head-mounted displays, or any other input device is used. However, it is also applicable and relevant to Web applications, as the use of the keyboard and mouse should take this issue into account as well. An example is the augmented reality toolkit, which can track head movements so that the viewpoint of the virtual world can be attached to them.

Compatibility with the task and the guidance offered. It is important that guidance be provided according to the task [10]. This can be ensured by modelling the task with the desired scenario in mind. In a learning application, for instance, highlighting that guides the user must be explicitly specified in the task model, so that this information is automatically considered when concretizing the 3DUI. Figure 4 depicts navigation in a virtual reality scene where the user moves by means of arm or body gestures. The navigation direction (or pointer) is represented by a set of arrows that moves according to the navigation. When the user requests help, a mini map provides guidance.

Spatial organization of the virtual environment. In a virtual world devoted to training, or serving as the mock-up of a place, it is important to keep the spatial distribution of the objects as similar as possible to the real space.

Spatial organization of the virtual environment. Related to the previous guideline, this guideline refers to the need to represent a virtual world in such a way that end users can easily discover other areas related to the main one [10]. For example, authorities may want to know where the office is located and walk through the virtual world.

Decoration appropriate to the context. The decoration of the virtual world should be compatible with the context of use that is represented [7]. In Figure 4, the decoration matches the real building, roads, and trees.

Wayfinding. Users should be able to know where they are both from a big-picture perspective and from a microscopic one [12]. In this case we implemented the world-in-miniature metaphor.

Simulate before implementing. We learned that the Wizard of Oz method is really useful for simulating a system dialog in natural language. Since its origins [11], it has been used throughout the history of interactive system development. In the field of natural interfaces in particular, it is a way to collect corpora for voice interaction systems [5], mixed reality interfaces [5], or movement commands for interaction with kids [9].

Avoid interactive metaphors that do not consider natural movements. This applies to hand, body, and arm gestures. It can be really painful to keep your arms constantly stretched forward; it may be good exercise, but it is not natural. Sometimes the user is asked to move their body into a really uncomfortable position, which is hard for the user to imitate and hard for the system to recognize.

Show directions by default when they are available. As mentioned earlier, it is better to show directions by default than to expect the user to know where to go. Wayfinding is tricky and, as we observed, users sometimes do not activate the direction help even when it is available.

We expect this set of heuristics to be useful for practitioners who have to develop interactive systems with natural interaction and virtual worlds, particularly when navigation tasks are involved.

5 Conclusion

The set of heuristics listed in the previous section was compiled considering natural user interfaces (NUI) for navigating a virtual world. The terms used are generic but, wherever we could, we mentioned terms that are specific to certain interfaces, such as “gesture” and “screen”. Our conclusion emphasizes that even when a solution is built expecting it to be natural, users may not experience it that way. We tried to look at the proposed guidelines, lessons learned, and recommendations from an impartial perspective. This is a double-edged sword, as it may lead to heuristics that do not apply to every NUI (especially to NUIs that have not been invented yet), but at the same time it is meant to be of help to any NUI designer or HCI researcher.

Regarding universal access, we believe all heuristics are suitable for any kind of user, although some heuristics make a more evident contribution to assistive technologies. This last remark is especially true if we consider the scenario depicted in section 2. The lack of proposed solutions that allow users to send interactions to the system can be remedied by heuristic 1 in Table 3. Providing different modes of operation with distinct information carriers implies offering not only multiple forms of communication (system to user and vice versa), but also different types of feedback, each of which can be suitable for a kind of disability.

Furthermore, all the heuristics in the “User Adoption” group are essential when thinking of new assistive technologies. First, users who have lived with their disabilities for a long time already have their own strategies for dealing with them, so a new technology must offer them really significant advantages. Second, many solutions are developed with only the novelty of the underlying technology in mind, and not necessarily whether they will actually be acceptable to users in their everyday activities.
