1 Introduction

Extracting bilingual lexicons from comparable corpora has received a massive interest in the NLP community, mainly because parallel data is a scare resource, especially for specific domains. More than twenty years ago, 5 and 22 described two methods for how this can be accomplished. While those studies differ in the nature of their underlying assumption, they both assume that the context of a word shares some properties with the context of its translation. In the case of 22 the assumption is that words in translation relation show similar co-occurrence patterns.

Many variants of 22 have been proposed since then. Some studies have for instance reported gains by considering syntactically motivated co-occurrences, either by the use of a parser 24 or by simple POS-based patterns 18. Extensions of the approach in order to account for multiword expressions have also been proposed (e.g. 2). Also, many have studied variants for extracting domain specific lexicons; the medical domain being vastly studied, see for instance 1 and 17. We refer the reader to 23 for an extensive overview of works conducted in this vein.

There is a trend of works on understanding the limits of this approach. See for instance the study of 12 in which a number of variants are being compared, or more recently the work of 10. One important limitation of the context-based approach is its vulnerability to rare words, which has been demonstrated in 20. The authors reported gains by predicting missing co-occurences thanks to co-occurences observed for similar words in the same language. In 21, the authors adapt the alignment technique of 6 initially proposed for parallel corpora by exploiting the document pairing structure of Wikipedia. The authors show that coupling this alignment technique with a classifier trained to recognize translation pairs, yield an impressive gain in performance for rare words.

Of course, many other approaches have been reported for mining translations in comparable corpora; again see 23 for an overview. A very attractive approach these days is to rely on so-called word embeddings trained with neural-networks thanks to gradient descent on large quantities of texts. In 15 the authors describe two efficient models of such embeddings which can be computed with the popular Word2Vec toolkit. In 16 it is further shown that a mapping between word embeddings learnt independently for each language can be trained by making use of a seed bilingual lexicon. Since then, many refinements of this approach have been proposed (see 14 for a recent comparison), but 16 remains a popular solution, due to its speed and performance.1

In this study we compare three of the aforementioned approaches: the context-based approach 22, the word embedding approach of 16 and the approach of 21 dedicated to rare words. We investigate their hyper-parameters and test them on words with various properties. This comparison has been conducted at a large scale, making use of the full English-French Wikipedia collection.

In the remainder of this article, we describe in Section 2 the approaches we tested. Our experimental protocol is presented in Section 3. Section 4 summarizes the best results we obtain with each approach, and report on the impact of their hyper-parameters on performances. In Section 5, we analyze the bias those approaches have toward some word properties. We conclude in Section 6.

2 Approaches

We implemented and tested three state-of-the-art alignment techniques. Two of them (context and embedding) makes use of a so-called bilingual seed lexicon, that is, pairs of words in translation relation; the other (document) is exploiting instead the structure of the comparable collection.

2.1 Context-Based Projection (context)

In (22), each word of interest is represented by a so-called context vector (the words it co-occurs with). Source vectors are projected (or “translated”) thanks to a seed bilingual lexicon. Candidate translations are then identified by comparing projected contexts with target ones, thanks to a similarity measure such as cosine.

We conducted our experiments using the eCVa Toolkit2, 10which implements several association measures (for building up the context vectors) and similarities (for comparing vectors).

2.2 Document-based Alignment (document)

This approach relies on a pre-established pairing of comparable documents in the collection. Given such a collection, word translations are identified based on the assumption that source and target words should appear collection-wise in similar pairs of (comparable) documents.

The approach was initially proposed for handling the case of rare words for which co-occurrence vectors are very sparse. On a task of identifying the translation of medical terms, the authors showed the clear superiority of this approach over the context-based projection approach. In their paper, the authors show that coupling this approach to a classifier trained to recognize translation pairs is fruitful. We did not implement this second stage however, since the performance of the first stage was not judged high enough, as discussed later on.

2.3 Word Embedding Alignment (embedding)

Word Embeddings (continuous representations of words) has attracted many NLP researchers recently. In 16, the authors report on an approach where word embeddings trained mono-lingually are linearly mapped thanks to a projection matrix W which coefficients are determined by gradient descent in order to minimize the distance between projected embeddings and target ones, thanks to a seed bilingual lexicon

where

We trained monolingual embeddings (source and target) thanks to the Word2Vec3 toolkit. The linear mapping was learnt by the implementation described in 3.

3. Experimental Protocol

The approaches described in the previous section have been configured to produce a ranked list of (at most) 20 candidate French translations for a set of English test words. We measure their performance with accuracy at rank 1, 5 and 20; where accuracy at rank 𝑖 (TOP@𝑖) is computed as the percentage of test words for which a reference translation is identified in the first 𝑖 candidates proposed.

Each approach used the exact same comparable collection, and possibly a seed lexicon. In the remainder, we describe all the resources we used.

3.1 Comparable Corpus

We downloaded the Wikipedia dump of June 2013 in both English and French. The English dump contains 3539093 articles, and the French one contains 1334116. The total number of documents paired by an inter-language link is 757287. While some pairs of documents are likely parallel 19, most ones are only comparable 9. The English vocabulary totalizes 7.3 million words (1.2 billion tokens); while the French vocabulary counts 3.6 million ones (330 million tokens).

We used all the collection without any particular cleaning, which departs from similar studies where heuristics are being used either to reduce the size of the collection or the list of candidate terms among which a translation is searched for. For instance, in 21 the authors built a comparable corpus of 20169 document pairs and a target vocabulary of 128831 words. Also, they concentrated on nouns only. This is by far a smaller setting than the one studied here. While our choice brings some technical issues (computing context vectors for more than 3M words is for instance rather challenging), we feel it gives a better picture of the merit of the approaches we tested.

3.2 Test Sets

We built two test sets for evaluating our approaches; one named 1k-low gathering 1000 rare English words and their translations, where we defined rare words as those occurring at most 25 times in English Wikipedia; and 1k-high gathering 1000 words occurring more than 25 times. For the record, 6.8 million words (92%) in English Wikipedia occur less than 26 times.

The reference translations were collected by crossing the vocabulary of French Wikipedia with a large in-house bilingual lexicon. Half of the test words have only one reference translation, the remainder having an average of 3 translations. It should be clear that each approach we tested could potentially identify the translations of each test word, and therefore have a perfect recall.

3.3 Seed Bilingual Lexicon

The context and embedding approaches both require a seed bilingual lexicon. We used the part of our in-house lexicon not used for compiling the test sets aforementioned. For the embedding approach, we followed the advice of 3 and compiled lexicons of size up to 5000 entries.4 More precisely, we prepared three seed lexicons: 2k-low which gathers 2000 entries involving rare English words (words occurring at most 25 times); 5k-high gathering 5000 entries whose English words are not rare, and 5k-rand which gathers 5000 entries randomly picked. For the context approach, we used 107 799 words of our in-house dictionary not belonging to the test material.

4. Results and Recipes

In this section, we report on the best performance we obtained with the approaches we experimented with. We further explore the impact of their hyper-parameters on performance.

4.1 Overall performance

The performance of each approach on the two test sets are reported in Table 1, where we only report the best variant of each approach according to TOP@1. This table calls for some comments. First, we observe a huge performance drop of the approaches when asked to translate rare words. While context and embedding perform (roughly) equally well on frequent words, with an accuracy at rank 1 of around 20%, and 45% at rank 20, both approaches on the 1k-low test set could translate correctly only 2% of the test words in the first place; the embedding approach being the less impacted at rank 20 with 12% of test words being correctly translated. What comes to a disappointment is the poor performance of the document approach which was specifically designed to handle rare words. We come back to this issue later on.

Table 1 Performance of the different approaches discussed in Section 2 on ourtwo test sets. The best variant according to TOP@1 is reported for each approach. On 1k-high, we only computed the pe^ormance of the document approach on a subset of 100 entries

It is interesting to note that approaches are complementary as evaluated by an oracle which picks one of the three candidate lists produced for each term. This is the figure reported in the last line of Table 1. On rare words, we observe almost twice the performance of individual approaches, while on 1k-high, we observe an absolute gain of 10 to 15 points in TOP@1, TOP@5, and TOP@20. This said, we observe that no more than 57% (resp. 19%) of the test words in 1k-high (resp. 1k-low) could be translated in the top-20 positions, which is disappointing.

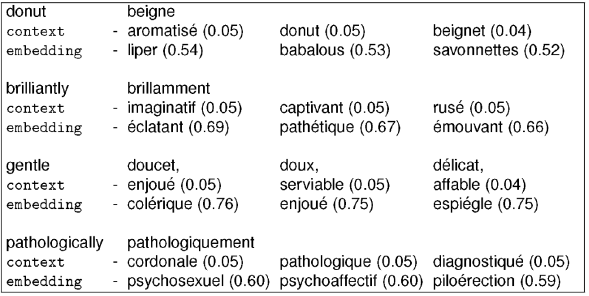

Examples of outputs produced by our best configurations are reported in Figure 1. While it is rather difficult to pinpoint why many test words were not translated, we observe tendencies. First, we notice a “thesaurus effect”, that is, candidates are often related to the words being translated, without being translations, as aromatisé (aromatized) proposed for the English word donut. Some errors are simply due to morphological variations and could have been counted correct, as pathologique produced instead of pathologiquement. We also observe a few cases where candidates are acceptable, but simply not appear in our reference.

We compared a number of variants of each approach in order to have a better understanding of their merits. We summarize the main outcomes of our investigations in the following subsections.

4.2 Recipe for the context approach

We ran over 50 variants of the context-based approach, varying some meta-parameters, the influence of which is summarized in the sequel.

Each word in a context vector can be weighted by the strength of its relationship with the word being translated. We tested 4 main association measures and found PMI (point-wise mutual information) and discontinuous odds-ratio (see 4) to be the best ones. Other popular association measures such as log-likelihood ratios drastically underperforms on 1k-high (TOP@20 of 7.8 compared to 44.3 for PMI).

Context words are typically picked within a window centered on the word to translate. While the optimal window size somehow varies with the association measure considered, we found the best results for a window size of 7 (3 words before and after) for the 1k-high test set, and a much larger window size (31) for the 1k-low test set. For rare words, context vectors are very sparse, therefore, increasing the window size leads to better performance.

Last, we observed a huge boost in performance at projection time by including in the projected vector source words unknown from our seed lexicon. Without doing this, the best configuration we tested decreased in TOP@20 from 44.3 to 31.7. Our explanation for this unexpected gain is that some of those words are proper names or acronyms which presence in the context vector might help to discriminate translations.

4.3 About the document approach

Since this approach does not deliver competitive results (see Table 1) we did not investigate many configurations as we did for the other two approaches we tested. One reason for the disappointing results we observed compared to the gains reported in 21 might be the very different nature of the datasets used in our experiments. As a matter of fact, we used the entire English-French Wikipedia collection while in 21 they only selected a set of 20000 document pairs. Also our target vocabulary contains almost 3 millions words while theirs is gathering 120k nouns.

We conducted a sanity check where we randomly selected from our target vocabulary a subset of 120k words to which we added the reference translations of our test words (so that the aligner could identify them). On the 1k-low test set, this led to an increased performance with a TOP@1 of 4.9 (compared to 0.7) and a TOP@20 of 20.2 (compared to 5.0). Those results are actually very much in line with the ones reported by the authors, suggesting that the approach does not scale well to large datasets.

4.4 Recipe for the embedding approach

We trained 130 configurations varying meta-parameters of the approach. In the following, we summarize our main observations.

First of all, the configurations which perform the best on 1k-high and 1k-low are different. On the former test set, our best configuration consists in training embeddings with the cbow model and the negative sampling (10 samples). The best window size we observed is 11, and increasing the dimensionality of the embeddings increases performance steadily. The largest dimensionality for which we managed to train a model is 200.5 Those findings confirm observations made by for frequent terms. In this work the authors managed to trained a model with embeddings of size 300. On their task, the best model trained performs a TOP@1 of 30 while on our task, the configuration described achieved a TO@1 of 22. In 15 the author also report a TOP@1 of around 30 for embeddings of size 1000. Recall that in our case, the target vocabulary size at test time is around 3 million words, while for instance in it is in the order of a hundred thousands (depending of the language pair considered), which might account for some differences in performance.

For the 1k-low test set, the best configuration we found consists in training a skip-gram model with hierarchical softmax, and a window size of 21 (10 words on both sides of each word), and an embedding dimensionality of 250 (the largest value we could afford on our computer). Again this confirms tendencies observed in 3 for the case of translating unfrequent words. Also, 16 observed that the skip-gram model and the hierarchical softmax training algorithm are both preferable when translating unfrequent words.

Regarding the influence of the seed lexicon, we observed that using the largest one is preferable. This confirms the findings in 3 that a lexicon of 5k is optimal for training the mapping of embeddings. For the low frequency test set, we further observed that using a seed lexicon of rare words (5k-rand) is better. By doing so, we could improve TOP@1 of 1 absolute point and TOP@20 of 3 points. On the 1k-high test set, the best performance is obtained with 5k-high (TOP@1 of 44.9%), then 5k-rand (TOP@1 of 40.5%) and 2k-low (TOP@1 of 10.3%).

5 Analysis

In the previous sections, we analyzed the impact of the hyper-parameters of each approach on performance. In this section, we analyze more precisely the results of the best configuration of each aligner in terms of a few properties of our test set. We believe such an analysis useful for comparison purposes. Also, in order to foster reproducibility, we are happy to share the test sets as well as the seed lexicons we used in this study.6

5.1 Frequency

We already observed a clear bias of the approaches we tested toward frequency. Figure 2 reports the performance of the best configuration of each approach when translating test words which frequency in English Wikipedia do not exceed a given threshold. For instance, we observe that on the subset of test words which frequency is 10 or less, the best approach according to TOP@1 (embedding) achieves a score of 8.76%. The frequency biais is clearly observable, and even for rather large frequency thresholds. Both context and embedding compete across frequencies, but if not too frequent test words have to be translated, and if a shortlist is what matters (TOP@20), then context might be the good approach to go with.

5.2 String Similarity

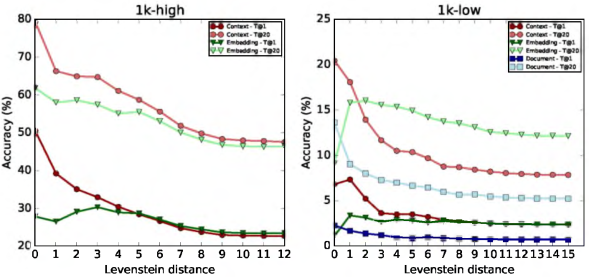

It is rather difficult in the relevant literature to get a clear sense of the intrinsic difficulty of the translation task being tackled. In particular, the similarity of test words and their reference translation is almost never reported, while for some language pairs, it is a relevant information that is even used as a feature in some approaches (e.g.,12). Figure 3 illustrates the performance of our best configurations as a function of the edit-distance between test words and their reference translation.7

Fig. 3 Accuracy TOP@1 and TOP@20 of the best configurations as a function of the edit-distance between test words and their reference translation

As we expected, we observe overall a decrease of performance for words which reference translation is dissimilar. On words translated verbatim, TOP@1 performance is as high as 79.3% for context and 61.8% for embedding, while both approaches compare as the edit-distance augments. On rare words, embedding seems to be less sensitive to the edit-distance between the source term and its reference translation.

5.3 Medical terms

Medical term translation is the subject of many papers (e.g.17,8,11 to mention just a few). In order to measure if this very task is easier than translating any kind of word, we filtered our test words with an in-house list of 22773 medical terms. We found only 22 medical terms in 1k-low and 80 in 1k-high. Although those figures are definitely not representative, we computed the performance of our best configurations on those subsets. The results are reported in Table 2. On frequent words, the gain in performance is especially marked for the context approach. We also note that the document approach seems to perform rather well on unfrequent medical terms, which is exactly the setting studied in 21. One possible explanation for this positive difference is that medical terms, at least in English Wikipedia tend to be rather frequent and their translation into French have an average edit-distance which is lower than for other types of words, two factors we have shown to impact performance positively.

6 Conclusion

In this study, we compared three approaches for identifying translations in a comparable corpus, and studied extensively how their hyper-parameters impact performance. We tested those approaches without reducing (somehow arbitrarily) the size of the target vocabulary among which to choose candidate translations. We also analyzed a number of properties of the test sets that we feel are worth reporting on when conducting such a task; among which the distribution of test words according to their frequency in the comparable collection and the distribution of their string similarity to their reference translation.

Among the observations we made, we noticed that the good old context-based projection approach 22 when appropriately configured competes with the more recent neural-network one (especially on frequent words). This observation echoes the observation made in 13 that carefully tuned count-based distributional methods are no worse than trained word-embeddings. This said, in our experiments, the embedding approach revealed itself as the method of choice overall. We also observed that the approach of designed specifically for handling rare words, while being good at translating medical terms had a harder time translating other types of (unfrequent) words. Definitely, translating rare words is a challenge that deserves further investigations, especially since unfrequent words are pervasive.

We considered only one translation direction in this work. Since mining word translations is likely more useful for language pairs for which limited data is available, we plan to investigate such a setting in future investigations. Also, we would like to compare other approaches to acquire cross-lingual embeddings.

We also provide evidence that the approaches we tested are complementary and that combining their outputs should be fruitful. Since a given approach typically shows different performance depending on the properties of test words (their frequency, etc), it is also likely that combining different variants of the same approach should lead to better performance. This is left as a future work.

nueva página del texto (beta)

nueva página del texto (beta)