1 Introduction

The aim in spatio-temporal processing is to recover signals coming from a direction of interest, while attenuating signals from other directions. The processing element that allows such selective recovery/attenuation is known as a spatial filter or beamformer26.

Although new implementations of spatial filters may improve their poor resolution when resolving signals originating from closely-located regions2, they also suffer of a fundamental limitation: their performance directly depends on the number of sensing elements (aperture), independently of the number of time samples or the signal-to-noise ratio (SNR).

One might think that the limitations on aperture could be solved by using a large number of sensors (dense sensor array) and by using large-sized sensors. However, this cannot be always achieved in practice18. First, because sensors might be costly, but mainly due to the fact that adding many of them would result in an increase of computational cost. This is the result of the output of the spatial filter being a linear combination of the data acquired by M sensors at N time samples.

The computational cost increases as the number of sensors is increased, this is due to spatial filters being dependent on the covariance matrix and its inverse, which is calculated from raw data. Thus, the more sensors are considered, the greater the data matrix would be and therefore it will be more mathematically complex to calculate the covariance matrix and its inverse.

Given the limitations previously mentioned, spatial filters in biomedical applications were initially used only for interference removal, such as the case of fetal heart monitoring in28. A spatial filtering method for localizing sources of brain electrical activity suited for MEG recordings was first described and analyzed in27. However, their analysis only considered a sphere to model the head given the limitations in computer power at the time.

The advent of high speed digital computers nowadays has led to the emergence of many numerical techniques and with the rapidly growing computing capabilities, numerous problems of real engineering interest can now be solved with relative ease and in a much shorter time. Applying new numerical techniques in the solution of the inverse problem using a realistic model as a conductive model would result in an increased resolution, then making the estimation of the magnitude and location of a current source within the brain more accurate.

In this paper, a new perspective on how today’s computers make it possible to handle the mathematical complexity involved in MEG array signal processing is presented, up to the point when new and ever more complex neural activity analysis methods can be developed and realistic geometries to model the head can be used.

2 Methods

This section briefly reviews the concepts related to spatial filters, then the processing steps involved in their use for MEG source localization are explained.

2.1 Measurement Model

MEG is a non-invasive technique that allows the measurement of ongoing brain activity produced by the activation of multiple neurons (i.e.,50,000-100,000) in a specific area generating a measurable but extremely small magnetic field oriented at an orthogonal direction outside the head. Therefore, MEG requires an array of extremely sensitive superconducting quantum interference devices (SQUIDs) that can detect and amplify the magnetic fields generated by neurons a few centimeters away from the sensors. MEG is an attractive technology to study brain activity since magnetic fields pass unimpeded through the skull, resulting in a undistorted signature of neural activity that can be recorded at the scalp level13,10.

MEG measurements are assumed to be produced by a neural source that can be modeled by an equivalent current dipole (ECD), whose magnitude is given by s(t)=[sx(t),sy(t),sz(t)]T (assuming a Cartesian coordinate system) and located within the brain. The dipole is allowed to change in time but it remains at the same position rs during the measurements period. The ECD model holds in practice for evoked response and event-related experiments19. Then, the MEG data can be grouped, for the case of k=1,2,…,K independent experiments (trials), into a spatio-temporal matrix Yk of size M x N at the k th trial such that

where y m(t)is the measurement at the mth sensor, for m = 1,2,…,M, and acquired at time sample t = 1,2,…,N. Under these conditions, the following measurement model can be proposed:

where A(rs) is the M x 3 array response matrix, S=[s(1)⋯s(N)] is the 3 x N dipole moment matrix, and V k is the noise matrix. The array response matrix is derived using the quasi-static approximation of Maxwell’s equations, which connect time-varying electric and magnetic fields produced by an equivalent current dipole on a volume that approximates the head’s geometry (see15,7, and references therein). Then, in a physical sense, A(r s) represents the material and geometrical properties of the medium in which the sources are submerged.

2.2 Spatial Filtering

A spatial filter W(rs), such that S=WT(rs)Yk, can be designed in order to satisfy the following condition:

Note that the unit response in the recovery band is enforced by

while zero response at any point r in the attenuating band implies W(rs) must also satisfy

There are many ways to compute W(rs). One of them is the linearly constrained minimum variance (LCMV) spatial filter, which offers an alternative to design an optimal filter, that is to find W(rs) such that the variance at the filter’s output is minimum while satisfying the linear response constraint (4). Let us consider that the variance of the signal is given by

where tr{⋅} indicates the trace. Note that R=E[YYT] corresponds to the data’s covariance matrix. Hence, (6) can be written as

Therefore, the LCMV spatial filtering problem is posed mathematically as

The solution to (8) may be obtained using Lagrange multipliers (which is the classical method for finding local minima of a function subject to equality constraints) and completing the square, which results in25

For the case of unknown R, a consistent estimate of this covariance matrix (denoted by ˆR) can be used.

Applying (9) to the original MEG measurements provides an estimate of the dipole moment at location r s. Furthermore, the estimated variance or strength of the activity at r s is the value of the cost function in (8) at the minimum. Then, after some algebra, the estimated variance of the neural source is given by

It is also useful to estimate the amount of variance that can be credited to the noise. Hence, in a similar way as in (10), the noise variance is given by

where ˆQ corresponds to an estimate of the covariance matrix of the noise. This matrix is usually estimated from portions of the measurements where the neural source due to the stimulus is not active (e.g., pre-stimulus interval or base level of brain activity). Therefore, an estimate of the source localization based on (10) and (11) can be computed as

which is equivalent to maximizing the source’s variance (normalized by the variance of the noise) as a function of r.

Equation (12) provides an accurate estimate of r s under the assumption that regions of large variance presumably have substantial neural activity27. For that reason, ηLCMV(r) in (12) is often referred to as a neural activity index. However, the sensitivity of the LCMV filter to imperfections in the model knowledge is a well-documented fact 21. The main approach to remedy this problem is to improve the conditioning of the covariance matrix ˆR via an eigenspace projection, under the consideration that its signal and noise contributions belong to orthonormal subspaces20,24:

where Π⊥R corresponds to the projection matrix of the data onto the null space of the covariance matrix, and U 0 is the matrix whose columns are the orthonormal eigenvectors of ˆR that correspond to its zero eigenvalues. Hence, motivated by the “classical” LCMV solution in (9), the following structure can be proposed9:

Note that in (14) the inverse of the matrix has been replaced by the generalized inverse (denoted by [⋅]- ) because it is a reduced-rank beamformer8.

While (10) uses the classical neural activity index based on an estimate of the signal’s variance, a similar calculation based on (14) will produce an estimate of the sparsity as a function of the position30. Such estimate is obtained by replacing ˆR-1 by Π⊥R in (10), which results in

while a similar sparsity measure can be defined for the case of the noise:

where Π⊥Q is the projection matrix of the noise onto the null space. Therefore, an estimate of the source localization based on (10) and (11) can be computed as

which is equivalent to minimizing the source’s sparsity (normalized by the sparsity of the noise) as a function of r. Hence, ηEIG(r) will be referred to as the neural sparsity index.

Going back to the properties of the “classical” index in (12), they have been thoroughly investigated in 27 and derived works. Its main drawback has been found to be its sensitivity to correlated source cancellation and its poor performance under low SNR conditions. To circumvent this difficulty, a multi-source extension has been recently proposed in14. Namely, the following multi-source activity index (MAI) has been proposed for the case of l neural sources as

Where

And

The applicability of MAI(rs) has been already demonstrated in14. Nevertheless, it shall be noted that small changes in H(rs) may cause huge changes in H(rs)-1 if the former is ill-conditioned5. Furthermore, ill-conditioning can also be the result of using the estimates ˆR and ˆQ instead of R and Q, respectively, which is a common practice in source localization techniques based in EEG/MEG recordings. In order to alleviate the aforementioned shortcomings of the multi-source activity index defined in (18), a reduced-rank extension has been introduced in16 as follows:

where p is a natural number such that 1≤ρ≤3l, where l is the unknown number of concurrently active sources, and PR(G(rs)ρ) is the orthogonal projection matrix onto the subspace spanned by p eigenvectors corresponding to the largest eigenvalues of G(rs). The RRMAIT1(rs,ρ) achieves its maximum when the covariance matrix H(rs)-1 is replaced by a well suited estimator, such as the one proposed in 17,29. Based on that, another reduced-rank activity index can be defined as16

where PR(H(rs)p) is the orthogonal projection matrix onto the subspace spanned by p eigenvectors corresponding to the largest eigenvalues of H(rs).

3 Numerical Examples

In this section, the applicability of the neural activity indexes in (12), (17), (18), and (22) is shown through numerical examples using real MEG data corresponding to measurements of visual responses. The goal of these experiments is to show the use of those spatial filters in finding the location of neural sources from the MEG data.

The data used for these experiments is available at the MEG-SIM portal, which is a repository that contains an extensive series of real and simulated MEG measurements freely available for testing purposes1. The data were acquired at a sampling rate of 1200 Hz, and they were band-pass filtered between (0.5, 40) Hz with a sixth-order Butterworth filter. The data correspond to the response of Subject #1 to small visual patterns of two sizes (1.0 and 5.0 degrees visual angle) which were presented at 3.8 degrees eccentricity in the right and left visual fields, respectively. The small visual pattern was designed to activate ≈4mm 2 of tissue in primary visual cortex (located in the occipital lobe of the brain), while the large stimulus activate ≈20mm2 of visual cortex. In both cases, the activation in the brain is expected to appear in the contra-lateral hemisphere (i.e., opposite to the side of the presentation of the stimulus). The subject passively viewed a small fixation point at the center of the screen while the stimuli were randomly presented to the left and right visual fields for a duration of 500 ms and at a rate of 800-1300 ms (slightly randomized to avoid expectation). Two hundred individual responses for each of 2 stimulus conditions were acquired.

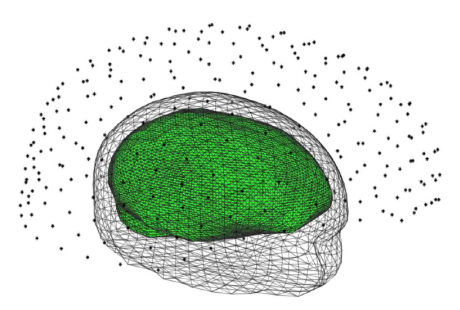

The MEG measurements were obtained with an array of M=275 channels with the spatial distribution of the VSM MedTech MEG system considered at the MEG-SIM portal. There, the anatomical MRI data of the subject is provided as well. Hence, a realistic head model can be created by first segmenting the MRI images with BrainVISA3, next tessellated meshes were generated from the segmented volumes using Brainstorm23. If a more refined and specific segmentation of brain structures is required as an aid in the source localization, brain atlases may be used to find homologous points or structures11. Here, the head model was composed by three tessellated meshes which were nested one inside the other in order to approximate the geometry of the scalp, skull, and brain. Each volume was given a homogeneous conductivity of 0.33, 0.0041, and 0.33 S/m, respectively. In particular, the volume corresponding to the brain was constructed with 11520 triangles. A full rendering of the head model and the position of the magnetometers is shown in Figure 1.

Fig. 1 Head model used in our experiments (only the scalp and the brain are shown). The asterisks indicate the position of the magnetometers above the head

Based on those conditions, the beamformers were evaluated at the position r corresponding to the centroid of each of the triangles comprising the brain mesh. In both cases, the array response matrix A was calculated using the computer implementation provided in22, which corresponds to a solution of based on the boundary element method (BEM) of the quasi-static approximation of Maxwell’s equations. BEM is a numerical method for solving partial differential equations (in this case Maxwell’s equations) with the advantage of reformulating them as discrete integral equations that then are solved on simple geometrical elements of a boundary mesh12. The data covariance matrix ˆR and the noise covariance matrix ˆQ were estimated from the data acquired in the 240 ms following the stimulus and the previous 240 ms, respectively. For comparison purposes, we considered the case of l=1 active source in the calculation of MAI and RRMAIT2.

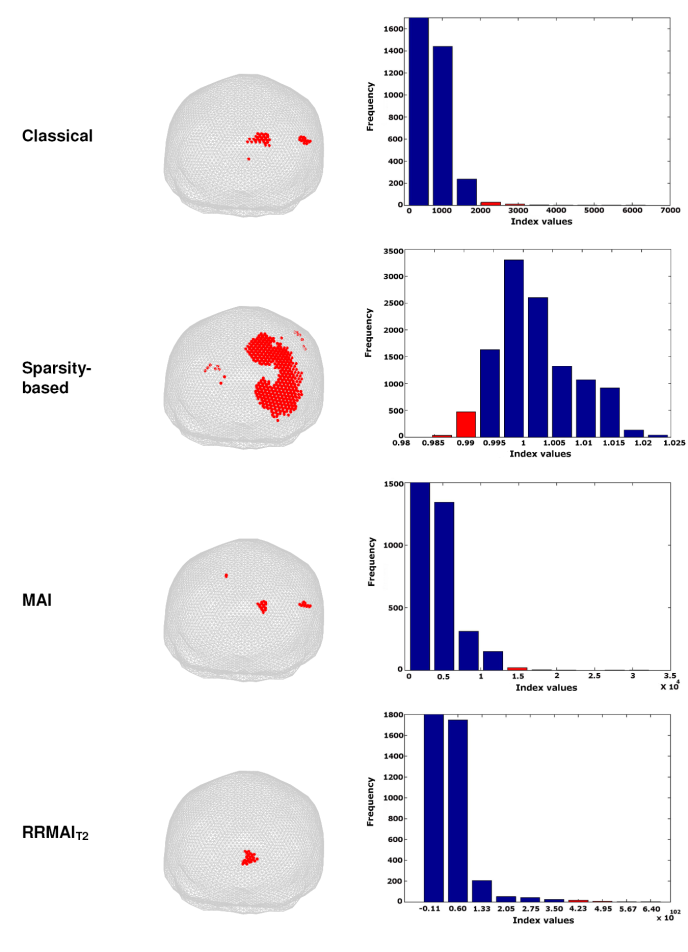

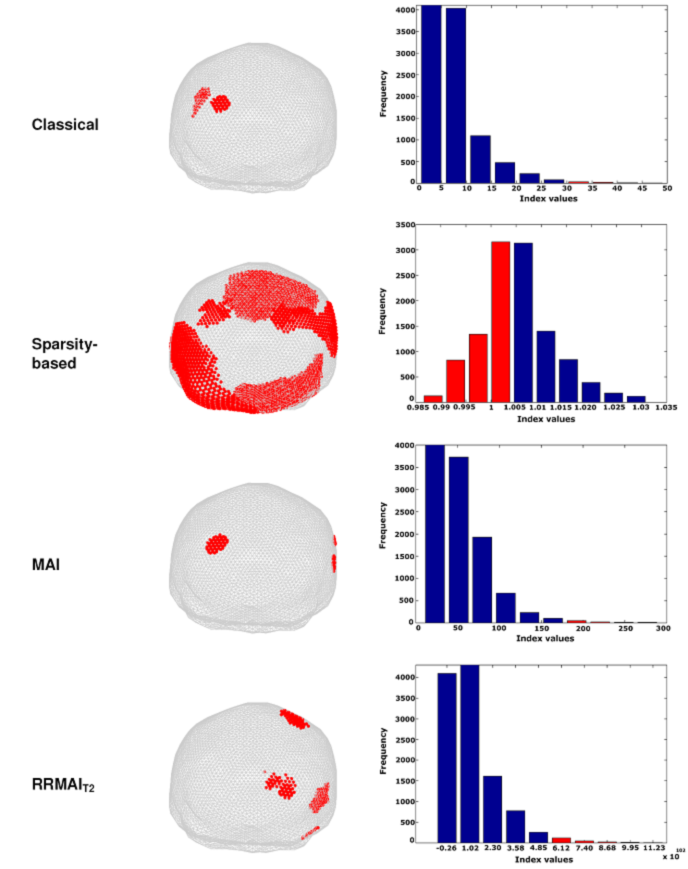

Figures 2 and 3 show the results of computing the index values for each of the beamformers. Given that the magnitudes of the indexes are very different, we decided to compare them in terms of their distributions. Therefore, we show the histogram of each index, where the red bars indicate the percentiles that were necessary to display so that the positions r with most significant index values (the minimum for the case of the sparsity-based index and the maximum values for the others) had an anatomical correspondence with the expected position neural source. Note that most of the positions indicated with red dots on the surface of the brain’s mesh coincide with a neural activation located at the primary visual cortex, but different portions of the respective distributions of the indexes were accounted for in order to achieve such correspondence. In all cases, we show the mesh modeling the head in an orientation such that the occipital lobe is fully seen from the back of the head.

Fig. 2 MEG source localization of a large visual stimulus presented on the left side of the visual field for one subject. The red dots are superimposed in the brain mesh in order to highlight the location of the most significant values of the corresponding index. In the histogram, the red bars indicate the percentile that corresponds with the index values that are displayed

Fig. 3 MEG source localization of a small visual stimulus presented on the right side of the visual field for one subject. The red dots are uperimposed in the brain mesh in order to highlight the location of the most significant values of the corresponding index. In the histogram, the red bars indicate the percentile that corresponds with the index alues that are displayed

In the case of a large visual stimulus presented to the left visual field (Figure 2), both MAI and RRMAIT2 provide very good results in terms of focalization of the source, but RRMAIT2 outperforms MAI as its most significant index values correspond with the tail of the distribution. The classical beamformer also achieves good results in those terms, but the position of the estimated source location is biased. Finally, the sparsity-based index is accurate in detecting the region with less-sparse-sources (i.e., those more likely to be related with the stimulus), but fails in terms of focalization. Clearly, an extreme-value distribution would be the most desired outcome in the index calculation process, but the sparsity-based index tends to be better described by a Gaussian distribution.

For a small visual stimulus presented to the right visual field (Figure 3), we obtained similar results as those previously described in terms of the shape of the distributions. However, in this case RRMAIT2 fails to estimate the source location (an ipsi-lateral patch is detected instead). This can be credited to the fact that we maintained the same value of p in (22) for all our calculations, while it is well-known that such parameter requires to be adjusted in a case-to-case fashion (see16 for a full account of that issue).

In terms of the computational cost, the calculation of each of the indices here tested was fully implemented in Matlab®, and the computer used was a HP ProLiant ML110 G7 server with a Xeon E3-1220 processor, 3.1 GHz of speed, and with 6 GB of RAM memory. Under those conditions, computing the sparsity neural index (which is the most mathematically complex of the four) took 42.3095 minutes. Nevertheless, the distance between the two possible solutions (i.e., the distance between the centroids of two triangles sharing a side) was 4.7 millimeters, which is much larger than the allowed error in source localization for clinical applications, such as in neurosurgery, where the sources must be located with a precision of at least 1 mm. Hence, for clinical applications, a much more dense brain mesh (i.e., more triangles in the tessellation) must be used. Still, such increase in the computing complexity is something that can be handled through many different types of hardware (e.g., graphic processing units), and with different algorithms to implement the beamformer (see, e.g., 4). In fact, thanks to the increase in computer power, beamforming has been resurrected as a suited technique for analysis of brain activity.

4 Conclusions

The use of spatial filters in the solution of the neuroelectric inverse problem involves very complex mathematical calculations. However, it is possible to manage such calculations with today’s computer power. Furthermore, new spatial filters, such as those based on eigenspace projections, can be used to improve the classical LCMV solution originally proposed in27.

Here, different indices of neural activity (all of them based on beamforming) were compared in terms of their ability to provide a focalized and anatomically correct estimation of a neural evoked response. Therefore, we looked for a beamformer to generate significant index values (i.e., at the tail of the distribution) and with an accurate correspondence with the expected location of the neural activity (primary visual cortex in this case). However, the methods here analyzed showed little consistency, that is, a single method not always provided good results for the same type of data.

Nevertheless, we do not expect to find a spatial filter that performs well for all types of data. For example, in the case of the sparsity-based index, we believe it did not provide good results for the evoked responses here tested as it is better suited for data with low SNR (i.e., with larger sparsity). Another example is the MAI, which is known to perform better for cases where correlated sources are involved, then MAI can be computed within a region-of-interest (ROI) in order to provide a focalized estimation. Therefore, it is necessary to continue with the development of new indexes based on more consistent estimates, as well as characterize the response of different beamformers for different types of data.

Finally, it is worth mentioning that the methods here tested are not mutually exclusive, and information obtained from a combination of methods may improve the overall result. An example of such approach has already been presented in a preliminary version of this paper (see6), in which the solutions of the classical neural activity and the sparsity-based indexes were combined in order to increase the focusing in the estimation of auditory evoked responses. Therefore, we believe new techniques in brain source localization may benefit from using hybrid techniques that take advantage of complementary information. Such complementarity could be further extended to the joint analysis of electroencephalographic (EEG) and MEG data. While for this paper only MEG data was available, new acquisition systems allow for the simultaneous measurement of EEG and MEG.

text new page (beta)

text new page (beta)