INTRODUCTION

Electromyography (EMG) is a technique that measures the bioelectrical signals generated by muscles when the body performs an action. These signals have been used to analyze athletic performance, to remotely control complex mechanical and electronic systems in motion, and to monitor progress during rehabilitation, among other applications. Surface EMG (sEMG) signals are acquired by means of electrodes placed on the skin.

The literature reports the use of one, two and up to three electrode arrays in the RLD (Right Leg Driven) configuration. The commercial Myo Armband from Thalmic Labs [1] is a wireless electronic bracelet with 8 channels (8 arrays of 3 dry surface mini-electrodes). When this bracelet is placed around the forearm, it provides a complete view of the sEMG signals of the forearm muscles during any hand movement. Measuring EMG all the way around a limb in this way is called sEMG-360°.

In recent years, several studies have used sEMG signals to identify the intended movement of the human hand so that it can be reproduced by a robotic hand. The signals are recorded while different gestures (movements) are performed, processed to extract their most relevant information (features), and then fed into a classifier that identifies the movement made by the user.

Tavakoli et al. [2] recorded forearm sEMG signals during four hand states: open hand, closed hand, hand at rest and wrist flexion. The signals were acquired with 3 surface electrodes, and a time-domain feature, the mean value (MV), was extracted from each of them. An SVM classifier was then used to predict the movements, reaching an accuracy of 90%.

Shi et al. [3] used surface electrodes to acquire forearm sEMG signals during 4 gestures: closed hand, extended index finger, extended thumb, and the 4 fingers extended together. Time-domain features were extracted, the main descriptors being the mean absolute value (MAV), zero crossings (ZC), slope sign changes (SSC) and waveform length (WL). These features were fed into a k-nearest neighbors (KNN) classifier, which achieved an accuracy of 94%.

Krishnan et al. [4] used the Myo Armband to acquire EMG signals during 5 movements of the human hand. The authors extracted features such as the simple square integral (SSI), the maximum and minimum values, the mean frequency (MF) and the mean power (MP); these features were fed into an SVM classifier, which achieved identification accuracies of 92.4% for one user and 84.27% for another.

Mukhopadhyay et al. [5] used 8 arrays of 3 electrodes to predict 8 classes of hand movement: wrist flexion, wrist extension, wrist pronation, wrist supination, force grip, pinch grip, open hand and rest. Power-spectral descriptors were used to extract features from the sEMG signals, and a deep neural network (DNN) was then used as the classifier, achieving 98.88% accuracy.

Sanchez et al. [6] used the Myo Armband to acquire sEMG signals for 8 hand gestures to be reproduced by a robotic hand. They extracted time-domain features such as MAV, root mean square (RMS), WL, average amplitude change (AAC), integrated EMG (IEMG) and difference absolute standard deviation value (DASDV); these features were fed into an extended associative memory (EAM) classifier, which reached an accuracy of 94.83% with the MAV and RMS extractors.

Table 1 shows a summary of the previous literature that includes different characteristics such as the number of electrodes, their position, number of gestures, type of feature extraction method, and the identification accuracy.

Table 1 Summary of previous work on EMG-based gesture classification.

| Article | No. of electrodes on forearm | No. of gestures | Feature extraction method | Classifier | Accuracy (%) |
|---|---|---|---|---|---|
| Tavakoli et al. [2] | 1 | 4 | MV | SVM | 90 |
| Shi et al. [3] | 2 | 4 | MAV, ZC, SSC and WL | KNN | 94 |
| Krishnan et al. [4] | 8 | 5 | SSI, Max, Min, MF and MP | SVM | 92.4 and 84.27 |
| Mukhopadhyay et al. [5] | 7 | 8 | fTDD | DNN, SVM, KNN, RF, DT | 98.66, 90.64, 91.78, 88.36 |
| Sanchez et al. [6] | 8 | 8 | IEMG, MAV, VAR, RMS, DASDV, AAC and WL | EAM | 95.83 |

The previous review shows that several articles address the identification of hand gestures using different feature extractors and classifiers [7], with 1, 2, 4 and 8 electrode arrays and with varying identification accuracy. Because sEMG signals are random in nature, researchers remain interested in improving the accuracy with which human hand gestures are identified from EMG signals.

The present work aims to improve gesture identification accuracy by using the sEMG-360 array alone, while keeping standard time-domain feature extraction methods (MAV, RMS and AUC) and the SVM classifier, to identify 7 gestures of the human hand.

MATERIALS AND METHODS

This section describes the methodology used to extract features from the sEMG-360 signals (8 channels spaced 45° apart around the forearm) recorded during 7 movements of the human hand: open hand (5 fingers extended), closed hand (5 fingers flexed), and the flexion-extension of each of the 5 fingers individually. It also explains the method used to evaluate identification performance.

Myo Armband bracelet

The Myo Armband, developed by Thalmic Labs, is shown in Figure 1 [1]. It is a bracelet worn on the forearm that records the sEMG activity generated by muscle movement. The device has 8 arrays of 3 dry surface electrodes for sEMG monitoring and also integrates an accelerometer, a gyroscope, a magnetometer, an ARM Cortex-M4 processor, indicator LEDs, vibration motors, Bluetooth communication and a rechargeable battery.

The sEMG sampling rate of the Myo Armband is 200 Hz, which gives the sampling period in Equation 1:

$$T_{sampling} = \frac{1}{f_s} = \frac{1}{200\ \text{Hz}} = 0.005\ \text{s} = 5\ \text{ms} \qquad (1)$$

where $T_{sampling}$ is the sampling period and $f_s$ is the sampling rate.

Thus, every 5 ms one sample is acquired from each of the 8 channels of the Myo Armband's 360° configuration.

Acquisition of EMG signals

The Myo Armband was used to acquire the 8 sEMG signals (8 channels) for the flexion-extension movement of each finger of the human hand, the extended (open) hand and the relaxed (closed) hand. The 8 channels of each movement were transmitted over the device's built-in Bluetooth link, captured with a Python program, and saved on a PC for later analysis.

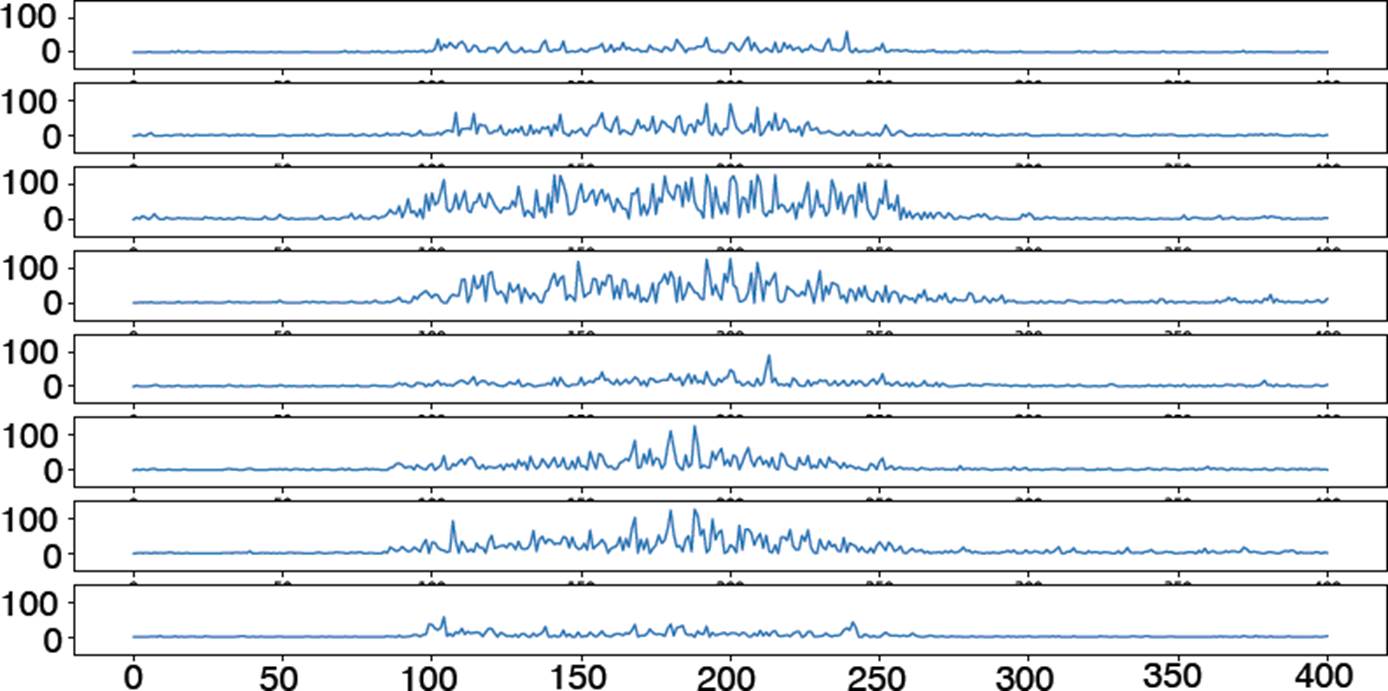

A window of 400 samples was generated, as shown in Figure 2, which plots the sEMG signals of the 8 channels during the flexion-extension movement of the middle finger. A 400-sample segment represents 2 seconds of signal (400 × 5 ms); the gesture took 1 second, and the rest of the window covers the intervals before and after it.
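The relation between sampling rate, window length and window duration can be checked with a short sketch. It assumes, hypothetically, that a recording is stored as a NumPy array of shape (samples, channels) and is cut into non-overlapping 400-sample windows; the text does not describe how the windows were segmented, and the data below are random numbers, not sEMG.

```python
import numpy as np

FS_HZ = 200           # Myo Armband sEMG sampling rate (from the text)
WINDOW_SAMPLES = 400  # window length used in this work
N_CHANNELS = 8        # channels of the sEMG-360 bracelet

def split_windows(stream: np.ndarray, window: int = WINDOW_SAMPLES) -> np.ndarray:
    """Split a (n_samples, n_channels) recording into non-overlapping windows.

    Returns an array of shape (n_windows, window, n_channels); trailing
    samples that do not fill a whole window are discarded.
    """
    n_windows = stream.shape[0] // window
    trimmed = stream[: n_windows * window]
    return trimmed.reshape(n_windows, window, stream.shape[1])

# Hypothetical 10-second recording: random integers standing in for sEMG.
rng = np.random.default_rng(0)
recording = rng.integers(-128, 128, size=(10 * FS_HZ, N_CHANNELS))
windows = split_windows(recording)
print(windows.shape)                # (5, 400, 8)
print(WINDOW_SAMPLES / FS_HZ, "s")  # 2.0 s per window
```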

Figure 2 sEMG signals of the 8 channels, corresponding to the flexion-extension movement of the middle finger.

Once the sEMG signals had been acquired for the preset gestures, features were extracted from each of the 8 channels for the 7 movements. All features were computed in the time domain using the three extractors most frequently reported in the literature: the mean absolute value (MAV) [8], the root mean square (RMS) [9] and the area under the curve (AUC) [6].

The MAV method computes the mean of the absolute value of the sEMG signal, as shown in Equation 2:

$$MAV = \frac{1}{N}\sum_{i=1}^{N} \lvert x_i \rvert \qquad (2)$$

where $N$ is the number of samples in the window and $x_i$ is the $i$-th sample.
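As a minimal NumPy sketch of Equation 2, the MAV can be computed channel by channel for a window stored as an (N, channels) array; this array layout is an assumption, and the toy values are hypothetical.

```python
import numpy as np

def mav(window: np.ndarray) -> np.ndarray:
    """Mean absolute value (Equation 2) of each channel.

    `window` has shape (N, n_channels); the result has one value per channel,
    so an 8-channel window yields an 8-dimensional feature vector.
    """
    return np.mean(np.abs(window), axis=0)

# Toy 4-sample, 2-channel window (hypothetical values).
x = np.array([[ 1.0, -2.0],
              [-3.0,  2.0],
              [ 2.0, -2.0],
              [-2.0,  2.0]])
print(mav(x))  # [2. 2.]
```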

The RMS method computes the root mean square value of the sEMG signal, as shown in Equation 3:

$$RMS = \sqrt{\frac{1}{N}\sum_{i=1}^{N} x_i^{2}} \qquad (3)$$

where $N$ is the number of samples in the window and $x_i$ is the $i$-th sample.
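Equation 3 can be sketched in the same hypothetical (N, channels) layout. In the toy window, the second channel contains a single large sample; its RMS is 2.0 while its MAV would be only 1.0, which illustrates how RMS weights peaks more heavily.

```python
import numpy as np

def rms(window: np.ndarray) -> np.ndarray:
    """Root mean square (Equation 3) of each channel of an (N, n_channels) window."""
    return np.sqrt(np.mean(window ** 2, axis=0))

# Toy 4-sample, 2-channel window (hypothetical values).
x = np.array([[ 1.0, 0.0],
              [-1.0, 0.0],
              [ 1.0, 0.0],
              [-1.0, 4.0]])
print(rms(x))  # [1. 2.]
```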

The area method applies the trapezoid rule: the definite integral is approximated by adding the areas of the trapezoids that make up the sEMG signal. The area under the curve is calculated with Equation 4:

$$AUC = \int_{a}^{b} f(x)\,dx \approx \frac{\Delta x}{2}\left[f(x_0) + 2\sum_{i=1}^{N-1} f(x_i) + f(x_N)\right] \qquad (4)$$

where $\Delta x = (b-a)/N$ is the length of each subinterval, $x_i = a + i\,\Delta x$, $f(x_i)$ is the value of the sEMG signal at $x_i$, and $a$ and $b$ are the limits of the interval over which the integral is evaluated.
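A NumPy sketch of the composite trapezoid rule in Equation 4, applied per channel. It assumes the window holds the N + 1 sample values f(x_0), …, f(x_N) and that Δx is one sample (passing dx = 0.005 would express the area in seconds at 200 Hz). The text does not state the unit of Δx or whether the signal is rectified first, so the sample values are used as given.

```python
import numpy as np

def auc(window: np.ndarray, dx: float = 1.0) -> np.ndarray:
    """Composite trapezoid rule (Equation 4) for each channel.

    `window` has shape (N + 1, n_channels): N + 1 samples bound N subintervals
    of width dx. dx = 1.0 (one sample) is an assumption, not from the text.
    """
    inner = window[1:-1].sum(axis=0)  # f(x_1) + ... + f(x_{N-1})
    return dx / 2.0 * (window[0] + 2.0 * inner + window[-1])

# Toy 5-sample, 2-channel window (hypothetical values):
# channel 0 is a triangle, channel 1 is constant.
x = np.array([[0.0, 2.0],
              [1.0, 2.0],
              [2.0, 2.0],
              [1.0, 2.0],
              [0.0, 2.0]])
print(auc(x))  # [4. 8.]
```

The closed form gives the same result as summing the individual trapezoids, `np.sum((x[:-1] + x[1:]) / 2.0 * dx, axis=0)`.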

Classifier

Once the features have been extracted with each of the proposed methods, a classifier is needed to separate the data and identify each movement. This study uses a support vector machine (SVM) classifier with 100 feature vectors for each of the predefined gestures. The SVM could be trained with more or fewer than 100 samples, but according to the literature one hundred is a representative number for statistical analysis [10] [11] [12]. An SVM determines hyperplanes that separate the training data of the different classes with maximum margin; a new feature vector is assigned to a class according to the side of these hyperplanes on which it falls.
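A minimal sketch of this training setup, assuming scikit-learn's `SVC` as the SVM implementation (the text does not name a library). Because the recorded sEMG features are not available here, the feature matrix is synthetic: 100 hypothetical 8-channel feature vectors per gesture, scattered around a different random centre for each of the 7 gestures. The 70/30 stratified split mirrors the split reported in the Results section and leaves 30 test samples per gesture.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

N_GESTURES = 7       # gestures identified in this work
N_PER_GESTURE = 100  # feature vectors per gesture
N_CHANNELS = 8       # Myo Armband channels

# Synthetic stand-in for real features (hypothetical data): each gesture's
# 8-channel feature vectors are scattered around its own random centre.
rng = np.random.default_rng(42)
centres = rng.uniform(0.0, 50.0, size=(N_GESTURES, N_CHANNELS))
X = np.vstack([c + rng.normal(0.0, 2.0, size=(N_PER_GESTURE, N_CHANNELS))
               for c in centres])
y = np.repeat(np.arange(N_GESTURES), N_PER_GESTURE)

# 70 % training / 30 % testing; stratify keeps 30 test samples per gesture.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0)

clf = SVC(kernel="linear")  # multiclass is handled internally (one-vs-one)
clf.fit(X_train, y_train)
accuracy = clf.score(X_test, y_test)
print(f"test accuracy: {accuracy:.4f}")
```

With real data, `X` would hold one MAV, RMS or AUC feature vector per window instead of the synthetic values.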

SVM algorithms use a family of mathematical functions called kernels. Common kernel functions include the linear, polynomial and radial basis function (RBF) kernels, among others. The choice of kernel determines how accurately the data can be classified.

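The kernel functions are defined as:

$$K_{linear}(x, y) = x^{T} y$$

$$K_{poly}(x, y) = \left(x^{T} y + b\right)^{n}$$

$$K_{RBF}(x, y) = \exp\left(-\gamma \lVert x - y \rVert^{2}\right)$$

where $x$ and $y$ are the feature vectors entered into the classifier, $b$ is a constant term that can be tuned to improve performance, $n$ is the degree of the polynomial, and $\gamma$ defines how much influence a single training example has.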
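The three kernels can be written directly from their definitions. The NumPy sketch below evaluates each one for a pair of hypothetical 2-D feature vectors; the values of `b`, `n` and `gamma` are illustrative only.

```python
import numpy as np

def linear_kernel(x: np.ndarray, y: np.ndarray) -> float:
    """K(x, y) = x^T y"""
    return float(np.dot(x, y))

def polynomial_kernel(x: np.ndarray, y: np.ndarray, b: float, n: int) -> float:
    """K(x, y) = (x^T y + b)^n"""
    return float((np.dot(x, y) + b) ** n)

def rbf_kernel(x: np.ndarray, y: np.ndarray, gamma: float) -> float:
    """K(x, y) = exp(-gamma * ||x - y||^2)"""
    return float(np.exp(-gamma * np.sum((x - y) ** 2)))

# Two hypothetical 2-D feature vectors.
x = np.array([1.0, 2.0])
y = np.array([3.0, 0.5])
print(linear_kernel(x, y))                  # 4.0
print(polynomial_kernel(x, y, b=1.0, n=2))  # 25.0
print(rbf_kernel(x, y, gamma=0.5))          # exp(-3.125), about 0.0439
```

A larger `gamma` makes the RBF kernel decay faster with distance, so each training example influences a smaller neighbourhood.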

In this work, all three kernels were applied to the SVM to determine which one maximizes movement identification accuracy.

Confusion matrix

The confusion matrix is used to calculate the accuracy of an identification process.

In the confusion matrix, each column represents the number of predictions for each class and each row represents the instances of the actual class, so the successes and errors of the model in identifying each movement can be observed [13]. Table 2 shows a confusion matrix for 4 classes: A, B, C and D.

The accuracy (AC) is calculated with Equation 5 [14]:

$$AC = \frac{T}{T + \sum_{i \neq j} F_{ij}} \times 100\% \qquad (5)$$

where $T = V_A + V_B + V_C + V_D$ is the number of correctly identified instances (the diagonal of the confusion matrix), and each $F_{ij}$ with $i \neq j$ ($F_{AB}$, …, $F_{CD}$) is the number of instances of one class classified as another. At 100% accuracy, all the values $F_{AB}$, …, $F_{CD}$ are 0.
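Equation 5 amounts to dividing the trace of the confusion matrix by the sum of all its entries. The NumPy sketch below computes it for a hypothetical 4-class matrix laid out like Table 2; the counts are invented for illustration and are not results of this work.

```python
import numpy as np

def accuracy_percent(cm: np.ndarray) -> float:
    """Equation 5: AC = T / (T + sum of off-diagonal F_ij) * 100.

    The diagonal holds the correct identifications (V_A, V_B, ...); every
    other cell is a misclassification F_ij. The trace and the total are the
    same whether rows hold predicted or actual classes.
    """
    t = np.trace(cm)        # T = V_A + V_B + V_C + V_D
    f_total = cm.sum() - t  # sum of all F_ij with i != j
    return 100.0 * t / (t + f_total)

# Hypothetical 4-class confusion matrix (classes A, B, C, D).
cm = np.array([[28,  1,  1,  0],
               [ 0, 30,  0,  0],
               [ 2,  0, 27,  1],
               [ 0,  0,  0, 30]])
print(f"AC = {accuracy_percent(cm):.2f} %")  # AC = 95.83 %
```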

RESULTS AND DISCUSSION

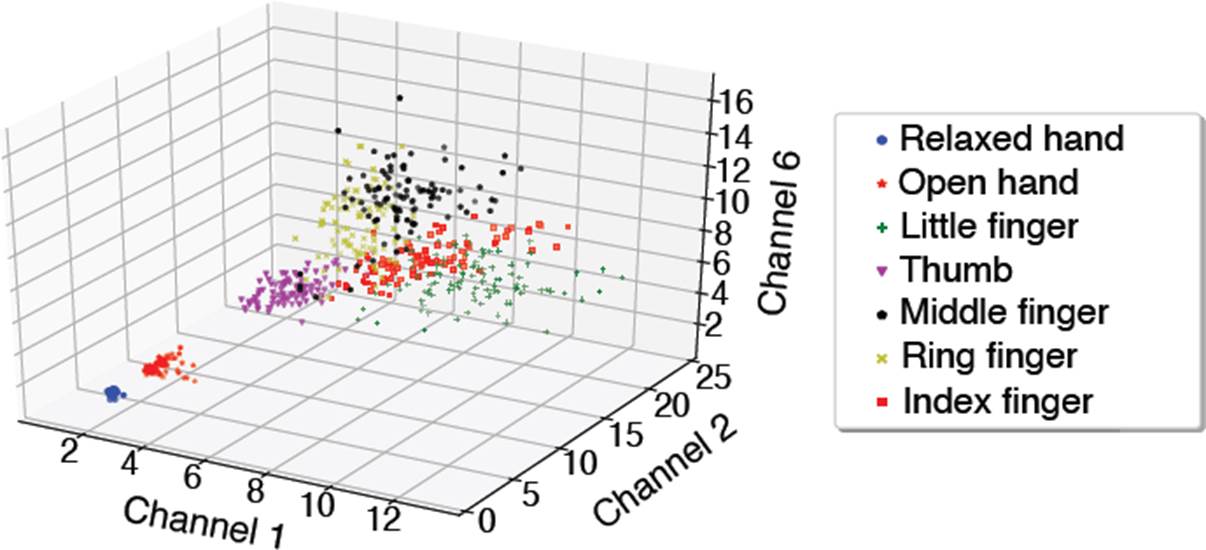

Since each gesture has 8 associated sEMG channels, the ideal would be to examine the relationship between all 8 channels and the gesture, which requires plotting the data in an eight-dimensional space (one dimension per channel). To simplify and visualize this relationship, Figure 3 shows the data of the 7 movements (different colors) in the three-dimensional space formed by channels 1, 2 and 6, using the MAV extractor. The classes appear separated because they occupy different regions of this space. The open-hand and closed-hand gestures are clearly separated from the single-finger gestures, whereas the little-, middle- and ring-finger gestures overlap each other and are therefore the hardest to classify.

The same analysis was carried out for the AUC and RMS extractors, and no significant differences were found between them.

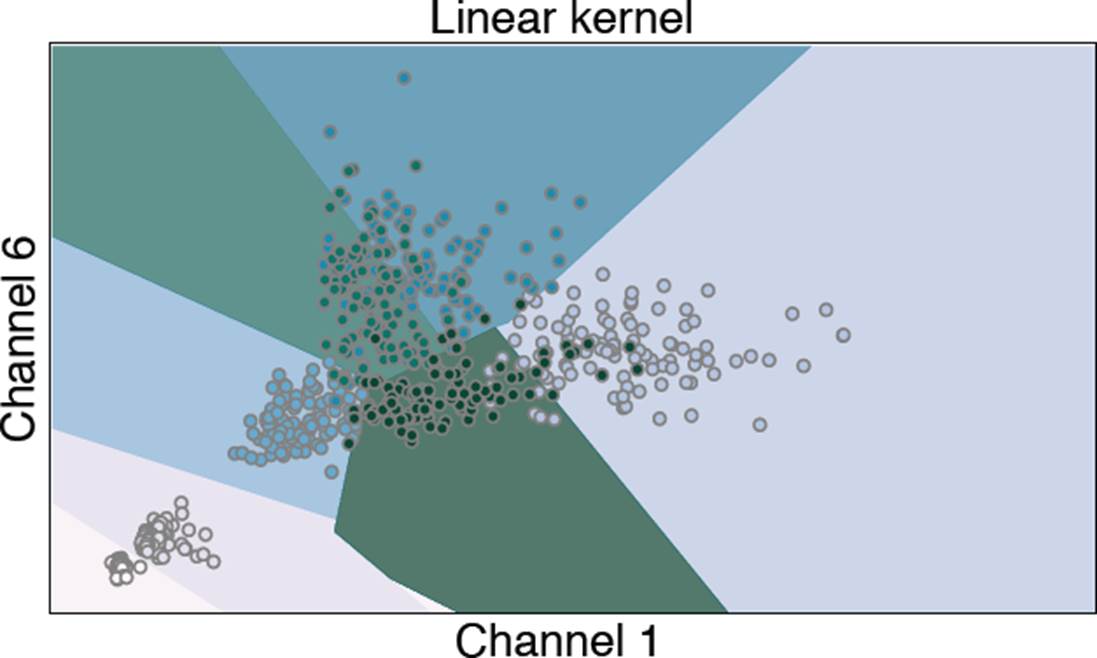

After the MAV, AUC and RMS feature extractors had been applied to the sEMG signals, the SVM classifier was applied. Figure 4 shows the classification performed by a linear-kernel SVM on the MAV features of channels 1 and 6. Some data points are misclassified, since they fall within the region of a different class.

From the classification of the 100 samples per gesture, the corresponding confusion matrix was obtained (Table 3); 70% of the data were used for training and the remaining 30% for testing. Table 3 was obtained with a linear-kernel SVM.

Table 2 Confusion matrix for 4 classes.

| Predicted class / Actual class | A | B | C | D | … |
|---|---|---|---|---|---|
| A | VA | FAB | FAC | FAD | … |
| B | FBA | VB | FBC | FBD | … |
| C | FCA | FCB | VC | FCD | … |
| D | FDA | FDB | FDC | VD | … |
| … | … | … | … | … | … |

Table 3 Confusion matrix for the identification of the 7 movements. Shown for SVM with RMS as feature extraction method.

| | (1) | (2) | (3) | (4) | (5) | (6) | (7) |
|---|---|---|---|---|---|---|---|
| (1) | 30 | 0 | 0 | 0 | 0 | 0 | 0 |
| (2) | 0 | 30 | 0 | 0 | 0 | 0 | 0 |
| (3) | 0 | 0 | 30 | 0 | 0 | 0 | 0 |
| (4) | 0 | 0 | 0 | 30 | 0 | 0 | 0 |
| (5) | 0 | 0 | 0 | 0 | 30 | 0 | 0 |
| (6) | 0 | 0 | 0 | 0 | 0 | 30 | 0 |
| (7) | 0 | 0 | 0 | 0 | 3 | 0 | 27 |

In Table 3, (1) denotes the closed-hand gesture, (2) the open hand, (3) the flexion-extension (FE) of the little finger, (4) FE of the thumb, (5) FE of the middle finger, (6) FE of the ring finger and (7) FE of the index finger. The values on the diagonal are the correct identifications of each class, and the off-diagonal values count how many times the classifier assigned an instance to a class other than its own.

Table 4 shows the accuracy in identifying the 7 gestures. RMS + SVM with the linear kernel, RMS + SVM with the RBF kernel and AUC + SVM with the RBF kernel achieved the highest identification accuracy, 99.52%.

Table 4 Identification accuracy for the gestures under different extractors and SVM kernels.

| Article | Extractor | SVM kernel | Accuracy (%) |
|---|---|---|---|
| This work | MAV | Linear | 98.57 |
| | MAV | Polynomial | 99.04 |
| | MAV | RBF | 98.09 |
| | RMS | Linear | 99.52 |
| | RMS | Polynomial | 99.04 |
| | RMS | RBF | 99.52 |
| | AUC | Linear | 98.57 |
| | AUC | Polynomial | 99.04 |
| | AUC | RBF | 99.52 |
| Tavakoli et al. [2] | MV | Gaussian | 90.00 |
| Krishnan et al. [4] | SSI, Max, Min, MF and MP | Linear | 92.4 and 84.27 |
| Mukhopadhyay et al. [5] | fTDD | Linear | 98.66 |

The RBF hyperparameters were C = 10000.0 and γ = 1e-05 for the MAV features, C = 10000000 and γ = 1e-09 for RMS, and C = 100.0 and γ = 1e-09 for AUC. A polynomial degree of 2 was used for MAV, RMS and AUC.
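The reported settings can be collected into one classifier per kernel and extractor. This is a sketch assuming scikit-learn's `SVC`; the helper name `make_classifiers` is hypothetical. Note that scikit-learn's polynomial kernel is (γ⟨x, y⟩ + coef0)^degree with a default coef0 of 0, so matching the constant $b$ in $(x^{T} y + b)^{n}$ would require setting `coef0=b` and `gamma=1`. Parameters the text does not report are left at scikit-learn defaults, which is an assumption.

```python
from sklearn.svm import SVC

# RBF hyperparameters reported in the text, per feature extractor.
RBF_PARAMS = {
    "MAV": {"C": 10000.0, "gamma": 1e-05},
    "RMS": {"C": 10000000.0, "gamma": 1e-09},
    "AUC": {"C": 100.0, "gamma": 1e-09},
}
POLY_DEGREE = 2  # reported degree for all three extractors

def make_classifiers(extractor: str) -> dict:
    """Return one untrained SVC per kernel for the given extractor.

    Unreported parameters (C for the linear and polynomial kernels,
    gamma and coef0 for the polynomial kernel) keep scikit-learn defaults.
    """
    return {
        "linear": SVC(kernel="linear"),
        "polynomial": SVC(kernel="poly", degree=POLY_DEGREE),
        "rbf": SVC(kernel="rbf", **RBF_PARAMS[extractor]),
    }

for name, clf in make_classifiers("RMS").items():
    params = clf.get_params()
    print(name, params["C"], params["gamma"])
```

Each returned classifier would then be fitted with `clf.fit(X_train, y_train)` on the features of the corresponding extractor.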

As Table 4 shows, the best extractor + classifier combinations were RMS + SVM with the linear kernel, and RMS + SVM and AUC + SVM with the RBF kernel. Overall, there was no significant difference between the feature extraction methods.

According to Table 4, identification accuracy was high in every case, which indicates that the sEMG-360 configuration can improve accuracy. This result was expected, because having 8 channels for each gesture is a clear advantage over the works in the literature (Table 1). As noted in the introduction, Mukhopadhyay et al. [5] used 8 arrays of 3 electrodes to predict 8 gestures with a deep neural network (DNN) classifier and obtained 98.98% accuracy. This high accuracy may be due to the authors' use of 8 electrode arrays, as in this work, together with a DNN classifier. In our case, identification accuracy ranged from 98.09% to 99.52% in all cases. A second factor that increases identification accuracy is the SVM classifier, and a third is the feature extraction method; according to our results, RMS performed slightly better than MAV and AUC. Finally, from a computational point of view, RMS + SVM is much simpler to implement than a DNN classifier.

Therefore, this research shows that sEMG-360 improves identification accuracy even when combined with common feature extraction methods (RMS, MAV, AUC) and an SVM classifier.

CONCLUSIONS

The results show that the RMS extractor in conjunction with the SVM classifier is the combination with the best percentage of accuracy (99.52%) in the identification of the 7 proposed movements of the fingers of the human hand.

The results show that the Myo Armband is a good option for implementing the sEMG-360 method.

The sEMG-360 method improves the identification accuracy of human hand gestures, and the SVM classifier was the second most important factor in improving it.

The time-domain feature extraction methods (MAV, AUC and RMS) give practically the same identification accuracy, with RMS having a slight advantage.

Finally, sEMG-360 around the forearm is a powerful technique because it provides 8 sEMG channels as input data for gesture identification.
