Introduction

Making information available and accessible to anyone is an intrinsic part of the work of Library and Information Science professionals, a principle that, according to Way (2010), was already identified by Ranganathan in The Five Laws of Library Science. What is observed, however, is that most scientists and researchers tend not to make their work available in open access (OA). In fact, as reported by Gargouri et al. (2010), only 15 to 20% of articles published worldwide are self-archived. According to these researchers, even when institutional strategies are adopted to encourage self-archiving, many authors self-archive only when required to do so by the institutions where they are employed or by funding agencies.

Given the fact that the impact of scientific research is usually gauged by the number of citations garnered by a scientific paper, a much-debated question is whether works available through OA are more frequently cited than those available only through non-OA. This hypothesis, known as the “Open Access Citation Advantage” (OACA), argues that the ease of access can increase visibility and, consequently, potentiate citation frequency. The pioneering work in this area by Lawrence (2001) is often cited in this respect. He analyzed citation patterns of conference papers from the field of Computer Science and related areas, verifying that the most recent articles were more likely to be available online. This ease of access contributed, in turn, to the increase in the number of citations these papers receive.

Since the publication of Lawrence’s (2001) results, as Swan (2010) has shown, numerous other studies have been performed with the aim of examining the existence of the OACA in several other knowledge fields (Antelman, 2004; Harnad and Brody, 2004; Atchinson and Bull, 2015). Archambault et al. (2013), for example, verified OACA in 22 knowledge fields. Swan (2010), however, emphasized that there is also research showing there is no advantage in the number of citations for certain scientific fields, such as Economics (Frandsen, 2009 in Swan, 2010).

For the specific case of Library and Information Science, Xia, Myers and Wilhoite (2011) conducted research to verify the existence of the OACA in 20 journals selected from Ulrich's Periodicals Directory and the Journal Citation Reports (JCR). The authors found a positive and statistically significant correlation between the number of citations and OA availability. Furthermore, they verified that the number of citations also increases hand in hand with the number of OA copies available on the web, which is more likely to occur when the paper has multiple authors, who either self-archive the paper in their respective institutional repositories or receive help from librarians or student assistants with the archiving process.

Nevertheless, according to Gargouri et al. (2010), criticisms of the OACA hypothesis point out that these OA citation advantages may merely reflect self-selection bias. In other words, scientists will make only those papers available in OA that they themselves consider to be of higher quality and, therefore, potentially more likely to be cited by the scientific community. Harnad (2005), for example, sought to identify the reasons why publications made available in OA would have a greater advantage in the number of citations. Besides the self-selection bias, the author also indicates: i) the advantage bias of anticipation, i.e., papers whose results are made available in OA from the pre-print stage have an advantage over those self-archived later, since they may be cited in advance of publication; ii) use advantage bias, in which OA papers tend to be downloaded more than non-OA papers and, therefore, tend to be more frequently cited; and iii) quality advantage bias, when papers are genuinely of high quality and, consequently, more frequently cited than the others. In this case, unlike self-selection bias, it is not only the author who judges the value of the work highly, but also the author's peers. Harnad (2005) and Gargouri et al. (2010) argue that the criticism based on self-selection bias no longer applies when 100% of a researcher's publications are in OA.

In contrast, Haustein et al. (2013) and Priem, Groth and Taraborelli (2012) believe that the assessment of a given paper’s impact should move beyond analysis of formal citation. The relatively new sub-field of research metrics, Altmetrics, has emerged to accompany the growing diversity of channels mentioning scientific research outputs, such as posts on social media, or participation in academic collaboration networks (Galligan and Dyas-Correia, 2013; Kousha and Thelwall, 2007).

For Wang et al. (2015), Altmetrics data can also be used as a supplementary indicator in research on the OACA. These authors compare OA papers published in Nature Communications against those published in non-OA journals, in terms of the average number of citations and views received, and the discussions the works prompt on social networks such as Twitter and Facebook. Their analysis confirms the citation advantage hypothesis for journal papers available in OA, verifying that this advantage also extends to Altmetrics data. That is, OA papers tend to receive more attention on social networks than non-OA papers.

Poplašen and Grgić (2016) sought to determine the existence of an “OA Altmetrics Advantage.” To do so, they used the list of top 100 papers on Altmetric.com for the year 2014, comparing non-OA with OA papers, as identified on the website. For their analysis, the authors verified the average, minimum, maximum and median values of each series for open and non-open access papers, finding that “OA and non-OA articles have similar results - average altmetric score is slightly higher for OA articles, but the median slightly higher for non-OA articles” (p. 457). For this reason, the authors, on the basis of their sample, were unable to determine whether or not publishing an article in OA afforded any advantage.

In this paper, we report the results of research on the existence of the OACA and of an “OA Altmetrics Advantage” in the area of Information Science, by examining whether the free and open availability of articles to the scientific community contributes to frequency of citation in other scientific papers and/or mentions on social networks.

Method

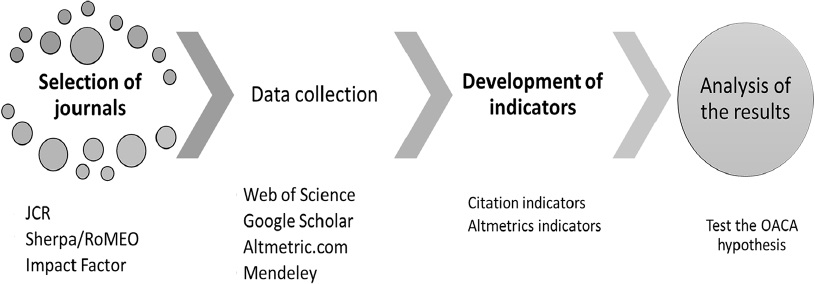

Bibliometric and Altmetrics indicators were developed using the procedure shown in Figure 1.

The first stage involved the selection of the journals to be studied. We opted to use the journals listed under the category Information Science & Library Science in the 2015 Social Sciences edition of the Journal Citation Reports (JCR), published by Thomson Reuters. This choice was motivated by the fact that JCR is linked to the Web of Science bibliographic database, which is acknowledged for indexing scientific journals of scholarly relevance. Moreover, it is traditionally used in bibliometric studies. From this list, and using the Sherpa-RoMEO database (http://www.sherpa.ac.uk/romeo/index.php), we noted journal policies with regard to permitting authors to provide OA to their work in an institutional repository or on a personal webpage (the so-called “green road” to OA). We were thereby able to identify those journals distributed exclusively through subscription access, those which allow OA and those using a hybrid publishing model.

Given that we were unable to find a free means of automating the Altmetrics data collection, we set up filters to select a manageable number of journals for data collection. The first selection made was from those journals classified as hybrid, that is, subscription access journals that also offer authors the choice of immediate publication in OA if they pay the article processing charges (APCs). We also decided to limit our collection to articles published in 2013, allowing the time needed for article citations to be indexed and subsequently entered in the indexing database.

It should be noted that, depending on the policies of each journal, publishers can authorize the deposit of different versions of the paper submitted. In addition, distinct embargo periods can be stipulated, after which authors can self-archive their papers in repositories. Therefore, we accessed the website of each journal to check the embargo period between the publication date and online deposit in an OA institutional repository. In this way, we chose journals whose embargo periods varied between 0 and 12 months.

Finally, we applied a journal selection filter, choosing only those journals with an impact factor (IF) of 1.000 or above. Table 1 details the characteristics of the set of journals analyzed in this study, showing the IF, the required embargo period before self-archiving, the total number of articles and the percentage of papers available in OA as of April 21, 2017.

Table 1 Details of selected journals

| Journal | Impact Factor | Embargo period (months) | Total no. of published articles | No. of OA articles in journal (APC) | No. of green road OA articles | % OA total (APC + green road) |
|---|---|---|---|---|---|---|
| Aslib Proceedings* | 1.147 | 0 | 33 | 0 | 12 | 36.4 % |
| European Journal of Information Systems | 2.892 | 12 | 35 | 0 | 10 | 28.6 % |
| Information Society | 1.333 | 0 | 22 | 0 | 6 | 27.3 % |
| Information Systems Journal | 2.522 | 12 | 26 | 0 | 10 | 38.5 % |
| Information Systems Research | 3.047 | 12 | 58 | 0 | 30 | 51.7 % |
| Information, Technology & People | 1.150 | 0 | 18 | 1 | 10 | 61.1 % |
| Int. Journal of Computer-Supported Collab. Learning | 2.200 | 12 | 19 | 0 | 7 | 36.8 % |
| International Journal of Geographical Inf. Science | 2.065 | 12 | 122 | 1 | 39 | 32.8 % |
| Journal of Academic Librarianship | 1.150 | 12 | 71 | 1 | 37 | 53.5 % |
| Journal of Documentation | 1.063 | 0 | 40 | 0 | 20 | 50.0 % |
| Journal of Librarianship and Information Science | 1.239 | 12 | 23 | 0 | 16 | 69.6 % |
| Library & Information Science Research | 1.230 | 12 | 35 | 0 | 15 | 42.9 % |
| Online Information Review | 1.152 | 0 | 48 | 0 | 18 | 37.5 % |
| Program - Electronic Library and Information Systems | 1.000 | 0 | 22 | 1 | 13 | 63.6 % |
| Scientometrics | 2.084 | 12 | 249 | 9 | 126 | 54.2 % |
| Social Science Computer Review | 1.525 | 12 | 51 | 3 | 31 | 66.7 % |
| Total | - | - | 872 | 16 | 400 | 47.7 % |

Source: Data from the research.
\* Currently: Aslib Journal of Information Management.
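The last column of Table 1 can be recomputed from the counts in the other columns. The following is a minimal sketch of that arithmetic, using three rows copied from the table; the function name `oa_share` is an illustrative choice and not part of the original study.

```python
# Counts copied from three rows of Table 1:
# (total articles, OA via APC, OA via green road).
table1_rows = {
    "Aslib Proceedings": (33, 0, 12),
    "Scientometrics": (249, 9, 126),
    "Total": (872, 16, 400),
}


def oa_share(total, apc, green):
    """Percentage of articles available in OA by either route."""
    return 100 * (apc + green) / total


for journal, (total, apc, green) in table1_rows.items():
    print(f"{journal}: {oa_share(total, apc, green):.1f} %")
```

Rounded to one decimal place, these values agree with the percentages reported in the table.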

Bibliographic data collection of published articles for these journals was performed in the Web of Science's Core Collection on July 27, 2016 and on April 21, 2017. The number of citations each article garnered was checked in the Citation Report, accessed through the same database. We also collected data from three other sources, Google Scholar, Altmetric.com and Mendeley, in order to assess which of these sources best captures the impact that these open access articles have on social networks. To that end, we applied the Altmetric Bookmarklet, a free app provided by the Altmetric.com website, to each article. For data from Mendeley, we noted the number of readers who had downloaded the reference data of the articles under analysis. We first carried out the Altmetrics data collection between July 27 and 30, 2016. The second data collection was carried out between April 21 and 22, 2017.

We accessed each journal site individually to verify which articles were available in OA. In line with Antelman (2004), in order to ascertain whether articles are accessible via the green road, we performed a search in Google Scholar for articles deposited in OA repositories, on personal webpages of authors and in other sources in order to determine the extent of full-text and barrier-free access to these papers. Interestingly, at the time of the second data collection, there were 58 green road OA articles not originally available in OA at the time of the first data collection. Evidently, these articles were archived in a repository at some point between the two data collection moments.

For articles located in OA, we logged data on the version available, i.e., the pre-print or the post-print. We should emphasize that if copies of the article existed on more than one website, we counted only the first result brought up by the search engine. Thus, there might have been cases in which the article was available simultaneously in the institutional repository and on the author's personal webpage. We did not check each case because, at this point in the research, we were interested only in whether the article was openly and freely available. In this sense, we took our cue from Willinsky (2006), who observed that an alternative to the creation and maintenance of digital repositories by institutions is, indeed, the posting of publications on researchers' personal webpages, on webpages within the university or on a website operated by the research group. For this reason, our study employs the term Institutional website to refer to webpages hosting the freely downloadable article, whether an institutional repository or a webpage on a university website.

Lastly, in order to verify the OACA hypothesis and in view of observations by Craig et al. (2007) regarding potential methodological issues, we followed the method used by Wang et al. (2015) and Poplašen and Grgić (2016) to calculate the average number of citations and mentions on the social web garnered by OA articles and compare these against those obtained by the non-OA articles. The aim of this stage of the data collection is to determine whether the OACA hypothesis can be confirmed for the Altmetrics data collected from sources with a much wider reach than the Web of Science. Moreover, this analysis was performed individually for each data collection set, and the two analyses were compared against each other in order to verify whether OA contributes to a faster increase in the average citation value compared to non-OA articles. We also separately assessed open access offered through the payment of APC and through the self-archiving of articles in OA by the authors.
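The comparison described above reduces to computing the same four descriptive statistics (average, minimum, maximum, median) for each access group. The sketch below illustrates this with Python's standard `statistics` module; the citation counts are hypothetical placeholders, not data from this study, and the function name `summarize` is an assumption made for illustration.

```python
from statistics import mean, median


def summarize(counts):
    """Return average, min, max and median for one group of articles."""
    return {
        "average": round(mean(counts), 2),
        "min": min(counts),
        "max": max(counts),
        "median": median(counts),
    }


# Hypothetical citation counts per article, grouped by access type.
citations = {
    "non-OA": [0, 2, 3, 5, 10],
    "APC": [1, 6, 8],
    "green road": [0, 3, 4, 9],
}

summary = {group: summarize(counts) for group, counts in citations.items()}
for group, stats in summary.items():
    print(group, stats)
```

The same function could be applied to each indicator (citations, Mendeley readers, Altmetric score) and to each data collection separately.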

Results and discussion

As seen in Figure 2, the post-print versions of the articles (61.3%) were the versions most frequently found on Google Scholar. This may be a consequence of the journals' open access archiving policies, which allow self-archiving of either the pre-print or the post-print version of the published paper. A trend was also observed regarding the use of institutional websites and academic social networks for self-archiving, which confirms the relevance of institutional repositories for disseminating the knowledge generated in a given institution and for leveraging scientific progress (Harnad, 2007; Swan, 2010; Suber, 2012). Figure 2 also shows that only 3.8% of the total number of OA articles analyzed are immediately available in open access in journals through the payment of APCs.

The results show that, of the 416 open access (OA) articles in the second data collection, 385 had at least one citation, which represents a citedness of 92.5%. For non-OA articles, the citedness rate is 90.8% (414 out of 456). At least for the number of citations, then, the two values are extremely close. For comparison purposes, the rate of mentions presented by the Altmetric.com variable is only 48.3% for OA articles and 35.1% for non-OA articles. Although this indicator shows that OA seems to achieve at least one social web reference for a greater number of articles, when we compare this data against the citation rate, we find that only a minority of articles exhibit this behavior. Tables 2 and 3 show the results of the calculations carried out to verify the OACA hypothesis for the first and second data collections.
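Citedness here is the share of articles with at least one citation. As a minimal sketch, the rates can be reproduced from the counts reported above; the helper names are illustrative assumptions, and the per-article list in the second function is hypothetical.

```python
def citedness(cited, total):
    """Percentage of articles that received at least one citation."""
    return 100 * cited / total


def citedness_from_counts(counts):
    """Same rate, starting from a list of per-article citation counts."""
    cited = sum(1 for c in counts if c > 0)
    return citedness(cited, len(counts))


# Counts reported in the text for the second data collection.
oa_rate = citedness(385, 416)
non_oa_rate = citedness(414, 456)
print(f"OA: {oa_rate:.1f}%  non-OA: {non_oa_rate:.1f}%")

# Hypothetical per-article counts, for illustration only.
example_rate = citedness_from_counts([0, 1, 4, 0, 7])
```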

Table 2 Statistical averages for the first dataset

| Information source | Function | Non-open access | Open access (APC) | Open access (green road) |
|---|---|---|---|---|
| Web of Science (no. of citations) | average | 3.95 | 6.94 | 4.99 |
| | min | 0 | 0 | 0 |
| | max | 42 | 25 | 39 |
| | median | 3 | 5 | 3 |
| Google Scholar (no. of citations) | average | 12.58 | 20.38 | 16.79 |
| | min | 0 | 1 | 0 |
| | max | 222 | 96 | 232 |
| | median | 8 | 15 | 11 |
| Mendeley (no. of readers) | average | 29.70 | 46.69 | 35.18 |
| | min | 1 | 12 | 2 |
| | max | 184 | 104 | 214 |
| | median | 24 | 44 | 27 |
| Altmetric.com (donut score) | average | 1.13 | 4.31 | 2.47 |
| | min | 0 | 0 | 0 |
| | max | 47 | 25 | 61 |
| | median | 0 | 1 | 0 |

Table 3 Statistical averages for the second collected dataset

| Information source | Function | Non-open access | Open access (APC) | Open access (green road) |
|---|---|---|---|---|
| Web of Science (no. of citations) | average | 6.07 | 8.69 | 7.39 |
| | min | 0 | 0 | 0 |
| | max | 82 | 40 | 74 |
| | median | 4 | 6.5 | 5 |
| Google Scholar (no. of citations) | average | 17.16 | 22.00 | 22.40 |
| | min | 0 | 5 | 0 |
| | max | 347 | 122 | 332 |
| | median | 12 | 15.5 | 15 |
| Mendeley (no. of readers) | average | 35.44 | 53.38 | 43.16 |
| | min | 1 | 14 | 2 |
| | max | 229 | 127 | 244 |
| | median | 29 | 46.5 | 33 |
| Altmetric.com (donut score) | average | 1.24 | 4.75 | 2.43 |
| | min | 0 | 0 | 0 |
| | max | 47 | 25 | 57 |
| | median | 0 | 2 | 0 |

According to Tables 2 and 3, we can infer that OA offers some advantage in the number of citations for journals in the field of Information Science, since the averages of OA articles located in both Web of Science and Google Scholar are higher than those for non-OA articles. This information is valid for the two collection periods. It can also be observed in the similar maximum and median values exhibited for green road OA articles and non-OA papers.

Additionally, the Altmetrics data also corroborate the OACA hypothesis. For Altmetric.com and the Mendeley data, we observe that OA articles via APC are those that show the greatest proportional advantage. This suggests that the impact of an article on the social web can be improved by making it immediately available, i.e., providing OA, on the website of the journal where it was published. Figures 3 and 4 illustrate the mean values obtained for each of the information sources analyzed.

Figures 3 and 4 show that for the dataset analyzed for the field of Information Science, open access via the payment of article processing charges (APCs), in most cases, leads to more citations, a greater number of mentions on the social web and a greater number of readers on Mendeley when compared to papers self-archived by authors.
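The proportional advantage discussed above can be expressed as the relative difference between an OA mean and the corresponding non-OA mean. The sketch below applies this to the averages from the second data collection (Table 3); the formula and the name `relative_advantage` are one reasonable way to quantify the comparison, assumed here for illustration, and not necessarily the exact calculation used by the authors.

```python
# Averages copied from Table 3 (second data collection).
second_collection_means = {
    "Web of Science": {"non_oa": 6.07, "apc": 8.69, "green": 7.39},
    "Google Scholar": {"non_oa": 17.16, "apc": 22.00, "green": 22.40},
    "Mendeley": {"non_oa": 35.44, "apc": 53.38, "green": 43.16},
    "Altmetric.com": {"non_oa": 1.24, "apc": 4.75, "green": 2.43},
}


def relative_advantage(oa_mean, non_oa_mean):
    """Percentage by which an OA mean exceeds the non-OA mean."""
    return 100 * (oa_mean - non_oa_mean) / non_oa_mean


advantages = {
    source: {
        route: relative_advantage(means[route], means["non_oa"])
        for route in ("apc", "green")
    }
    for source, means in second_collection_means.items()
}

for source, by_route in advantages.items():
    print(f"{source}: APC {by_route['apc']:+.1f}%, "
          f"green road {by_route['green']:+.1f}%")
```

Under this definition, the APC route shows the larger relative advantage for both Mendeley and Altmetric.com, which matches the observation in the text.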

Table 4, on the other hand, shows the percentage growth rate of the means of the variables analyzed in this study. As can be observed, non-open access articles exhibited the largest percentage growth in citation averages on Web of Science and Google Scholar. It should also be noted that the average Altmetric Score for green road open access articles declines from the first data set to the second. This could be related to the fact that, in the second collection, some of the articles obtained lower scores than in the first collection. On this point, Haustein, Bowman and Costas (2015) explain that there is uncertainty regarding the consistency of Altmetrics data, because they are linked to platforms that are constantly changing and whose users are so diverse that they have not yet been completely understood.

Table 4 Average percentage variation between the two data collections

| Information source | Non-open access | Open access (APC) | Open access (green road) |
|---|---|---|---|
| Web of Science | 53.8% | 25.2% | 48.1% |
| Google Scholar | 36.4% | 8.0% | 33.4% |
| Mendeley | 19.3% | 14.3% | 22.7% |
| Altmetric.com | 9.5% | 10.1% | -1.9% |
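The variation in Table 4 is the percentage change of each mean between the first and second data collections. A minimal sketch of that calculation follows, using the Web of Science and Altmetric.com averages from Tables 2 and 3. Because those published means are already rounded to two decimals, the recomputed values can differ from Table 4 by a few tenths of a percentage point; the function name `pct_change` is an illustrative assumption.

```python
def pct_change(first, second):
    """Percentage change from the first to the second collection."""
    return 100 * (second - first) / first


# (first collection mean, second collection mean) from Tables 2 and 3.
wos_means = {
    "non_oa": (3.95, 6.07),
    "apc": (6.94, 8.69),
    "green": (4.99, 7.39),
}
altmetric_green = (2.47, 2.43)

wos_growth = {group: pct_change(*pair) for group, pair in wos_means.items()}
altmetric_green_growth = pct_change(*altmetric_green)

for group, growth in wos_growth.items():
    print(f"Web of Science, {group}: {growth:+.1f}%")
print(f"Altmetric.com, green: {altmetric_green_growth:+.1f}%")
```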

Our results showed that, regardless of whether an article is OA or non-OA, the largest increases in absolute numbers of total citations obtained from the Web of Science were attained by articles that had already reached a large number of citations in the first data collection. This also occurred with Google Scholar citation values and the number of readers in Mendeley. This phenomenon, in which success seems to lead to more success, was also observed by Solla Price (1976), who called it the “Cumulative Advantage Distribution.” In an argument analogous to the “Matthew Effect” described by Merton (1968), Solla Price (1976) holds that articles garnering a large number of citations are more likely to be cited than those with few citations. Similarly, a journal that is constantly used as a reference for a particular subject area tends to be consulted more often than those less frequently used or those that are just emerging.

In the case of Altmetric.com, only 76 articles (8.7%) exhibited changes in scores, and it was not possible to identify a pattern to justify greater or smaller increases or decreases in the donut score. The low number of articles exhibiting changes in Altmetric Score shows that this indicator, obtained from mentions on social networks, seems to be more stable than the others, insofar as it captures only the most immediate impact of a publication. Therefore, after a period immediately following its publication, an article is unlikely to be mentioned again on a social network.

Final considerations

Through the elaboration and analysis of bibliometric and altmetrics indicators, this research aimed to examine the impact of open access (OA) on journal articles in the area of Information Science. The study allows us to infer that archiving an article in open access seems to contribute to an advantage in the potential number of citations and mentions in social media. The study, therefore, corroborates results found regarding the Open Access Citation Advantage (OACA) for other areas of knowledge, while showing that these advantages also exist in terms of mentions in social networks. As such, the viability of an “OA Altmetrics Advantage” is supported.

In this way, we see that OA can contribute to a greater diffusion of knowledge within the scientific community and potentiate the number of citations scientists obtain, something that can positively influence the impact of their research groups or institutions. “OA is not just about public access rights or the general dissemination of knowledge: It is about increasing the impact and thereby the progress of research itself” (Gargouri et al., 2010: 1).

Although articles made available in OA immediately by the journal, via the payment of the article processing charge (APC), exhibited the greatest percentage advantage regarding their number of citations and social network mentions, we cannot indisputably assert that this model is the best in terms of returns to the authors in the form of citations, since only 16 of the 872 articles were made available in OA in this way. Additionally, the literature in this area suggests that the OACA exists largely because scientists tend to make their better-quality articles available in open access. As such, these articles already tend to receive more citations. This phenomenon was also demonstrated in our research, since the articles in the second sample with the highest citation increases were also those that had already reached a large number of citations in the first data collection. Therefore, it is important to acknowledge that while OA drives higher citation numbers, the quality of the article is also a significant factor.

In addition to testing the OACA hypothesis, we verified its existence in two time periods in order to examine the influence of OA over time. Despite their lower average values, non-OA articles showed a more rapid increase in the number of citations. Furthermore, the Altmetrics data remained practically stable between the two data collections, regardless of which set was analyzed. This demonstrates that we must examine what altmetric indicators actually measure. We hope we have contributed to this discussion by showing that altmetric indicators on the whole tend to reflect the more immediate and short-term repercussion of publications, and that it is unlikely that an article will be mentioned again in social networks after the period immediately following publication. It should be noted, however, that because no free, automated method of obtaining the Altmetrics data was available, it was difficult to perform the study on a large sample, which limits the research to providing only a partial view of Altmetrics in the field of Information Science. Further research could verify the existence of a correlation in the data over shorter time intervals, whereby it could be ascertained whether articles with a substantial number of social media mentions today go on to receive a large number of citations in the future.

Finally, we emphasize that more than half of the articles in our sample were not available in OA, either by APC or by the green road. This aligns with Gargouri et al. (2010), who observed that not all authors self-archive their publications after expiration of the embargo period, which means subscription to the journal is still required to access a large portion of the literature. From this standpoint, our results could provide support to decision-makers at government and funding agencies to act more effectively in formulating future science and technology policies that encourage making scientific information available to any interested party and, in this way, contribute to the achievement of the goals pursued by OA. Such policies might include, for example, the establishment of broad institutional repositories providing access to more than theses and dissertations, or automatic OA publication of articles after expiration of embargo periods. Such measures would do much to contribute to the consolidation of OA in the field of Information Science.
