Revista mexicana de análisis de la conducta

versión impresa ISSN 0185-4534

Rev. mex. anál. conducta vol.34 no.2 México dic. 2008

Effects of the Contiguity Between the Extinction and the Reinforcement Components in Observing–response Procedures1

Efectos de la contigüidad entre los componentes de extinción y de reforzamiento en un procedimiento de respuestas de observación

Rogelio Escobar and Carlos A. Bruner2

Universidad Nacional Autónoma de México

2 Address correspondence to:

Rogelio Escobar, Office 2153,

Department of Psychology. West Virginia University.

Morgantown, WV 26506–6040.

Office: (304) 293–2001, Fax: (304) 293–6606.

Email: Rogelio.Escobar@mail.wvu.edu. or

Laboratorio de Condicionamiento Operante,

Facultad de Psicología UNAM. Av. Universidad 3004

Cd. Universitaria. México, D.F. 04510 (rescobar@servidor.unam.mx).

Received: 20 October, 2008

Reviewed: 4 November, 2008

Accepted: 23 December, 2008

Abstract

The role of the stimulus correlated with extinction (S–) in observing–response procedures has been ambiguous. Although the S– is associated with extinction, some studies have demonstrated that it effectively sustains observing behavior. To explore the conditions under which the S– functions as a conditioned reinforcer, the present experiment determined whether observing responses during an extinction component are controlled by the temporal contiguity between S– presentation and the reinforcement component. Presses on one lever were reinforced on a mixed random–interval extinction schedule of reinforcement. Pressing a second lever produced 5–s stimuli correlated with the components of the mixed schedule. For one group of three rats, after observing responses were established, a no–consequence interval (NCI) was added between the end of the extinction component and the beginning of the reinforcement component. For the other three rats, the NCI was added between the end of the reinforcement component and the beginning of the extinction component. Observing responses during the extinction component decreased only when the NCI was added at the end of the extinction component. It was concluded that although the S– is nominally correlated with extinction, it may function as a conditioned reinforcer because it is intermittently paired with the reinforcement component.

Key words: Observing responses, conditioned reinforcement, lever pressing, stimuli pairing, rats.

Resumen

El papel del estímulo correlacionado con extinción (E–) en los procedimientos de respuestas de observación ha sido ambiguo. A pesar de que el E– está asociado con un componente de extinción, algunos estudios han mostrado que mantiene consistentemente las respuestas de observación. Para explorar las condiciones responsables de que el E– funcione como un reforzador condicionado, el presente experimento determinó si las respuestas de observación durante el componente de extinción están controladas por la contigüidad temporal entre la presentación del E– y el componente de reforzamiento. Se reforzaron las presiones en una palanca conforme a un programa de reforzamiento mixto intervalo al azar extinción. Las presiones en una segunda palanca resultaron en estímulos correlacionados con los componentes del programa mixto durante 5 s. Para un grupo de tres ratas, después de establecer las respuestas de observación, se añadió un intervalo sin consecuencias (ISC) entre el final del componente de extinción y el inicio del componente de reforzamiento. Para otras tres ratas el ISC se añadió entre el final del componente de reforzamiento y el inicio del componente de extinción. Las respuestas de observación durante el componente de extinción disminuyeron solamente cuando el ISC se añadió al final del componente de extinción. Se concluyó que a pesar de que el E– nominalmente está correlacionado con extinción, puede funcionar como un reforzador condicionado debido a su apareamiento intermitente con el componente de reforzamiento.

Palabras Clave: Respuestas de observación, reforzamiento condicionado, presiones a la palanca, apareamiento entre estímulos, ratas.

An observing response is an operant that exposes an organism to discriminative stimuli without affecting the availability of primary reinforcement. In the first study of observing behavior, Wyckoff (1952, 1969) used an experimental chamber equipped with a response key, a food tray, and a pedal located on the floor below the response key. He reinforced key pecking in one group of pigeons on a mixed fixed–interval (FI) 30 s extinction (EXT) 30 s schedule in which the two components alternated randomly. The response key was illuminated with a white light during both FI and EXT unless the pigeons stepped on the pedal, which changed the light to red during FI and to green during EXT. Whenever the pigeons stepped off the pedal, the response–key light changed back to white. For another group of pigeons, the color of the response key did not correlate with the components of the mixed schedule. Wyckoff recorded the accumulated durations of each of the stimuli and reported that the pigeons produced the discriminative stimuli during more of the session time when the color of the response key correlated with the components of the mixed schedule than when it did not. It can be concluded that the stimuli functioned as conditioned reinforcers for observing.

One advantage of observing procedures over other techniques for studying conditioned reinforcement is that the occurrence of observing behavior and stimulus presentation does not interfere with the schedule that controls the delivery of the primary reinforcer. Given this advantage, several studies have used the observing procedure to study conditioned reinforcement (Lieving, Reilly, & Lattal, 2006; Shahan, Podlesnik, & Jimenez–Gomez, 2006). However, the fact that these procedures arrange the presentation of stimuli correlated with both the reinforcement component (S+) and the extinction component (S–) has raised issues of interpretation.

For example, Lieberman (1972) exposed monkeys to a mixed variable–ratio (VR) 50 EXT schedule that produced food reinforcement for pressing one lever. In subsequent conditions, pressing a second lever produced stimuli correlated with both components of the mixed schedule (S+ and S–) or only with the reinforcement component (S+ only). He found that observing–response rate was lower when only the S+ was presented than when both the S+ and the S– were presented. He concluded that the S– functioned as a positive conditioned reinforcer. Similar findings were reported by Perone and Baron (1980) and Perone and Kaminski (1992), who found that removing the S– presentation decreased the frequency of observing behavior in human subjects (factory workers and college students, respectively). As Perone and Kaminski stated, these results posed a challenge to a theory of conditioned reinforcement based on the pairing of an originally neutral stimulus with the primary reinforcer (cf. Kelleher & Gollub, 1962).

According to an associative conception of conditioned reinforcement, in observing procedures the S+, which is correlated with primary reinforcer delivery, should function as a conditioned reinforcer. The role of the S– is more complex: if it is assumed that the S– is associated with a reduction in reinforcement rate, it should function as an aversive stimulus (see Dinsmoor, 1983, and Fantino, 1977, for reviews). Another interpretation is that observing responses are sustained exclusively by the S+ and that S– presentations are produced accidentally while the observing responses are emitted (Branch, 1983). In either case, the S– could not function as a positive conditioned reinforcer.

Several studies showed that the S– did not function as a conditioned reinforcer. For example, Mueller and Dinsmoor (1984) showed that removing the S–, as in Lieberman's (1972) procedure, did not affect observing–response rate. In another study, Dinsmoor, Mulvaney, and Jwaideh (1981) reported that if a second peck on an observing key turns off the stimulus associated with the schedule component in effect, pigeons turn off the S– more rapidly than the S+ (see also Auge, 1974; Case, Fantino, & Wixted, 1985; Fantino & Case, 1983). Although several studies supported an explanation of observing in terms of an associative conception of conditioned reinforcement, it is not clear why some studies reported that the S–, rather than functioning as an aversive or a neutral stimulus, functioned as a conditioned reinforcer.

One explanation for the fact that the S– functioned as a positive conditioned reinforcer in some studies is that the S– is accidentally associated with the reinforcement component. For example, Kelleher, Riddle, and Cook (1962) exposed pigeons to a mixed variable–ratio (VR) 100 EXT schedule on one key. Concurrently, pecks on a second key produced 30–s stimuli correlated with the components of the mixed schedule. Kelleher et al. reported that observing responses occurred in repetitive patterns consisting of successive observing responses producing S–s. Once an S+ was presented, food responses replaced observing responses until several responses went unreinforced; after that, successive observing responses occurred again. Escobar and Bruner (2002) replicated this pattern of observing using rats as subjects and an observing procedure consisting of a mixed random–interval (RI) EXT schedule of food reinforcement on one lever in combination with 5–s stimuli produced on a second lever. In both experiments, at least intermittently, the S– was followed closely by the S+ presentation and even by the reinforcer delivery.

Given the occasional contiguity between the S– and the S+ or reinforcer delivery, it is conceivable that the S–, rather than signaling the EXT component, may have signaled the occurrence of the S+ and reinforcer delivery, thus functioning as a conditioned reinforcer for observing responses occurring during the EXT component. The purpose of the present experiment was to determine whether the occasional contiguity between the S– and the reinforcement component affects observing responses during the extinction component of an observing procedure.

A strategy to avoid the contiguity between the S– and the reinforcement component using the typical observing procedure is to add an interval without programmed consequences between the end of the EXT component and the beginning of the subsequent reinforcement component of the mixed schedule of reinforcement. This strategy was followed to determine whether increasing the minimum interval between the S– and the reinforcement component would affect observing rate during the extinction component. If observing responses during the extinction component are indeed related to the reinforcement component, adding an interval between the extinction and the reinforcement components should result in a decrease in observing responses during the extinction component.

METHOD

Subjects

The subjects were six male Wistar rats kept at 80% of their ad–lib weight. The rats had previous experience with an observing–response procedure involving multiple and mixed RI EXT schedules of reinforcement but had never been exposed to periods in which pressing the observing lever had no programmed consequences. The rats were housed in individual home cages with free access to water.

Apparatus

Three experimental chambers (Med Associates Inc. model ENV–001) equipped with a food tray located at the center of the front panel and two levers, one on each side of the food tray, were used. A minimum force of 0.15 N was required to operate the switch of the levers. The chambers were also equipped with a house light located on the rear panel, a sonalert (Mallory SC 628) that produced a 2900 Hz 70 dB tone, and one bulb with a plastic cover that produced a diffuse light above each lever. A pellet dispenser (Med Associates Inc. model ENV–203) dropped into the food tray 25–mg food pellets that were made by remolding pulverized rat food. Each chamber was placed within a sound–attenuating cubicle equipped with a white–noise generator and a fan. The experiment was controlled and data were recorded from an adjacent room, using an IBM compatible computer through an interface (Med Associates Inc. model SG–503) using Med–PC 2.0 software.

Procedure

All the rats were exposed directly to a mixed RI 20 s EXT reinforcement schedule on the left lever. The RI component duration was 20 s and the EXT component duration was 60 s. These component durations were selected to guarantee a high and stable rate of observing responses during the EXT component. In previous studies it was reported that the rate of observing was relatively high during the EXT component using such asymmetric durations of the components (Escobar & Bruner, 2002). The order of the components within each pair of reinforcement and EXT components was random; thus, no more than two components of the same type could occur in succession in consecutive pairs. The RI schedule reinforced with food the first lever press after a variable interval of time (mean of 20 s) had elapsed. The time interval was arranged by setting the probability of reinforcement assignment at 0.25 at the end of each consecutive 5–s interval. Each press on the right lever (observing lever) produced for 5 s a steady tone during the EXT component (S–) or a blinking light during the reinforcement component (S+). A tone was selected as the S– for all rats to reduce the probability of unrecorded escape responses during S– presentations that could be favored with a light as the S– (i.e., had a light been aversive, the rats might have turned away to escape from its presentation; see Shull, 1983). If the component ended before the termination of a stimulus, the remainder of the stimulus was cancelled. Presses on the observing lever during the stimuli had no programmed consequences. No changeover delay was scheduled, to avoid non–systematic increases in the interval between an S– and reinforcer delivery beyond those scheduled in the subsequent condition. Each session consisted of 30 pairs of reinforcement and EXT components and this condition was in effect for 30 sessions.
The particular values of the schedules chosen in the present experiment were based on our previous experiments, in which we found that these values maintained a sufficient and relatively stable rate of observing responses.
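The RI arrangement described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Med–PC program used in the experiment: the function names are hypothetical, and the sketch ignores lever presses and component boundaries, so it models only the time until a reinforcer is assigned. With an assignment probability of 0.25 at the end of each 5–s interval, the number of intervals until assignment is geometrically distributed, which gives the stated mean of 5 / 0.25 = 20 s.

```python
import random


def expected_interval(p=0.25, tick_s=5):
    """Mean time (s) until reinforcement is assigned.

    The number of ticks until assignment is geometric with mean 1/p.
    """
    return tick_s / p


def ri_intervals(n, p=0.25, tick_s=5, rng=None):
    """Draw n assignment times (s) for a random-interval schedule.

    At the end of each consecutive tick_s-second interval, reinforcement
    is assigned with probability p (hypothetical helper, for illustration).
    """
    rng = rng or random.Random()
    intervals = []
    for _ in range(n):
        ticks = 1
        # Keep adding ticks until the assignment "succeeds".
        while rng.random() >= p:
            ticks += 1
        intervals.append(ticks * tick_s)
    return intervals


if __name__ == "__main__":
    sample = ri_intervals(10000, rng=random.Random(0))
    print(expected_interval(), sum(sample) / len(sample))
```

The simulated mean should approach the analytic value as the number of draws grows; the obtained interreinforcement intervals in a session would be somewhat longer, because a press is still required after assignment.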

In a subsequent 30–session condition, for three rats a 32–s no–consequence interval (NCI) separated the end of each EXT component from the beginning of the next reinforcement component whenever the components occurred in this order. The NCI was unsignaled and consisted of the absence of consequences for pressing the levers. Throughout the NCI the houselight and the white noise remained on. For the other three rats, the NCI was added between the reinforcement and the EXT component whenever the components occurred in this order. Therefore, for one group of rats the NCI occurred at the end of the EXT component and for the other group the NCI occurred at the beginning of the EXT component. Throughout the experiment, sessions were conducted daily, seven days a week, always at the same hour.
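The ordering of components and the placement of the NCI in the two groups can be made concrete with a short sketch. The code below is a hypothetical reconstruction from the verbal description, not the original control program; the names `build_session` and `nci_position` are assumptions introduced for illustration. It shuffles the order within each reinforcement–EXT pair and then inserts an NCI only at the transitions specified for each group.

```python
import random

RI, EXT, NCI = "RI", "EXT", "NCI"
# Durations taken from the Procedure section (seconds).
DURATION_S = {RI: 20, EXT: 60, NCI: 32}


def build_session(n_pairs=30, nci_position=None, rng=None):
    """Return the ordered list of components for one session.

    nci_position: None (baseline), "end" (NCI after an EXT component that
    is followed by RI), or "beginning" (NCI after an RI component that is
    followed by EXT). Hypothetical helper, for illustration only.
    """
    if nci_position not in (None, "end", "beginning"):
        raise ValueError("nci_position must be None, 'end' or 'beginning'")
    rng = rng or random.Random()
    components = []
    for _ in range(n_pairs):
        pair = [RI, EXT]
        rng.shuffle(pair)  # the order within each pair is random
        components.extend(pair)
    if nci_position is None:
        return components
    before, after = (EXT, RI) if nci_position == "end" else (RI, EXT)
    with_nci = []
    for current, following in zip(components, components[1:] + [None]):
        with_nci.append(current)
        if current == before and following == after:
            with_nci.append(NCI)
    return with_nci
```

Because every pair contains one component of each type, at most two components of the same type can occur in succession, as stated in the Procedure.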

RESULTS

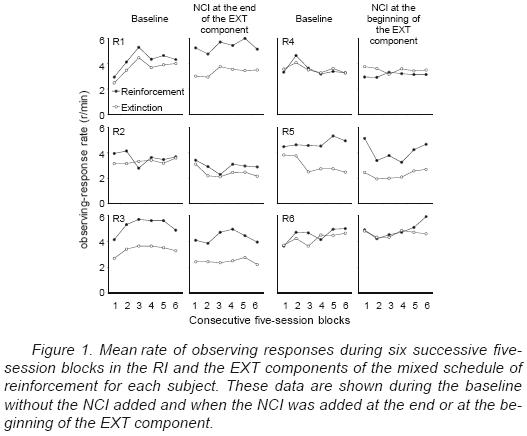

Figure 1 shows individual mean observing–response rates during both the RI and the EXT components of the mixed schedule of reinforcement across six successive blocks of five sessions. The two columns at the left show the data for the three rats during the baseline and during the condition with the NCI added at the end of the EXT component. The two columns at the right show the data for the rats exposed to the NCI at the beginning of the EXT component. The observing rates were corrected by excluding stimulus duration from the duration of each component of the mixed schedule of reinforcement.
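The correction applied to the observing rates amounts to removing stimulus time from the time base. A minimal sketch follows; the function name, the unit (responses per minute), and the numbers in the usage example are assumptions for illustration and are not taken from the data.

```python
def corrected_rate(n_responses, component_time_s, stimulus_time_s):
    """Responses per minute, excluding time during which stimuli were on.

    Presses during the stimuli had no programmed consequences, so stimulus
    time is subtracted from the component time before computing the rate.
    """
    available_s = component_time_s - stimulus_time_s
    if available_s <= 0:
        raise ValueError("no stimulus-free time in this component")
    return 60.0 * n_responses / available_s


if __name__ == "__main__":
    # Hypothetical session: 30 observing responses in 1800 s of EXT,
    # 300 s of which were occupied by S- presentations.
    print(corrected_rate(30, 1800, 300))
```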

For Rats 1, 3 and 5, observing–response rate was higher during the reinforcement than during the EXT component during the baseline. For the rest of the rats, observing–response rates during the baseline were generally similar in the two components of the mixed schedule. Adding the NCI, either at the end or at the beginning of the EXT component, did not have systematic effects on the relative rate of observing responses during the EXT and the reinforcement components. For Rats 1, 2 and 3, adding the NCI at the end of the EXT component resulted in a decrease in observing–response rate during the EXT component relative to baseline responding. Observing–response rate during the reinforcement component decreased for Rats 2 and 3 and increased for Rat 1 when the NCI was added at the end of the EXT component. For all the subjects that were exposed to the NCI at the beginning of the EXT component, observing–response rate did not vary systematically relative to the baseline when the NCI was added.

To facilitate the comparison of the observing responses during the EXT and the reinforcement components relative to the baseline, Figure 2 shows the rate of observing responses during each component as a percentage of the rate of observing responses during the baseline. Adding the NCI at the end of the EXT component resulted in a rate of observing that was lower than that found during the baseline without the NCI for the three rats. The presentation of the NCI at the beginning of the EXT component did not have systematic effects on observing–response rate relative to the baseline. When the NCI was added at the end of the EXT component, the observing–response rates during the reinforcement component varied unsystematically between subjects relative to the baseline condition. The NCI added at the beginning of the EXT component did not have systematic effects on the rate of observing during the reinforcement component relative to the baseline.
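The percentage–of–baseline measure plotted in Figure 2 can be expressed as a one–line calculation. The sketch below is illustrative only: the function name is hypothetical, and it is an assumption that each block's rate is divided by a single baseline rate for the same rat and component, since the exact baseline value used is not specified in the text.

```python
def percent_of_baseline(condition_rate, baseline_rate):
    """Express a response rate as a percentage of the baseline rate."""
    if baseline_rate <= 0:
        raise ValueError("baseline rate must be positive")
    return 100.0 * condition_rate / baseline_rate


if __name__ == "__main__":
    # Hypothetical rates (responses per minute), not taken from the data.
    print(percent_of_baseline(1.5, 3.0))
```

Values below 100 indicate a decrease relative to the baseline and values above 100 an increase.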

Figure 3 shows the mean individual food–response rate across the six consecutive five–session blocks during the baseline and during the condition with the NCI added at the end or at the beginning of the EXT component. Food–response rate was higher during the reinforcement than during the EXT component for all rats throughout the experiment and did not vary systematically when the NCI was added.

To determine whether the addition of the NCI indeed modulated the obtained temporal separation between S– presentations and the events occurring during the reinforcement component, the interval between the last S– during the EXT component and the first presentation of the S+ or the reinforcer was calculated. Figure 4 shows the mean interval between the S– and the S+ and between the S– and the reinforcer across the six consecutive five–session blocks during the baseline and during the condition with the NCI added at the end or at the beginning of the EXT component. As expected from adding the NCI at the end of the EXT component, the interval between the S– and the S+ and between the S– and the reinforcer notably increased for the three rats. In contrast, the intervals remained generally unchanged between the baseline and the condition with the NCI at the beginning of the EXT component. For all rats, the interval between the S– and the S+ was shorter than the interval between the S– and the reinforcer delivery.
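The interval measure described above can be sketched as a pass over a time–stamped event record. The event format, the labels ("S-", "S+", "SR" for the reinforcer), and the function name are assumptions made for illustration; the sketch also simplifies the analysis by measuring from the most recent S– onset to the next target event without checking component boundaries.

```python
def last_to_first_intervals(events, target):
    """Intervals (s) from the last S- to the first subsequent target event.

    events: iterable of (time_s, label) tuples sorted by time.
    target: label of the event of interest, e.g. "S+" or "SR".
    """
    intervals = []
    last_s_minus = None
    for time_s, label in events:
        if label == "S-":
            # A later S- replaces an earlier one, so only the last counts.
            last_s_minus = time_s
        elif label == target and last_s_minus is not None:
            intervals.append(time_s - last_s_minus)
            # Reset so that only the first target after the S- is counted.
            last_s_minus = None
    return intervals


if __name__ == "__main__":
    # Hypothetical record, not taken from the data.
    record = [(10, "S-"), (40, "S-"), (65, "S+"), (70, "SR"),
              (75, "S+"), (100, "S-"), (130, "SR")]
    print(last_to_first_intervals(record, "S+"))
    print(last_to_first_intervals(record, "SR"))
```

Averaging the returned intervals per session would give values comparable in kind to those summarized in Figure 4.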

Table 1 shows the individual mean number of reinforcers per session across the successive five–session blocks during the baseline condition without the NCI added to the EXT component and during the condition with the added NCI at the end or at the beginning of the EXT component. The number of reinforcers per session did not vary systematically between groups during the baseline condition or when the NCI was added to the EXT component.

DISCUSSION

The results of the present experiment showed that when the temporal contiguity between the S– and the reinforcement component was avoided, the S– sustained a lower rate of observing responses than when intermittent contiguities between the S– and the reinforcement component were allowed. These results suggest that observing responses occurring during the EXT component are not independent from the experimental events occurring during the reinforcement component.

One explanation for the present data is that the S– functioned as a conditioned reinforcer due to its occasional contiguity with the reinforcement component. When the minimum interval between an S– and the reinforcement component was lengthened, the reinforcing properties of the S– decreased to some extent. These results are comparable with previous studies that demonstrated that the value of a stimulus as a conditioned reinforcer decreased as the temporal distance between the stimulus and the primary reinforcer increased (Bersh, 1951; Jenkins, 1950).

The fact that observing–response rate did not vary systematically for the group of rats exposed to the NCI added at the beginning rather than at the end of the EXT component eliminates alternative explanations for the results of the present experiment. For example, adding an NCI at the end of the EXT component lengthened the interreinforcement interval (IRI); thus, it was conceivable that observing–response rate could have decreased due to the reduction in primary reinforcer frequency (Escobar & Bruner, 2002; Shahan, 2002). Adding the NCI at the beginning of the EXT component lengthened the IRI to the same extent, however, without decreasing observing–response rate.

Another alternative explanation could have been that the results of the present experiment resulted from the intermittent reinforcement of observing responses with the S+. It could have been argued that when the NCI was added at the end of the EXT component, the inter–S+ interval was lengthened, thus decreasing observing–response rate during the EXT component relative to the baseline condition without the NCI. However, this was not the case in the present experiment, in which the reduction in observing–response rate during the EXT component was determined by the temporal separation between the EXT and the reinforcement components only when the components were presented in this order.

The present results are also congruent with an associative conception of conditioned reinforcement in terms of context (e.g., Fantino, 2001). Specifically, a stimulus functions as a conditioned reinforcer if it signals a reduction in time to the subsequent reinforcer relative to the duration of the interreinforcement interval (e.g., Fantino, 1977). According to Fantino (1977), the S+ functions as a conditioned reinforcer for observing because it reduces the delay to the subsequent reinforcer delivery relative to the compound duration of the EXT and the reinforcement components. In comparison, the S– signals a longer delay to the reinforcer delivery and should not reinforce observing. The present findings can be reconciled with a delay–reduction explanation. As expected, the S+ functioned as a conditioned reinforcer because of its temporal proximity to reinforcer delivery. The S– functioned as a less effective conditioned reinforcer given that it signals a longer delay to the reinforcer delivery. However, the S– also signals a reduction in the delay to the subsequent reinforcer relative to the stimuli present in the experimental chamber before the experimental sessions. This interpretation suggests an explanation for the fact that the S– associated with an EXT component functioned as a conditioned reinforcer in some studies (Lieberman, 1972; Perone & Baron, 1980; Perone & Kaminski, 1992). This finding, which was considered incongruent with an explanation of conditioned reinforcement in terms of the association of stimuli and reinforcers, may have resulted from the accidental association between the S– and the reinforcement component. However, further research is necessary to support such a statement.
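The delay–reduction relation invoked above can be illustrated numerically. The sketch below uses a common statement of the delay–reduction hypothesis, (T − t) / T, where T is the mean time to the reinforcer in the comparison context and t is the mean time to the reinforcer signaled by the stimulus. It is not an analysis performed in the present study; the function name and all delay values are hypothetical and were chosen only to show the direction of the effect.

```python
def delay_reduction(t_context_s, t_stimulus_s):
    """Relative reduction in delay to the reinforcer signaled by a stimulus.

    Positive values mean the stimulus signals a shorter delay than the
    comparison context; negative values mean it signals a longer one.
    """
    if t_context_s <= 0:
        raise ValueError("context delay must be positive")
    return (t_context_s - t_stimulus_s) / t_context_s


if __name__ == "__main__":
    # Hypothetical delays in seconds, for illustration only.
    print(delay_reduction(80, 10))   # stimulus close to the reinforcer
    print(delay_reduction(80, 50))   # stimulus farther from the reinforcer
    print(delay_reduction(80, 100))  # stimulus signaling a longer delay
```

Under these assumed values, lengthening the delay signaled by a stimulus (as the NCI at the end of the EXT component does for the S–) lowers the computed delay reduction, whereas choosing a longer comparison context raises it.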

Although the results of the present study were generally consistent across subjects, the decrease in observing responses during the EXT component when the NCI was added at the end of the EXT component varied from close to 50% of baseline responding for Rats 2 and 3 to nearly 98% for Rat 1 in the different blocks of sessions. A possible explanation for the lack of more consistent effects is that even though the NCI duration imposed a minimum interval between the S– and both the S+ and reinforcer delivery, the maximum interval could still vary from the minimum interval programmed to the total duration of the EXT component. Given that two EXT components could occur successively, the maximum interval between the beginning of an S– and the S+ or reinforcer delivery could have been as long as 120 s. For example, if an S– was presented at the beginning and later at the end of an EXT component, it would be expected that a stimulus signaling both a long and a short interval to the reinforcer delivery would function as a neutral stimulus. As a consequence, it would be expected that the NCI between the EXT and the reinforcement component would have only slight or no effects on observing–response rate. Possibly, if the minimum and the maximum intervals between the S– and the reinforcement component are kept constant, adding an interval between the S– and the reinforcement component may yield stronger effects than those obtained in the present study.

The results suggest that it is necessary to separate the components of the mixed schedule of reinforcement in observing procedures. In observing procedures, the reinforcement and the extinction components of the mixed schedule are traditionally presented one immediately after the other with no blackout between components (Auge, 1974; Gaynor & Shull, 2002; Shahan et al., 2006). The results of the present experiment suggest that such a practice may result in observing within the EXT component being controlled to some extent by the events occurring during the reinforcement component. A similar effect concerning the competition of stimulus control using multiple schedules of reinforcement was suggested by Pierce and Cheney (2004), and blackouts have been implemented to avoid such confounding effects (e.g., Grimes & Shull, 2001).

1 The experiment reported here was part of a doctoral dissertation of the first author supported by the fellowship 176012 from CONACYT. This manuscript was prepared at West Virginia University during a post–doctoral visit of the first author that was supported by the PROFIP of DGAPA–UNAM. The first author wishes to express his debt to Richard L. Shull for his invaluable and continuing contributions and to Florente López for his insightful comments and suggestions.

REFERENCES

Auge, R. J. (1974). Context, observing behavior, and conditioned reinforcement. Journal of the Experimental Analysis of Behavior, 22, 525–533.

Bersh, P. J. (1951). The influence of two variables upon the establishment of a secondary reinforcer for operant responses. Journal of Experimental Psychology, 41, 62–73.

Branch, M. N. (1983). Observing observing. Behavioral and Brain Sciences, 6, 705.

Case, D. A., Fantino, E., & Wixted, J. (1985). Human observing: Maintained by negative informative stimuli only if correlated with improvement in response efficiency. Journal of the Experimental Analysis of Behavior, 43, 289–300.

Dinsmoor, J. A. (1983). Observing and conditioned reinforcement. Behavioral and Brain Sciences, 6, 693–728.

Dinsmoor, J. A., Mulvaney, D. E., & Jwaideh, A. R. (1981). Conditioned reinforcement as a function of duration of stimulus. Journal of the Experimental Analysis of Behavior, 36, 41–49.

Escobar, R., & Bruner, C. A. (2002). Efectos de la frecuencia de reforzamiento y la duración del componente de extinción en un programa de reforzamiento mixto sobre las respuestas de observación en ratas. Revista Mexicana de Análisis de la Conducta, 28, 41–46.

Fantino, E. F. (1977). Conditioned reinforcement: Choice and information. In W. K. Honig & J. E. R. Staddon (Eds.), Handbook of Operant Behavior (pp. 288–339). Englewood Cliffs, NJ: Prentice Hall.

Fantino, E. F. (2001). Context: A central concept. Behavioural Processes, 54, 95–110.

Fantino, E. F., & Case, D. A. (1983). Human observing: Maintained by stimuli correlated with reinforcement but not extinction. Journal of the Experimental Analysis of Behavior, 40, 193–210.

Gaynor, S. T., & Shull, R. L. (2002). The generality of selective observing. Journal of the Experimental Analysis of Behavior, 77, 171–187.

Grimes, J. A., & Shull, R. L. (2001). Response–independent milk delivery enhances persistence of pellet–reinforced lever pressing by rats. Journal of the Experimental Analysis of Behavior, 76, 179–194.

Jenkins, W. O. (1950). A temporal gradient of derived reinforcement. American Journal of Psychology, 63, 237–243.

Kelleher, R. T., & Gollub, L. R. (1962). A review of positive conditioned reinforcement. Journal of the Experimental Analysis of Behavior, 5, 543–597.

Kelleher, R. T., Riddle, W. C., & Cook, L. (1962). Observing responses in pigeons. Journal of the Experimental Analysis of Behavior, 5, 3–13.

Lieberman, D. A. (1972). Secondary reinforcement and information as determinants of observing behavior in monkeys (Macaca mulatta). Learning and Motivation, 3, 341–358.

Lieving, G. A., Reilly, M. P., & Lattal, K. A. (2006). Disruption of responding maintained by conditioned reinforcement: Alterations in response–conditioned reinforcer relations. Journal of the Experimental Analysis of Behavior, 86, 197–209.

Mueller, K. L., & Dinsmoor, J. A. (1984). Testing the reinforcing properties of S–: A replication of Lieberman's procedure. Journal of the Experimental Analysis of Behavior, 41, 17–25.

Pierce, W. D., & Cheney, C. D. (2004). Behavior analysis and learning (3rd ed.). Mahwah, NJ: Erlbaum.

Perone, M., & Baron, A. (1980). Reinforcement of human observing behavior by a stimulus correlated with extinction or increased effort. Journal of the Experimental Analysis of Behavior, 34, 239–261.

Perone, M., & Kaminski, B. J. (1992). Conditioned reinforcement of human observing behavior by descriptive and arbitrary verbal stimuli. Journal of the Experimental Analysis of Behavior, 58, 557–575.

Shahan, T. A. (2002). Observing behavior: Effects of rate and magnitude of primary reinforcement. Journal of the Experimental Analysis of Behavior, 78, 161–178.

Shahan, T. A., Podlesnik, C. A., & Jimenez–Gomez, C. (2006). Matching and conditioned reinforcement rate. Journal of the Experimental Analysis of Behavior, 85, 167–180.

Shull, R. L. (1983). Selective observing when the experimenter controls the duration of observing bouts. Behavioral and Brain Sciences, 6, 715.

Wyckoff, L. B., Jr. (1952). The role of observing responses in discrimination learning. Part I. Psychological Review, 66, 68–78.

Wyckoff, L. B., Jr. (1969). The role of observing responses in discrimination learning. Part II. In D. P. Hendry (Ed.), Conditioned reinforcement (pp. 237–260). Homewood, IL: Dorsey Press.